The Google Professional Data Engineer certification is a highly respected credential in the data engineering field. It validates the skills and knowledge required to design, build, operationalize, and secure data processing systems using Google Cloud Platform (GCP) technologies. The certification exam tests a candidate’s understanding of GCP tools and services, data engineering best practices, machine learning concepts, and data visualization techniques. Achieving the Google Professional Data Engineer certification demonstrates to employers and peers that an individual possesses the expertise and skills necessary to design and deploy scalable, reliable, and secure data solutions on GCP.

To help candidates prepare for the Google Professional Data Engineer exam, cheat sheets have become popular study aids. Cheat sheets condense a large amount of information into a concise and easily digestible format, making them an effective tool for memorization and quick reference. In this blog post, we’ll take a closer look at a cheat sheet for the Google Professional Data Engineer exam and how it can help you pass the certification exam.

How to Prepare Your Own Google Professional Data Engineer (GCP) Cheat Sheet

Preparing a cheat sheet can be a great way to consolidate the key information you need to remember for an exam. Here are some steps you can follow to prepare your own cheat sheet for the Google Professional Data Engineer (GCP) exam:

- Make sure you have a good understanding of what topics the exam covers by reviewing the exam objectives. You can find the exam guide on the official Google Cloud certification website.

- Look for the most heavily weighted topics in the exam objectives. These are the areas you’ll want to focus on and include on your cheat sheet.

- Use Google Cloud documentation, online courses, and other study materials to gather the information you need to include on your cheat sheet.

- Once you have your resources, organize the information in a way that makes sense to you. This could be through bullet points, diagrams, or other visual aids.

- Remember that your cheat sheet is a study and review aid: Google Cloud certification exams are proctored, and you cannot bring notes into the exam. Keep it concise and easy to scan, and avoid including too much information.

- Practice using your cheat sheet while studying for the exam to make sure it contains all the information you need and is easy to use.

- Make adjustments to refine your cheat sheet as you continue to study and discover new information.

- To save space on your cheat sheet, consider using abbreviations and acronyms for longer words or phrases. Just make sure you’re consistent with your abbreviations and that you can easily remember what they stand for.

- Sometimes, including examples and case studies can be a helpful way to solidify your understanding of a concept. Consider including a few on your cheat sheet to refer to during your final review.

- Keep your cheat sheet with you as you study and review it regularly. This will help you commit the information to memory and ensure that you’re familiar with the content when it comes time for the exam.

Google Professional Data Engineer (GCP) Cheat Sheet

One of the benefits of using a cheat sheet is that it can help you identify knowledge gaps and focus on areas where you need additional study. By reviewing this cheat sheet and taking practice exams, you can identify areas where you need to improve your knowledge and focus your study efforts accordingly.

1. Designing data processing systems:

A. Understand the different data processing systems on GCP:

- Cloud Dataflow: A fully-managed service for batch and stream data processing using the Apache Beam programming model.

- Cloud Dataproc: A fully-managed service for running Apache Hadoop, Spark, and Hive jobs on a cluster of virtual machines.

- Cloud Pub/Sub: A fully-managed, asynchronous publish/subscribe messaging service for ingesting event streams and decoupling the services that produce and consume them.

- Cloud Composer: A fully-managed service for creating and managing workflows with Apache Airflow.
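Cloud Composer runs Apache Airflow, which models a workflow as a directed acyclic graph (DAG) of tasks. The sketch below is not Airflow code: it uses Python's standard-library `graphlib` module to show how a valid execution order follows from declared dependencies. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical daily ETL workflow: each task maps to the set of upstream
# tasks that must finish before it can start.
etl_dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_join": {"extract_orders", "extract_customers"},
    "load_bigquery": {"transform_join"},
    "notify_team": {"load_bigquery"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid run order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(etl_dag)
print(order)
```

The two extract tasks do not depend on each other, so a scheduler is free to run them in parallel; only their position relative to `transform_join` is fixed.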

B. Know how to design and implement data processing systems that are scalable, fault-tolerant, and secure:

- Scalability: Use scalable services like Dataflow or Dataproc, and use their autoscaling features (Dataflow autoscaling, Dataproc autoscaling policies) to add or remove resources as the workload changes.

- Fault-tolerance: Use distributed processing frameworks like Apache Beam or Spark, and ensure redundancy in data storage and processing.

- Security: Use GCP security features like VPC, IAM, and encryption at rest and in transit.
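Fault tolerance and delivery guarantees go together: Pub/Sub delivers each message at least once by default, so after a timeout or crash a subscriber can receive the same message (with the same message ID) again. A common remedy is to make processing idempotent. The sketch below deduplicates on message ID with an in-memory set, which is a simplification (a real pipeline would keep this state durably); the `Message` and `IdempotentConsumer` names are hypothetical and are not the Pub/Sub client API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    message_id: str  # Pub/Sub assigns each published message a unique ID
    data: bytes


class IdempotentConsumer:
    """Applies each message's effect once, even if it is redelivered."""

    def __init__(self) -> None:
        self.seen_ids: set[str] = set()  # in-memory only: an assumption of this sketch
        self.total_bytes = 0

    def handle(self, msg: Message) -> bool:
        if msg.message_id in self.seen_ids:
            return False  # duplicate delivery: acknowledge it but skip the work
        self.seen_ids.add(msg.message_id)
        self.total_bytes += len(msg.data)
        return True


consumer = IdempotentConsumer()
deliveries = [Message("1", b"abc"), Message("2", b"de"), Message("1", b"abc")]
results = [consumer.handle(m) for m in deliveries]
print(results, consumer.total_bytes)  # [True, True, False] 5
```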

C. Understand the use cases for different data processing systems:

- Batch processing: Use Dataflow or Dataproc for processing large volumes of data at once, like data warehousing, data migration, or data archival.

- Stream processing: Use Dataflow for real-time data processing and analysis, like monitoring IoT devices, fraud detection, or real-time analytics.

- ETL: Use Dataflow or Dataproc for extracting data from various sources, transforming it, and loading it into a data warehouse or data lake.

Remember to always consider the cost and performance implications of each service, and choose the appropriate data processing system based on the specific requirements of your use case.
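For stream processing, Dataflow groups an unbounded stream into windows based on event time; Apache Beam's `FixedWindows` is the simplest kind. The pure-Python sketch below shows only the window-assignment arithmetic for fixed (tumbling) windows. It ignores watermarks, triggers, and late data, which Beam handles for you, and the event data is made up.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count (timestamp_seconds, key) events per fixed, non-overlapping window.

    Returns {(window_start, key): count}. Each event is assigned by the
    timestamp it carries (event time), not by the order in which it arrives.
    """
    counts = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical click events: (event time in seconds, page); the last one arrives late.
events = [(0, "home"), (15, "home"), (59, "cart"), (60, "home"), (75, "cart"), (30, "home")]
result = tumbling_window_counts(events, 60)
print(result)  # {(0, 'home'): 3, (0, 'cart'): 1, (60, 'home'): 1, (60, 'cart'): 1}
```

The late event `(30, "home")` still lands in the first window because assignment uses its own timestamp.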

2. Building and Operationalizing Data Processing Systems on GCP

A. Know how to build data processing pipelines using GCP services:

- Cloud Storage: A fully-managed object storage service for storing and accessing unstructured data at scale.

- Cloud Bigtable: A fully-managed NoSQL database service for storing and processing large amounts of data with low latency.

- Cloud SQL: A fully-managed relational database service for running MySQL, PostgreSQL, and SQL Server databases in the cloud.

- Cloud Spanner: A fully-managed relational database service for running globally-distributed and horizontally-scalable databases.

B. Understand how to deploy and manage data processing systems using GCP services:

- Kubernetes Engine: A fully-managed container orchestration service for deploying and managing containerized applications at scale.

- Compute Engine: An infrastructure-as-a-service offering for running virtual machines in the cloud. Google manages the underlying hardware, but you manage the operating system and software on each VM.

- App Engine: A fully-managed platform-as-a-service for building and deploying web and mobile applications.

C. Know how to monitor and troubleshoot data processing systems using Stackdriver (now Cloud Monitoring and Cloud Logging):

- Stackdriver: Google Cloud's integrated monitoring, logging, and diagnostics suite for GCP services, including Dataflow, Dataproc, Pub/Sub, and Composer. Its core products are now called Cloud Monitoring and Cloud Logging.

- Use Cloud Monitoring and Cloud Logging to monitor resource utilization, identify performance bottlenecks, and troubleshoot errors and issues in data processing pipelines.
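Logs are much easier to search and filter in Cloud Logging (part of what was Stackdriver) when they are structured. A minimal sketch, assuming your code runs where JSON lines written to stdout are parsed into structured log entries (GKE and Cloud Run do this); the field names other than `severity` and `message` are hypothetical.

```python
import json
import sys


def log_structured(severity: str, message: str, **fields) -> str:
    """Write one JSON log line to stdout and return it.

    Assumes a runtime whose logging agent parses JSON lines from stdout.
    There, "severity" sets the entry's severity level and the remaining
    keys appear in the entry's jsonPayload.
    """
    entry = {"severity": severity, "message": message, **fields}
    line = json.dumps(entry, sort_keys=True)
    print(line, file=sys.stdout, flush=True)
    return line


line = log_structured("ERROR", "row failed validation", pipeline="orders_etl", row_id=42)
```

You could then filter in Cloud Logging on a field such as `jsonPayload.pipeline` to isolate one pipeline's errors.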

Remember to follow best practices for building scalable, reliable, and secure data processing systems, including modular design, fault-tolerant architecture, and effective error handling and recovery.
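Effective error handling usually includes retrying transient failures (timeouts, dropped connections, rate limits) with exponential backoff. Google's client libraries ship their own retry settings, so treat the helper below as a hypothetical sketch of the pattern rather than a replacement for them.

```python
import random
import time


def call_with_retries(fn, max_attempts=5, base_delay=0.5, max_delay=30.0,
                      retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call fn(), retrying the listed exception types with capped exponential backoff.

    The delay cap grows as base_delay * 2**(attempt - 1), up to max_delay, and
    each wait is a random fraction of that cap ("full jitter") so that many
    failing workers do not retry in lockstep. Other exceptions propagate at once.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))


# Demo: a hypothetical call that fails twice, then succeeds.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


result = call_with_retries(flaky_fetch, sleep=lambda seconds: None)  # no real waiting in the demo
print(result, calls["count"])  # ok 3
```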

3. Designing and Implementing Data Storage Systems on GCP

A. Understand the different data storage systems on GCP:

- Cloud Storage: A fully-managed object storage service for storing and accessing unstructured data at scale.

- Cloud SQL: A fully-managed relational database service for running MySQL, PostgreSQL, and SQL Server databases in the cloud.

- Cloud Spanner: A fully-managed relational database service for running globally-distributed and horizontally-scalable databases.

- Cloud Bigtable: A fully-managed NoSQL database service for storing and processing large amounts of data with low latency.

B. Know how to design and implement data storage systems that are scalable, fault-tolerant, and secure:

- Scalability: Use horizontally-scalable services like Spanner or Bigtable, and design data schemas that support scaling.

- Fault-tolerance: Ensure redundancy and failover mechanisms in data storage and processing, and regularly test disaster recovery scenarios.

- Security: Use GCP security features like VPC, IAM, and encryption at rest and in transit.

C. Understand the use cases for different data storage systems:

- Structured data: Use Cloud SQL for storing and managing structured data, such as customer records, financial data, or inventory data.

- Unstructured data: Use Cloud Storage for storing and accessing unstructured data, such as multimedia files, documents, or logs.

- Time-series data: Use Cloud Bigtable for storing and processing time-series data, such as IoT sensor data, financial market data, or social media data.

Remember to choose the appropriate data storage system based on the specific requirements of your use case, and consider cost, performance, and maintenance implications of each service.
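Bigtable stores rows sorted lexicographically by row key, so key design drives both scalability and query speed. Keys that begin with a timestamp send all new writes to the same node and create a hotspot; a common alternative is to lead with a high-cardinality field such as a device ID. The sketch below also reverses the timestamp so the newest reading for a device sorts first. The `device#...` key layout is a hypothetical example, not a required format.

```python
# Upper bound for epoch milliseconds; 13 digits lasts until roughly year 2286.
MAX_MILLIS = 10**13 - 1


def sensor_row_key(device_id: str, event_millis: int) -> str:
    """Build a row key of the form 'device#<id>#<reversed timestamp>'.

    Subtracting from MAX_MILLIS reverses the sort order, and zero-padding to
    13 digits keeps lexicographic (byte) order consistent with numeric order.
    """
    if not 0 <= event_millis <= MAX_MILLIS:
        raise ValueError("event_millis out of range")
    return f"device#{device_id}#{MAX_MILLIS - event_millis:013d}"


older = sensor_row_key("thermo-7", 1_700_000_000_000)
newer = sensor_row_key("thermo-7", 1_700_000_060_000)
print(older)          # device#thermo-7#8299999999999
print(newer < older)  # True: the newer reading sorts first
```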

4. Building and Operationalizing Data Storage Systems

A. Migrating Data to GCP:

- Storage Transfer Service: A managed service for transferring large amounts of data into Cloud Storage. Sources include Amazon S3, Azure Blob Storage, lists of HTTP/HTTPS URLs, other Cloud Storage buckets, and on-premises file systems (through transfer agents).

- Database Migration Service: A fully managed service for migrating databases from on-premises or other cloud providers into Cloud SQL. Supports MySQL, PostgreSQL, and SQL Server sources.
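After any migration it is worth confirming that the target holds the same data as the source. A simple, hypothetical check (not a feature of Database Migration Service) is to compare row counts plus an order-independent checksum computed over rows fetched from each side. It assumes both database drivers return values that serialize identically, which you should verify for types such as floats, decimals, and timestamps.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, checksum) for rows, independent of row order.

    Each row is hashed on its own and the hashes are added modulo 2**256, so
    the same multiset of rows yields the same result however it is ordered.
    """
    count = 0
    checksum = 0
    for row in rows:
        # repr() of a tuple is a simple deterministic serialization for this sketch
        digest = hashlib.sha256(repr(tuple(row)).encode("utf-8")).digest()
        checksum = (checksum + int.from_bytes(digest, "big")) % 2**256
        count += 1
    return count, checksum


source_rows = [(1, "alice"), (2, "bob"), (3, "carol")]
target_rows = [(3, "carol"), (1, "alice"), (2, "bob")]  # same rows, different order
print(table_fingerprint(source_rows) == table_fingerprint(target_rows))  # True
```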

B. Managing Data Storage Systems:

- Cloud SQL: Fully managed relational database service. Supports MySQL, PostgreSQL, and SQL Server. Offers high availability, automatic backups, and automated patching. Instances can be scaled up or down by changing the machine type, and read replicas can absorb read traffic.

- Cloud Spanner: A globally distributed relational database service. Offers high scalability, strong consistency, and automatic sharding. Suitable for mission-critical applications that require high availability and low latency.

- Cloud Bigtable: A fully managed, wide-column NoSQL database service that scales to very large datasets while keeping latency low. Suitable for high-throughput applications such as time-series data analysis and IoT.
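Spanner (like Bigtable) divides tables into contiguous key ranges, or splits, served by different servers. That is why Spanner's schema guidance warns against monotonically increasing primary keys such as timestamps or sequence numbers: every new row lands in the last range, creating a hotspot. A version 4 UUID is one commonly recommended alternative. The toy model below, with made-up split boundaries over a hex key space, only illustrates the effect; real split points are managed by the service.

```python
import bisect
import uuid

# Made-up split boundaries dividing a hex-string key space into 4 ranges:
# [start, "4"), ["4", "8"), ["8", "c"), ["c", end)
SPLIT_POINTS = ["4", "8", "c"]


def split_for(key: str) -> int:
    """Return the index of the key range (split) that would hold this key."""
    return bisect.bisect_right(SPLIT_POINTS, key)


# Sequential IDs rendered as zero-padded hex all share a leading "0"...
sequential_keys = [f"{n:012x}" for n in range(10_000, 10_100)]
# ...while random UUIDv4 strings start with an effectively random hex digit.
uuid_keys = [str(uuid.uuid4()) for _ in range(1_000)]

print(sorted({split_for(k) for k in sequential_keys}))  # [0]: one hot split
print(sorted({split_for(k) for k in uuid_keys}))        # almost certainly [0, 1, 2, 3]
```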

C. Backup and Restore:

- Cloud Storage: A highly durable and available object storage service. Suitable for storing backups of data storage systems. Provides features such as versioning and lifecycle management.

- Cloud SQL: Offers automated backups and point-in-time recovery. Point-in-time recovery combines backups with the instance's transaction logs, so you can restore to any moment within the log retention period.
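The lifecycle management feature of Cloud Storage mentioned above is configured as JSON: a list of rules, each with an action and a condition. The sketch below builds such a configuration for a backup bucket; the 30-day and 365-day retention numbers are hypothetical, and the resulting file could be applied to a bucket with the gcloud CLI or the Cloud Console.

```python
import json


def backup_lifecycle_policy(coldline_after_days: int, delete_after_days: int) -> dict:
    """Build a Cloud Storage lifecycle configuration for a backup bucket.

    Objects move to the Coldline storage class `coldline_after_days` days after
    creation and are deleted `delete_after_days` days after creation.
    """
    if delete_after_days <= coldline_after_days:
        raise ValueError("delete_after_days must be greater than coldline_after_days")
    return {
        "lifecycle": {
            "rule": [
                {
                    "action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
                    "condition": {"age": coldline_after_days},
                },
                {
                    "action": {"type": "Delete"},
                    "condition": {"age": delete_after_days},
                },
            ]
        }
    }


policy = backup_lifecycle_policy(coldline_after_days=30, delete_after_days=365)
print(json.dumps(policy, indent=2))  # save as, e.g., lifecycle.json
```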

5. Designing and Implementing Data Analysis Systems:

Data analysis systems are essential for organizations that need to derive insights and value from their data. These systems must be scalable, fault-tolerant, and secure so that the data stays protected and the system can handle large volumes of it. This section covers how to design and implement data analysis systems on Google Cloud Platform (GCP).

A. Understand Different Data Analysis Systems on GCP:

- BigQuery is a cloud-based data warehouse that allows for high-performance SQL queries. It is a fully managed service and requires no infrastructure management.

- Cloud Dataflow is a fully managed service for developing and executing data processing pipelines. It provides a unified programming model for batch and streaming data processing.

- Cloud Dataproc is a managed Hadoop and Spark service that allows for scalable data processing.

B. Design and Implement Scalable, Fault-tolerant, and Secure Data Analysis Systems:

Designing and implementing a scalable, fault-tolerant, and secure data analysis system involves several key considerations, including:

- Choosing the right architecture: Selecting the appropriate architecture based on the system requirements and use case is critical. For example, a batch processing system may require a different architecture than a real-time streaming system.

- Ensuring scalability: The system should be able to handle large volumes of data and scale up or down as needed. It’s important to consider data partitioning, data sharding, and load balancing to ensure scalability.

- Ensuring fault-tolerance: The system should be designed to handle failures gracefully. This can be achieved by implementing redundancy, failover mechanisms, and automated recovery processes.

- Ensuring security: Data security is critical, and the system should be designed to ensure data privacy, confidentiality, and integrity. This may involve implementing access controls, encryption, and other security measures.
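Data partitioning and sharding both come down to routing each record to one of several partitions deterministically. The hypothetical helper below uses a stable hash so that every worker computes the same shard for a given key. Python's built-in `hash()` is randomized per process for strings, which makes it unsuitable here.

```python
import hashlib
from collections import Counter


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard in [0, num_shards) using a stable SHA-256 hash."""
    if num_shards < 1:
        raise ValueError("num_shards must be positive")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Hypothetical customer IDs spread over 4 shards.
load = Counter(shard_for(f"customer-{i}", 4) for i in range(10_000))
print(sorted(load.items()))  # roughly 2,500 keys per shard
```

One limitation of modulo hashing is that changing `num_shards` remaps most keys; consistent hashing is the usual fix when shards are added or removed often.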

C. Understand Use Cases for Different Data Analysis Systems:

- Ad-hoc querying may be best suited for BigQuery, which allows for high-performance SQL queries.

- Data visualization may be best suited for Looker Studio (formerly Google Data Studio), a data visualization and reporting tool that can connect to various data sources, including BigQuery.

- Machine learning may be best suited for Vertex AI (the successor to Google Cloud AI Platform), which provides a suite of machine learning tools and services.

6. Building and Operationalizing Data Analysis Systems:

A. Loading Data into BigQuery:

- Cloud Storage: Cloud Storage is Google Cloud's object storage service. Files staged there in formats such as CSV, JSON, Avro, Parquet, or ORC can be loaded into BigQuery with batch load jobs.

- Cloud Pub/Sub: Cloud Pub/Sub is a messaging service that lets independent applications send and receive messages asynchronously. It can stream data into BigQuery in near real time, either through a Dataflow pipeline or directly with a BigQuery subscription.

- Cloud Dataflow: Cloud Dataflow is a fully-managed service for transforming and enriching data in real-time and batch modes. It can be used to process data before loading it into BigQuery.
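When you stage JSON files in Cloud Storage for a BigQuery load job, BigQuery expects newline-delimited JSON: one complete object per line rather than a single JSON array. A minimal sketch of producing that format; the field names are hypothetical, and converting datetimes to ISO 8601 strings is an assumption you should check against BigQuery's accepted TIMESTAMP formats.

```python
import json
from datetime import datetime, timezone


def _encode(value):
    """json.dumps fallback: turn datetimes into ISO 8601 strings."""
    if isinstance(value, datetime):
        return value.isoformat()
    raise TypeError(f"cannot serialize {type(value).__name__}")


def to_ndjson(records) -> str:
    """Serialize dicts as newline-delimited JSON (one object per line)."""
    return "".join(
        json.dumps(record, default=_encode, separators=(",", ":")) + "\n"
        for record in records
    )


rows = [
    {"order_id": 1, "amount": 19.99, "created_at": datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)},
    {"order_id": 2, "amount": 5.0, "created_at": datetime(2024, 1, 1, 12, 5, tzinfo=timezone.utc)},
]
payload = to_ndjson(rows)
print(payload, end="")
```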

B. Creating and Managing BigQuery Tables, Views, and Datasets:

- BigQuery Tables: BigQuery Tables are the basic building blocks of BigQuery datasets. They store data in a columnar format and can be created using SQL or the BigQuery API.

- BigQuery Views: BigQuery Views are virtual tables defined by a SQL query over one or more BigQuery Tables; the query runs each time the view is referenced. They are useful for creating simplified or aggregated views of data.

- BigQuery Datasets: BigQuery Datasets are containers for organizing and managing BigQuery Tables and Views. They are a natural unit for access control, and each dataset's location determines where its data is stored.

C. Optimizing BigQuery Queries and Using BigQuery Features:

- Partitioning: BigQuery can partition tables by ingestion time, by a time-unit column (DATE, TIMESTAMP, or DATETIME), or by integer range. Queries that filter on the partitioning column scan only the matching partitions, which reduces both cost and query time.

- Clustering: BigQuery can cluster a table on up to four columns, sorting its storage blocks by those columns' values. Queries that filter or aggregate on the clustered columns can skip blocks, which further improves performance.

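- Time Travel and Table Decorators: BigQuery lets you query data as it existed at an earlier point in time, which is useful for analyzing changes or debugging data issues. Legacy SQL does this with table decorators; GoogleSQL (standard SQL) uses the FOR SYSTEM_TIME AS OF clause, within the table's time travel window (up to seven days).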

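- Query Optimization: Inspect the query execution plan to find slow stages, select only the columns you need instead of using SELECT *, filter on partitioned and clustered columns, and take advantage of cached query results, which BigQuery reuses automatically when an identical query is rerun and the underlying data has not changed.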
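Partitioning, clustering, and time travel are all expressed in BigQuery's GoogleSQL. The sketch below simply assembles the statements as strings so the syntax is easy to see; the project, dataset, table, and column names are hypothetical, and in practice you would run the SQL in the console, with the `bq` tool, or through a client library.

```python
from typing import Dict, List, Optional

MAX_CLUSTER_COLUMNS = 4  # BigQuery accepts at most four clustering columns


def partitioned_table_ddl(table: str, columns: Dict[str, str], partition_column: str,
                          cluster_columns: List[str],
                          expiration_days: Optional[int] = None) -> str:
    """Build CREATE TABLE DDL partitioned by day on a TIMESTAMP column and clustered."""
    if columns.get(partition_column) != "TIMESTAMP":
        raise ValueError("this sketch expects a TIMESTAMP partition column")
    if not 1 <= len(cluster_columns) <= MAX_CLUSTER_COLUMNS:
        raise ValueError("use between 1 and 4 clustering columns")
    column_defs = ",\n".join(f"  {name} {sql_type}" for name, sql_type in columns.items())
    ddl = (
        f"CREATE TABLE `{table}` (\n{column_defs}\n)\n"
        f"PARTITION BY DATE({partition_column})\n"
        f"CLUSTER BY {', '.join(cluster_columns)}"
    )
    if expiration_days is not None:
        ddl += f"\nOPTIONS (partition_expiration_days = {expiration_days})"
    return ddl + ";"


def time_travel_count(table: str, hours_ago: int) -> str:
    """Count rows as the table existed `hours_ago` hours ago (within the time travel window)."""
    return (
        f"SELECT COUNT(*) AS row_count\nFROM `{table}`\n"
        f"FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL {hours_ago} HOUR);"
    )


TABLE = "my-project.analytics.events"
ddl_sql = partitioned_table_ddl(
    TABLE,
    {"event_ts": "TIMESTAMP", "customer_id": "STRING", "event_type": "STRING", "amount": "NUMERIC"},
    partition_column="event_ts",
    cluster_columns=["customer_id", "event_type"],
    expiration_days=90,
)
tt_sql = time_travel_count(TABLE, hours_ago=1)
print(ddl_sql)
print(tt_sql)
```

Queries that filter on `event_ts` (for example `WHERE event_ts >= TIMESTAMP('2024-01-01')`) let BigQuery skip non-matching partitions, and filters on `customer_id` benefit further from clustering.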

7. Designing and implementing machine learning models:

A. Understand the different machine learning services on GCP:

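- Cloud ML Engine: a managed service for training and deploying machine learning models. It was later renamed AI Platform and is now part of Vertex AI.

- Cloud AutoML: tools for training custom models on your own data with minimal code. Pre-trained models are offered separately through APIs such as the Cloud Vision and Cloud Natural Language APIs.

- Cloud AI Platform: a platform for managing the end-to-end machine learning workflow, since succeeded by Vertex AI, which unifies AutoML and custom training.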

B. Know how to design and implement machine learning models that are:

- Scalable: design models that can handle large datasets and perform well under heavy loads.

- Fault-tolerant: build training and serving pipelines that handle errors and recover gracefully, for example by checkpointing long-running training jobs.

- Secure: ensure data privacy and protect against potential attacks.

C. Understand the use cases for different machine learning models, such as:

- Image classification: identify and classify objects within an image.

- Natural language processing: analyze and understand human language, including sentiment analysis and language translation.

- Recommendation systems: provide personalized recommendations for products or content based on user behavior and preferences.
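To make the recommendation use case concrete, here is a toy item-based collaborative filtering sketch: items are compared by the cosine similarity of their rating vectors, and items a user has not rated are scored by their similarity to the items the user did rate. This is one classic technique chosen for illustration, not a GCP API, and the ratings are invented.

```python
import math

# Invented user -> {item: rating} data
ratings = {
    "ana": {"book_a": 5, "book_b": 4},
    "ben": {"book_a": 4, "book_b": 5, "book_c": 5},
    "chloe": {"book_a": 1, "book_d": 5},
}


def item_vectors(user_ratings):
    """Pivot to item -> {user: rating} so items can be compared to each other."""
    vectors = {}
    for user, items in user_ratings.items():
        for item, score in items.items():
            vectors.setdefault(item, {})[user] = score
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def recommend(user, user_ratings, top_n=2):
    """Rank items the user has not rated by rating-weighted similarity to rated items."""
    vectors = item_vectors(user_ratings)
    seen = user_ratings[user]
    scores = {
        item: sum(cosine(vectors[item], vectors[rated]) * r for rated, r in seen.items())
        for item in vectors
        if item not in seen
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ana", ratings))  # ['book_c', 'book_d']
```

`book_c` ranks first for ana because ben, whose ratings resemble hers, rated it highly.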

8. Building and Operationalizing Machine Learning Models

A. Training and Deploying Machine Learning Models:

- Understand how to train machine learning models using tools such as Cloud ML Engine, Cloud AutoML, and Cloud AI Platform (now consolidated into Vertex AI).

- Know how to choose the appropriate algorithm, hyperparameters, and data preprocessing techniques for your problem.

- Understand how to deploy machine learning models to a production environment, such as a web application or mobile app.
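One preprocessing rule applies whichever service you use: compute preprocessing statistics on the training data only, then reuse them unchanged on validation data and at prediction time. Otherwise information leaks from evaluation data into the model, and the features seen in production can diverge from those seen in training. A minimal standard-library sketch of z-score scaling, with made-up numbers:

```python
import statistics


def fit_standardizer(values):
    """Learn (mean, population standard deviation) from training data only."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        raise ValueError("feature is constant; z-scores are undefined")
    return mean, stdev


def transform(values, params):
    """Apply previously learned scaling parameters to any data split."""
    mean, stdev = params
    return [(v - mean) / stdev for v in values]


train = [10.0, 12.0, 14.0, 16.0]
validation = [18.0, 20.0]
params = fit_standardizer(train)  # learned from training data only
train_scaled = transform(train, params)
validation_scaled = transform(validation, params)
print(params, validation_scaled)
```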

B. Evaluating Machine Learning Models:

- Know how to evaluate machine learning models using metrics such as accuracy, precision, recall, and F1 score.

- Understand how to use confusion matrices to analyze the performance of a machine learning model.

- Know how to use cross-validation techniques to assess the generalization performance of a machine learning model.
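The evaluation ideas above fit in a few lines of plain Python. The sketch below derives confusion-matrix counts for a binary classifier, computes accuracy, precision, recall, and F1 from them, and generates k-fold cross-validation splits. The labels are invented; real projects would typically use a library such as scikit-learn and shuffle (or stratify) the data before splitting.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Confusion-matrix counts plus accuracy, precision, recall, and F1."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "tp": tp, "fp": fp, "fn": fn, "tn": tn,
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision, "recall": recall, "f1": f1,
    }


def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs; every index is validated exactly once.

    Folds are contiguous here for clarity; shuffle the data first in practice.
    """
    if not 1 < k <= n:
        raise ValueError("need 1 < k <= n")
    start = 0
    for fold in range(k):
        size = n // k + (1 if fold < n % k else 0)
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, val_idx
        start += size


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)  # tp=3, fp=1, fn=1, tn=3; accuracy, precision, recall, and F1 all 0.75

for train_idx, val_idx in k_fold_indices(10, 3):
    print(len(train_idx), val_idx)
```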

C. Using Machine Learning Models in Production Environments:

- Understand how to use machine learning models in a production environment and how to integrate them with other systems.

- Know how to set up monitoring systems to track the performance of machine learning models in production.

- Understand how to identify and handle issues such as data drift, model decay, and bias in production.
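Data drift can be quantified by comparing a feature's distribution in production with its distribution in the training data. The Population Stability Index (PSI), the sum over bins of (a - e) * ln(a / e) where e and a are the expected and actual bin shares, is one common measure, chosen here for illustration. The sketch below is a standard-library version with hypothetical bin edges. The thresholds often quoted for PSI (below 0.1 means little shift, above 0.25 a significant shift) are industry rules of thumb, not GCP settings.

```python
import bisect
import math


def bin_shares(values, edges, eps=1e-6):
    """Fraction of values in each bin defined by sorted edges (len(edges) + 1 bins).

    Empty bins get a small eps so the logarithm in PSI stays finite.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


edges = [2.5, 5.0, 7.5]
training_values = [1, 2, 3, 4, 5, 6, 7, 8]
serving_values = [9, 9, 8, 9, 8, 9, 9, 8]  # shifted toward the top bin
print(psi(training_values, training_values, edges))        # 0.0
print(psi(training_values, serving_values, edges) > 0.25)  # True
```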

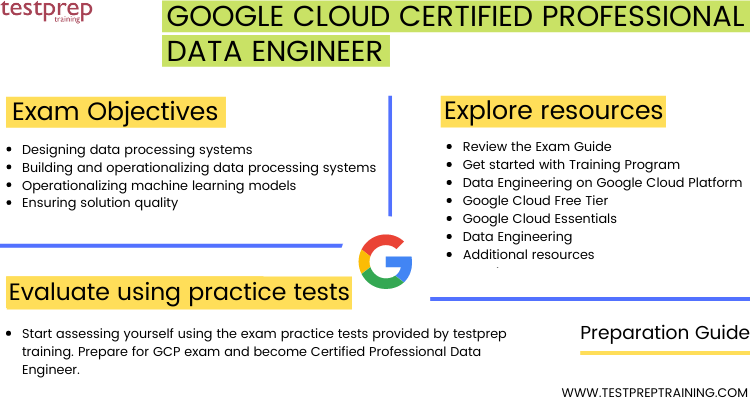

Exam Preparation Resources for Google Professional Data Engineer (GCP) exam

Preparing for the Google Professional Data Engineer certification exam requires a solid understanding of GCP data services, data processing and transformation, data storage, data analysis, machine learning, and security best practices. Here are some resources that can help you prepare for the exam:

Official Google Cloud Certification Exam Guide: This is a comprehensive guide to the exam and covers all the topics you need to know. It provides a detailed overview of the exam format, the content areas that will be covered, and the key skills you need to master.

Google Cloud Training: Google offers a range of training courses, both online and in person, to help you prepare for the exam. They cover topics including data processing, storage, analysis, and machine learning. Instructor-led options for the Professional Data Engineer exam include:

- Architecting with Google Cloud Platform: Data Engineering – This is a 3-day instructor-led course that covers the key data engineering services and tools available on GCP. It includes hands-on labs and exercises to help you gain practical experience with GCP services.

- Data Engineering on Google Cloud Platform – This is a 4-week instructor-led online course that covers the fundamentals of data engineering on GCP. It includes video lectures, hands-on labs, and quizzes to help you prepare for the exam.

- Google Cloud Certified – Professional Data Engineer Exam Readiness – This is a 1-day instructor-led course that provides an overview of the exam format and content, as well as tips and strategies for preparing for the exam.

- Data and Machine Learning Bootcamp – This is a 4-day instructor-led boot camp that covers the fundamentals of data engineering and machine learning on GCP. It includes hands-on labs and exercises to help you gain practical experience with GCP services.

Google Cloud Certified – Professional Data Engineer Practice Exam: Google's official practice exam gives you sample questions in the style of the real exam, so you can get familiar with the format and check your readiness. It is available free of charge.

GCP Documentation: The GCP documentation provides a wealth of information on GCP services, features, and best practices. It’s a great resource to learn about GCP data services and prepare for the exam.

Practice Projects: Hands-on practice is one of the best ways to prepare for the exam. You can find many practice projects on GitHub or other online platforms that simulate real-world scenarios and help you gain practical experience with GCP services.

Study Groups and Online Community: Joining a study group is a great way to learn from peers and get insights into exam preparation strategies. You can join a study group online or in person and collaborate with other professionals preparing for the exam.

Practice Tests: Practice tests are one of the most efficient ways to gauge how well prepared you are. Google Cloud Certified Professional Data Engineer practice exams help you find the weak spots in your preparation, so you can close those gaps and make fewer mistakes on exam day.

Expert’s Corner

The Google Professional Data Engineer (GCP) exam is challenging and requires a lot of preparation and dedication. However, with the help of a comprehensive cheat sheet, you can streamline your study process and increase your chances of passing the exam on your first attempt. By using a well-organized and up-to-date cheat sheet, you can optimize your study time and stay confident during the exam.

Always keep in mind that the ultimate goal of the GCP certification is to demonstrate your expertise in designing and implementing data-driven solutions on the Google Cloud Platform, so focus on building a deep understanding of the underlying principles and techniques.

With the right approach and the right resources, passing the GCP exam can be a rewarding and fulfilling achievement that opens up exciting career opportunities in the data engineering field. Good luck on your journey to becoming a Google Professional Data Engineer!

Enhance your Google Cloud skills and become a certified Google Professional Data Engineer now!