The DP-203 exam validates your skills in designing and implementing data storage, developing data processing solutions, and securing, monitoring, and optimizing data solutions. It consists of 40-60 multiple-choice and scenario-based questions, and you will have 120 minutes to complete it. Microsoft Azure is a cloud computing platform that provides a range of services for storing and managing data, and the DP-203 exam is designed for professionals who want to demonstrate their skills in implementing and maintaining data solutions on Azure.

To pass the DP-203 exam, you need to have a strong understanding of Azure data services and how they work. You should also have experience with data processing and storage technologies such as Azure Data Factory, Azure Databricks, Azure Stream Analytics, Azure Cosmos DB, and Azure SQL Database.

This blog covers the topics on the DP-203 exam, including data storage and management, data processing, and monitoring and optimization. It will give you a comprehensive understanding of the exam objectives and help you take the DP-203 exam with confidence. So, if you are planning to take the DP-203 exam, keep reading to learn how to prepare effectively and increase your chances of success.

Glossary of Data Engineering on Microsoft Azure Terminology

- Azure Data Factory: A cloud-based data integration service that allows users to create, schedule, and manage data pipelines.

- Azure Databricks: A fast, easy, and collaborative Apache Spark-based analytics platform designed for big data processing and machine learning.

- Azure HDInsight: A fully-managed cloud service that makes it easy to process big data using popular open-source frameworks such as Hadoop, Spark, and Hive.

- Azure Stream Analytics: A real-time data stream processing service that allows users to analyze and react to data from various sources, such as IoT devices, social media feeds, and logs.

- Azure Cosmos DB: A globally distributed, multi-model database service that enables users to store and query massive volumes of structured and unstructured data.

- Azure SQL Database: A fully-managed relational database service that provides a scalable and secure SQL database in the cloud.

- Azure Synapse Analytics: An integrated analytics service that brings together big data and data warehousing to enable users to ingest, prepare, manage, and serve data for immediate business intelligence and machine learning needs.

- Azure Blob Storage: A massively scalable object storage service for unstructured data, such as text and binary data.

- Azure Data Lake Storage: A scalable and secure data lake that allows users to store and analyze petabytes of data with low latency.

- Azure SQL Data Warehouse: The former name of what is now the dedicated SQL pool in Azure Synapse Analytics, a fully managed and scalable cloud data warehouse for storing and analyzing large volumes of data.

- Azure Machine Learning: A cloud-based machine learning service that provides tools and services to build, deploy, and manage machine learning models.

- Azure Cognitive Services: A suite of pre-built APIs and SDKs that enables users to add intelligent features such as natural language processing, computer vision, and speech recognition to their applications.

- Azure Data Catalog: A cloud-based metadata management service that enables users to discover, understand, and consume data sources.

- Azure Data Explorer: A fast and highly scalable data exploration service that enables users to analyze large volumes of data in real-time.

- Azure Data Share: A secure and easy-to-use service that enables users to share data with external organizations.

- Azure Data Box: A secure and efficient way to transfer large amounts of data to and from Azure when internet connectivity is limited or too slow.

- Azure Event Grid: A fully-managed event routing service that allows users to react to events happening across Azure services or custom applications.

- Azure Service Bus: A cloud-based messaging service that enables reliable and secure communication between distributed applications and services.

- Delta Lake (on Azure Databricks): An open-source storage layer that adds ACID transactions and schema enforcement to data lakes. It underpins the lakehouse architecture, which combines features of data warehouses and data lakes for efficient, scalable big data processing and analytics.

- Azure Data Factory Data Flow: A visual, no-code data transformation service that allows users to build scalable and efficient data transformations on big data in the cloud.

Exam preparation resources for Data Engineering on Microsoft Azure Exam

If you’re preparing for the Data Engineering on Microsoft Azure Exam, there are a few resources you can use to help you study. Here are some official exam preparation resources with website links that you can use to prepare for the exam:

- Exam page: The Microsoft Azure Data Engineer Associate certification page provides information about the exam, including its objectives, skills measured, and prerequisites. Here is the link: https://docs.microsoft.com/en-us/learn/certifications/azure-data-engineer

- Learning paths: Microsoft Learn provides various learning paths to help you prepare for the exam. These learning paths contain a mix of text-based and video-based content, as well as hands-on exercises. Here is the link: https://docs.microsoft.com/en-us/learn/certifications/data-engineer-associate

- Microsoft Azure Data Engineering Cookbook: This cookbook contains a collection of recipes that cover various aspects of data engineering in Microsoft Azure. It covers topics such as data ingestion, data transformation, and data storage. Here is the link: https://azure.microsoft.com/en-us/resources/azure-data-engineering-cookbook/

- Azure Data Engineering Samples: This repository contains sample code and scripts that demonstrate how to use various Azure data engineering services. Here is the link: https://github.com/Azure-Samples/data-engineering-samples

- Azure Data Factory documentation: Azure Data Factory is a key component of the Microsoft Azure data engineering stack. The documentation for Azure Data Factory provides detailed information about how to use the service. Here is the link: https://docs.microsoft.com/en-us/azure/data-factory/

- Azure Databricks documentation: Azure Databricks is another key component of the Microsoft Azure data engineering stack. The documentation for Azure Databricks provides detailed information about how to use the service. Here is the link: https://docs.databricks.com/

By using these resources, you can prepare effectively for the Data Engineering on Microsoft Azure Exam and increase your chances of passing.

Expert tips to pass the Data Engineering on Microsoft Azure (DP-203) exam

Here are some expert tips that can help you pass the Data Engineering on Microsoft Azure (DP-203) exam:

- Understand the exam format: The DP-203 exam consists of 40-60 questions in several formats, including multiple-choice and scenario-based questions, and you will have 120 minutes to complete it. Understanding the format helps you manage your time effectively.

- Review the exam objectives: Make sure to review the exam objectives listed on the official Microsoft DP-203 exam page. This will give you a clear idea of what topics you need to study and what skills you need to master.

- Study the Azure data services: Azure has a variety of data services, and it is important to understand each service’s purpose, features, and use cases. You should study Azure Data Factory, Azure Databricks, Azure Stream Analytics, and Azure Synapse Analytics, among others.

- Practice hands-on exercises: The best way to learn and understand Azure data services is to practice hands-on exercises. Microsoft provides several online resources, including free courses, tutorials, and documentation, that you can use to practice and improve your skills.

- Review sample questions: Microsoft provides sample questions on the DP-203 exam page. Reviewing these questions can help you understand the exam format and the types of questions that may be asked.

- Join study groups: Joining a study group can be helpful because you can share your knowledge and learn from others. You can join online forums, discussion boards, and social media groups to find study partners.

- Take practice exams: Taking practice exams can help you identify your strengths and weaknesses and help you prepare for the actual exam. Microsoft provides practice exams for a fee, or you can find free practice exams online.

- Manage your time: Time management is critical in any exam, and the DP-203 exam is no exception. Make sure to manage your time effectively by allocating enough time to answer each question, and if you get stuck on a question, move on to the next one and come back to it later.

By following these expert tips, you can increase your chances of passing the Data Engineering on Microsoft Azure (DP-203) exam. Good luck!

Microsoft Azure DP-203 Exam Study Guide

Before moving on to the study guide, it is worth understanding the value of an Azure certification:

- Every year, around 365,000 new companies sign up for Azure, according to Microsoft.

- Azure cloud services are used by more than 95 percent of Fortune 500 enterprises. As a result, the number of job openings for Azure Data Engineers is continually increasing.

- According to a Global Knowledge study, Azure will have two of the top five highest-paying certifications in the coming years.

- Microsoft-certified professionals have an advantage when looking for better opportunities in the cloud.

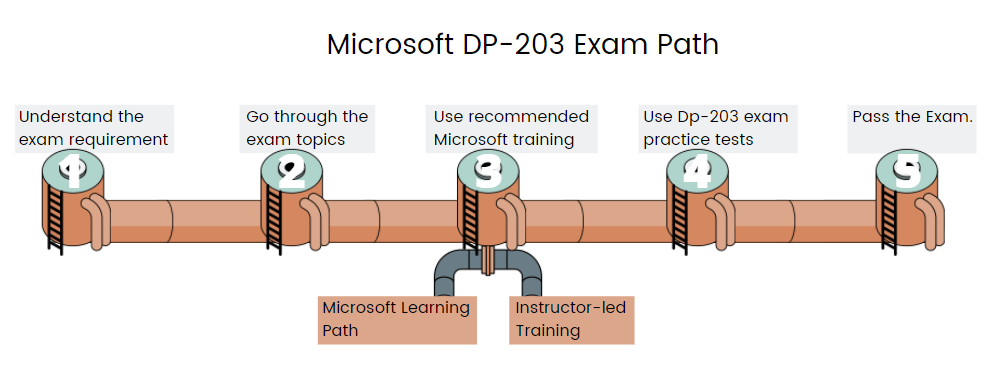

Turning to the study guide itself, the first step in any preparation is to get familiar with the exam details. So, let's begin with the first step.

Step 1: Gathering details for DP-203 Exam

When collecting details, organize them into sections such as exam details, exam format, and knowledge requirements.

Because the DP-203 exam is aimed at data engineers, the best way to get familiar with it is to understand the role of an Azure data engineer.

- Firstly, Azure data engineers use a variety of tools and techniques to help stakeholders understand data, and they design and maintain secure, compliant data processing pipelines.

- Secondly, they build and store cleaned and enriched datasets for analysis by using a variety of Azure data services and languages.

- Thirdly, given a set of business requirements and constraints, they help ensure that data pipelines and data stores are high-performing, efficient, organized, and reliable.

- Next, they respond quickly to unforeseen issues and minimize data loss.

- Lastly, they design, implement, monitor, and optimize data platforms to meet the needs of data pipelines.

Moving on to the exam knowledge requirement.

Knowledge requirement for DP-203 Exam:

The Microsoft Azure DP-203 exam is designed for candidates who can integrate, transform, and consolidate data from various structured and unstructured data systems into structures suitable for building analytics solutions. The exam requires:

- Firstly, strong knowledge of data processing languages such as SQL, Python, or Scala

- Secondly, understanding of parallel processing and data architecture patterns.

Exam Format:

The Microsoft Azure DP-203 exam consists of 40-60 questions in a variety of formats, including scenario-based single-answer questions, multiple-choice questions, arrange-in-sequence questions, and drag-and-drop questions. A minimum score of 700 is required to pass. The exam costs $165 USD, and it is available in English, Chinese (Simplified), Japanese, Korean, German, French, Spanish, Portuguese (Brazil), Arabic (Saudi Arabia), Russian, Chinese (Traditional), Italian, and Indonesian (Indonesia).

Next, let's move on to the exam objectives.

Step 2: Creating a study map using the Exam topic

Microsoft publishes an up-to-date list of the skills measured for every certification exam. For the DP-203 exam, the topics are divided into sections and subsections; use them to build a study plan and get a structured start to your preparation. The topics are:

Topic 1: Design and Implement Data Storage (15-20%)

Implement a partition strategy

- Implement a partition strategy for files (Microsoft Documentation: Copy new files based on time partitioned file name using the Copy Data tool)

- Implement a partition strategy for analytical workloads (Microsoft Documentation: Best practices when using Delta Lake, Partitions in tabular models)

- Implement a partition strategy for streaming workloads

- Implement a partition strategy for Azure Synapse Analytics (Microsoft Documentation: Partitioning tables)

- Identify when partitioning is needed in Azure Data Lake Storage Gen2
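To make the file partitioning objective concrete, here is a minimal Python sketch that builds Hive-style, time-partitioned folder paths (`year=/month=/day=/hour=`) for a data lake. The helper names, the `raw/sales` root, and the folder convention are illustrative assumptions, not an Azure API. The benefit of this layout is that a job reading a date range only needs to open the matching folders.

```python
from datetime import date, datetime, timedelta


def partition_path(root: str, ts: datetime, granularity: str = "hour") -> str:
    """Build a Hive-style, time-partitioned folder path (hypothetical helper).

    Readers that filter on year/month/day/hour can skip whole folders
    instead of scanning every file under the root.
    """
    if granularity not in ("day", "hour"):
        raise ValueError("granularity must be 'day' or 'hour'")
    parts = [f"year={ts.year:04d}", f"month={ts.month:02d}", f"day={ts.day:02d}"]
    if granularity == "hour":
        parts.append(f"hour={ts.hour:02d}")
    return "/".join([root.rstrip("/")] + parts)


def daily_partitions(root: str, start: date, end: date) -> list[str]:
    """List the day-level folders a reader needs for start..end (inclusive)."""
    days = (end - start).days
    return [
        partition_path(root, datetime(d.year, d.month, d.day), granularity="day")
        for d in (start + timedelta(days=n) for n in range(days + 1))
    ]


if __name__ == "__main__":
    print(partition_path("raw/sales", datetime(2024, 3, 7, 14, 5)))
    # raw/sales/year=2024/month=03/day=07/hour=14
    for folder in daily_partitions("raw/sales", date(2024, 2, 28), date(2024, 3, 1)):
        print(folder)
```

Day versus hour granularity is a trade-off: finer partitions allow more pruning but produce more, smaller files.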

Design and implement the data exploration layer

- Create and execute queries by using a compute solution that leverages SQL serverless and Spark cluster (Microsoft Documentation: Azure Synapse SQL architecture, Azure Synapse Dedicated SQL Pool Connector for Apache Spark)

- Recommend and implement Azure Synapse Analytics database templates (Microsoft Documentation: Azure Synapse database templates, Lake database templates)

- Push new or updated data lineage to Microsoft Purview (Microsoft Documentation: Push Data Factory lineage data to Microsoft Purview)

- Browse and search metadata in Microsoft Purview Data Catalog

Topic 2: Develop Data Processing (40-45%)

Ingest and transform data

- Design and implement incremental loads

- Transform data by using Apache Spark (Microsoft Documentation: Transform data in the cloud by using a Spark activity)

- Transform data by using Transact-SQL (T-SQL) in Azure Synapse Analytics (Microsoft Documentation: SQL Transformation)

- Ingest and transform data by using Azure Synapse Pipelines or Azure Data Factory (Microsoft Documentation: Pipelines and activities in Azure Data Factory and Azure Synapse Analytics)

- Transform data by using Azure Stream Analytics (Microsoft Documentation: Transform data by using Azure Stream Analytics)

- Cleanse data (Microsoft Documentation: Overview of Data Cleansing, Clean Missing Data module)

- Handle duplicate data (Microsoft Documentation: Handle duplicate data in Azure Data Explorer)

- Avoid duplicate data by using Azure Stream Analytics Exactly Once Delivery

- Handle missing data

- Handle late-arriving data (Microsoft Documentation: Understand time handling in Azure Stream Analytics)

- Split data (Microsoft Documentation: Split Data Overview, Split Data module)

- Shred JSON

- Encode and decode data (Microsoft Documentation: Encode and Decode SQL Server Identifiers)

- Configure error handling for a transformation (Microsoft Documentation: Handle SQL truncation error rows in Data Factory, Troubleshoot mapping data flows in Azure Data Factory)

- Normalize and denormalize data (Microsoft Documentation: Overview of Normalize Data module, What is Normalize Data?)

- Perform exploratory data analysis (Microsoft Documentation: Query data in Azure Data Explorer Web UI)
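Shredding JSON means turning nested documents into flat, tabular rows. In T-SQL you would typically use `OPENJSON` with `CROSS APPLY`, and in Spark the `explode` function. The plain-Python sketch below only illustrates the idea: it flattens nested objects into dotted column names and emits one row per element of an array, repeating the parent fields on each row. The helper names and the dotted naming convention are assumptions.

```python
import json
from typing import Any


def flatten(obj: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten nested JSON objects into dotted column names."""
    flat: dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def shred(document: str, array_field: str) -> list[dict[str, Any]]:
    """Emit one flat row per element of array_field, repeating parent fields."""
    record = json.loads(document)
    items = record.pop(array_field, [])
    parent = flatten(record)
    rows = []
    for item in items:
        # Object elements are flattened; scalar elements become a single column.
        if isinstance(item, dict):
            child = flatten(item, prefix=f"{array_field}.")
        else:
            child = {array_field: item}
        rows.append({**parent, **child})
    return rows


if __name__ == "__main__":
    order = (
        '{"orderId": 42, "customer": {"id": "C1", "country": "NL"},'
        ' "lines": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}]}'
    )
    for row in shred(order, "lines"):
        print(row)
```

Here one order with two line items becomes two rows, each carrying `orderId`, `customer.id`, and `customer.country`.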

Develop a batch processing solution

- Develop batch processing solutions by using Azure Data Lake Storage, Azure Databricks, Azure Synapse Analytics, and Azure Data Factory (Microsoft Documentation: Choose a batch processing technology in Azure, Batch processing)

- Use PolyBase to load data to a SQL pool (Microsoft Documentation: Design a PolyBase data loading strategy)

- Implement Azure Synapse Link and query the replicated data (Microsoft Documentation: Azure Synapse Link for Azure SQL Database)

- Create data pipelines (Microsoft Documentation: Creating a pipeline, Build a data pipeline)

- Scale resources (Microsoft Documentation: Create an automatic formula for scaling compute nodes)

- Configure the batch size (Microsoft Documentation: Selecting VM size and image for compute nodes)

- Create tests for data pipelines (Microsoft Documentation: Run quality tests in your build pipeline by using Azure Pipelines)

- Integrate Jupyter or Python notebooks into a data pipeline (Microsoft Documentation: Use Jupyter Notebooks in Azure Data Studio, Run Jupyter notebooks in your workspace)

- Upsert data

- Reverse data to a previous state

- Configure exception handling (Microsoft Documentation: Azure Batch error handling and detection)

- Configure batch retention (Microsoft Documentation: Azure Batch best practices)

- Read from and write to a delta lake (Microsoft Documentation: What is Delta Lake?)
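Upserting (update a row if its key exists, insert it otherwise) is what `MERGE` does in T-SQL and `MERGE INTO` does in Delta Lake. The sketch below models that logic in plain Python, using an in-memory dictionary as the target table and a last-modified timestamp to skip stale changes. The `Row` type and the "newer wins" rule are assumptions for illustration, not how any particular service stores data.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Row:
    key: str
    value: str
    modified: datetime  # last-modified timestamp from the source system


def upsert(target: dict[str, Row], incoming: list[Row]) -> tuple[int, int]:
    """Merge incoming rows into target, keyed by Row.key.

    Returns (inserted, updated) counts.
    """
    inserted = updated = 0
    for row in incoming:
        current = target.get(row.key)
        if current is None:
            target[row.key] = row  # no match: insert
            inserted += 1
        elif row.modified > current.modified:
            target[row.key] = row  # match and newer: update
            updated += 1
        # match but not newer: stale or duplicate change, skip it
    return inserted, updated


if __name__ == "__main__":
    table = {"c1": Row("c1", "old", datetime(2024, 1, 1))}
    batch = [
        Row("c1", "new", datetime(2024, 1, 2)),
        Row("c2", "x", datetime(2024, 1, 2)),
        Row("c1", "stale", datetime(2023, 12, 31)),
    ]
    print(upsert(table, batch))  # (1, 1)
    print(table["c1"].value)     # new
```

Comparing timestamps makes the upsert safe to re-run on the same batch, because replayed rows are no longer newer than what is already stored.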

Develop a stream processing solution

- Create a stream processing solution by using Stream Analytics and Azure Event Hubs (Microsoft Documentation: Stream processing with Azure Stream Analytics)

- Process data by using Spark Structured Streaming (Microsoft Documentation: What is Structured Streaming?, Apache Spark Structured Streaming)

- Create windowed aggregates (Microsoft Documentation: Stream Analytics windowing functions, Windowing functions)

- Handle schema drift (Microsoft Documentation: Schema drift in mapping data flow)

- Process time-series data (Microsoft Documentation: Time handling in Azure Stream Analytics, Time series solutions)

- Process data across partitions (Microsoft Documentation: Data partitioning guidance, Data partitioning strategies)

- Process within one partition

- Configure checkpoints and watermarking during processing (Microsoft Documentation: Checkpoint and replay concepts, Example of watermarks)

- Scale resources (Microsoft Documentation: Streaming Units, Scale an Azure Stream Analytics job)

- Create tests for data pipelines

- Optimize pipelines for analytical or transactional purposes (Microsoft Documentation: Query parallelization in Azure Stream Analytics, Optimize processing with Azure Stream Analytics using repartitioning)

- Handle interruptions (Microsoft Documentation: Stream Analytics job reliability during service updates)

- Configure exception handling (Microsoft Documentation: Exceptions and Exception Handling)

- Upsert data (Microsoft Documentation: Azure Stream Analytics output to Azure Cosmos DB)

- Replay archived stream data (Microsoft Documentation: Checkpoint and replay concepts)
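Windowed aggregates and watermarks are easier to reason about with a toy model. The sketch below assigns each event to a tumbling window (fixed-size and non-overlapping) and keeps a watermark that trails the highest event time seen so far by a fixed lateness allowance; events that arrive behind the watermark are set aside as too late. It is plain Python for illustration only: Azure Stream Analytics (`TumblingWindow`) and Spark Structured Streaming (`withWatermark`) implement these ideas with their own configurable semantics, and the function name and parameters here are assumptions.

```python
from collections import defaultdict
from typing import Iterable


def tumbling_counts(
    events: Iterable[tuple[int, str]], window_s: int, max_lateness_s: int
) -> tuple[dict[tuple[int, str], int], list[tuple[int, str]]]:
    """Count events per (window_start, key), processing them in arrival order.

    events are (event_time_seconds, key) pairs. The watermark is the highest
    event time seen so far minus max_lateness_s; events older than the
    watermark are returned in the dropped list instead of being counted.
    """
    counts: dict[tuple[int, str], int] = defaultdict(int)
    dropped: list[tuple[int, str]] = []
    max_seen = float("-inf")
    for ts, key in events:
        watermark = max_seen - max_lateness_s
        if ts < watermark:
            dropped.append((ts, key))  # too late: its window is considered closed
            continue
        max_seen = max(max_seen, ts)
        window_start = ts - (ts % window_s)  # align to the window boundary
        counts[(window_start, key)] += 1
    return dict(counts), dropped


if __name__ == "__main__":
    arrivals = [(1, "a"), (4, "a"), (12, "b"), (7, "a"), (25, "a"), (3, "b")]
    counts, dropped = tumbling_counts(arrivals, window_s=10, max_lateness_s=10)
    print(counts)   # {(0, 'a'): 3, (10, 'b'): 1, (20, 'a'): 1}
    print(dropped)  # [(3, 'b')]
```

The event at time 7 is out of order but still within the 10-second allowance, so it is counted; the event at time 3 arrives after the watermark has moved to 15 and is dropped.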

Manage batches and pipelines

- Trigger batches (Microsoft Documentation: Trigger a Batch job using Azure Functions)

- Handle failed batch loads (Microsoft Documentation: Check for pool and node errors)

- Validate batch loads (Microsoft Documentation: Error checking for job and task)

- Manage data pipelines in Data Factory or Azure Synapse Pipelines (Microsoft Documentation: Pipelines and activities in Azure Data Factory and Azure Synapse Analytics, What is Azure Data Factory?)

- Schedule data pipelines in Data Factory or Synapse Pipelines (Microsoft Documentation: Pipelines and Activities in Azure Data Factory)

- Implement version control for pipeline artifacts (Microsoft Documentation: Source control in Azure Data Factory)

- Manage Spark jobs in a pipeline (Microsoft Documentation: Monitor a pipeline)
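One common way to validate a batch load is to reconcile source and target: compare row counts and a checksum over the columns that matter. The sketch below shows a hypothetical helper for this (it is not an Azure Data Factory feature). It uses an order-independent checksum so the target does not have to return rows in the same order as the source; in a real pipeline you might run a check like this in a notebook step after the copy.

```python
import hashlib
from typing import Iterable, Mapping


def fingerprint(rows: Iterable[Mapping], columns: list[str]) -> tuple[int, int]:
    """Return (row_count, order-independent checksum) over the given columns."""
    count, checksum = 0, 0
    for row in rows:
        payload = "|".join(str(row[c]) for c in columns).encode("utf-8")
        digest = hashlib.sha256(payload).digest()
        # Summing per-row hashes (mod 2**64) ignores row order but, unlike XOR,
        # does not let two identical rows cancel each other out.
        checksum = (checksum + int.from_bytes(digest[:8], "big")) % 2**64
        count += 1
    return count, checksum


def validate_load(source_rows, target_rows, columns: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the load looks consistent."""
    src_count, src_sum = fingerprint(source_rows, columns)
    tgt_count, tgt_sum = fingerprint(target_rows, columns)
    problems = []
    if src_count != tgt_count:
        problems.append(f"row count mismatch: source={src_count}, target={tgt_count}")
    if src_sum != tgt_sum:
        problems.append("checksum mismatch on columns: " + ", ".join(columns))
    return problems


if __name__ == "__main__":
    source = [{"id": 1, "amt": 10}, {"id": 2, "amt": 20}]
    print(validate_load(source, list(reversed(source)), ["id", "amt"]))  # []
    print(validate_load(source, source[:1], ["id", "amt"]))
```

A non-empty result is the signal to treat the load as failed, for example by failing the pipeline run so it can be retried or investigated.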

Topic 3: Secure, Monitor and Optimize Data Storage and Data Processing (30-35%)

Implement data security

- Implement data masking (Microsoft Documentation: Dynamic Data Masking)

- Encrypt data at rest and in motion (Microsoft Documentation: Azure Data Encryption at rest, Azure encryption overview)

- Implement row-level and column-level security (Microsoft Documentation: Row-Level Security, Column-level security)

- Implement Azure role-based access control (RBAC) (Microsoft Documentation: What is Azure role-based access control (Azure RBAC)?)

- Implement POSIX-like access control lists (ACLs) for Data Lake Storage Gen2 (Microsoft Documentation: Access control lists (ACLs) in Azure Data Lake Storage Gen2)

- Implement a data retention policy (Microsoft Documentation: Learn about retention policies and retention labels)

- Implement secure endpoints (private and public) (Microsoft Documentation: What is a private endpoint?)

- Implement resource tokens in Azure Databricks (Microsoft Documentation: Authentication for Azure Databricks automation)

- Load a DataFrame with sensitive information

- Write encrypted data to tables or Parquet files (Microsoft Documentation: Parquet format in Azure Data Factory and Azure Synapse Analytics)

- Manage sensitive information
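Dynamic data masking in Azure SQL Database and Azure Synapse Analytics is declared in T-SQL, for example `ALTER TABLE dbo.Customers ALTER COLUMN Email ADD MASKED WITH (FUNCTION = 'email()')` on a hypothetical `dbo.Customers` table, and users granted the `UNMASK` permission still see real values. The Python sketch below only imitates the output of the built-in `email()` and `partial(prefix, padding, suffix)` masks so you can see what a non-privileged reader gets back. The helper names, and what happens with strings too short to mask, are this sketch's own assumptions.

```python
from typing import Callable


def mask_email(value: str) -> str:
    """Imitate the email() mask: keep the first letter, hide the rest."""
    return f"{value[:1]}XXX@XXXX.com"


def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Imitate partial(prefix, padding, suffix): keep the first `prefix` and
    last `suffix` characters with `padding` in between."""
    if prefix + suffix >= len(value):
        # Assumption for this sketch: too short to reveal anything safely.
        return padding
    return value[:prefix] + padding + value[len(value) - suffix:]


def mask_row(row: dict, rules: dict[str, Callable[[str], str]], can_unmask: bool) -> dict:
    """Return the row as-is for privileged readers, masked for everyone else."""
    if can_unmask:
        return dict(row)
    return {col: rules[col](val) if col in rules else val for col, val in row.items()}


if __name__ == "__main__":
    rules = {
        "email": mask_email,
        "card": lambda v: mask_partial(v, 0, "XXXX-XXXX-XXXX-", 4),
    }
    row = {"name": "Alice", "email": "alice@contoso.com", "card": "4111111111111111"}
    print(mask_row(row, rules, can_unmask=False))
    # {'name': 'Alice', 'email': 'aXXX@XXXX.com', 'card': 'XXXX-XXXX-XXXX-1111'}
```

Note that masking changes only what a query returns; the stored data is unchanged, which is why encryption and access control are separate objectives in this list.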

Monitor data storage and data processing

- Implement logging used by Azure Monitor (Microsoft Documentation: Overview of Azure Monitor Logs, Collecting custom logs with Log Analytics agent in Azure Monitor)

- Configure monitoring services (Microsoft Documentation: Monitoring Azure resources with Azure Monitor, Enable VM insights)

- Measure performance of data movement (Microsoft Documentation: Overview of Copy activity performance and scalability)

- Monitor and update statistics about data across a system (Microsoft Documentation: Statistics in Synapse SQL, UPDATE STATISTICS)

- Monitor data pipeline performance (Microsoft Documentation: Monitor and Alert Data Factory by using Azure Monitor)

- Measure query performance (Microsoft Documentation: Query Performance Insight for Azure SQL Database)

- Schedule and monitor pipeline tests (Microsoft Documentation: Monitor and manage Azure Data Factory pipelines by using the Azure portal and PowerShell)

- Interpret Azure Monitor metrics and logs (Microsoft Documentation: Overview of Azure Monitor Metrics, Azure platform logs)

- Implement a pipeline alert strategy
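A pipeline alert strategy usually combines at least two signals: failed runs and runs that take longer than expected. In Azure you would express these as Azure Monitor alert rules on Data Factory or Synapse metrics; the sketch below only illustrates the decision logic over an assumed list of recent runs. The `PipelineRun` record, the thresholds, and the message format are hypothetical and would need tuning for a real workload.

```python
from dataclasses import dataclass


@dataclass
class PipelineRun:
    pipeline: str
    status: str          # e.g. "Succeeded" or "Failed"
    duration_min: float


def evaluate_alerts(runs: list[PipelineRun], max_failures: int = 1,
                    sla_min: float = 60.0) -> list[str]:
    """Return alert messages for a window of recent runs (hypothetical rules)."""
    alerts: list[str] = []
    failures: dict[str, int] = {}
    for run in runs:
        if run.status == "Failed":
            failures[run.pipeline] = failures.get(run.pipeline, 0) + 1
        if run.duration_min > sla_min:
            alerts.append(
                f"{run.pipeline}: run took {run.duration_min:.0f} min (SLA {sla_min:.0f} min)"
            )
    for pipeline, count in sorted(failures.items()):
        if count >= max_failures:
            alerts.append(f"{pipeline}: {count} failed run(s) in window")
    return alerts


if __name__ == "__main__":
    recent = [
        PipelineRun("load_sales", "Succeeded", 30.0),
        PipelineRun("load_sales", "Failed", 5.0),
        PipelineRun("load_stock", "Succeeded", 75.0),
    ]
    for message in evaluate_alerts(recent):
        print(message)
```

Separating "it failed" from "it was too slow" matters because a slow but successful run can still break downstream schedules.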

Optimize and troubleshoot data storage and data processing

- Compact small files (Microsoft Documentation: Auto Optimize)

- Handle skew in data (Microsoft Documentation: Resolve data-skew problems by using Azure Data Lake Tools for Visual Studio)

- Handle data spill

- Optimize resource management

- Tune queries by using indexers (Microsoft Documentation: Automatic tuning in Azure SQL Database and Azure SQL Managed Instance)

- Tune queries by using cache (Microsoft Documentation: Performance tuning with result set caching)

- Troubleshoot a failed Spark job (Microsoft Documentation: Troubleshoot Apache Spark by using Azure HDInsight, Troubleshoot a slow or failing job on an HDInsight cluster)

- Troubleshoot a failed pipeline run, including activities executed in external services (Microsoft Documentation: Troubleshoot Azure Data Factory and Synapse pipelines)
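Small-file compaction boils down to grouping many small files into fewer, larger ones. On Azure Databricks, Delta Lake's `OPTIMIZE` command and Auto Optimize handle this for you; the sketch below only shows the planning idea using first-fit decreasing bin packing. The `plan_compaction` helper, the size thresholds, and the file list are illustrative assumptions.

```python
def plan_compaction(files: list[tuple[str, int]], target_bytes: int,
                    small_threshold: int) -> list[list[str]]:
    """Group small files into batches whose total size stays within target_bytes.

    files are (path, size_bytes) pairs; files at or above small_threshold are
    left alone. Each returned batch would be rewritten as one larger file.
    """
    small = sorted((f for f in files if f[1] < small_threshold),
                   key=lambda f: f[1], reverse=True)
    bins: list[list] = []  # each bin is [total_size, [paths]]
    for path, size in small:
        for b in bins:
            if b[0] + size <= target_bytes:  # first bin with room wins
                b[0] += size
                b[1].append(path)
                break
        else:
            bins.append([size, [path]])  # no bin had room: open a new one
    # A batch with a single file gains nothing from being rewritten.
    return [paths for _, paths in bins if len(paths) > 1]


if __name__ == "__main__":
    listing = [("a", 40), ("b", 30), ("c", 30), ("d", 20), ("e", 200), ("f", 10)]
    print(plan_compaction(listing, target_bytes=100, small_threshold=100))
    # [['a', 'b', 'c'], ['d', 'f']]
```

Sorting largest-first tends to fill each output file closer to the target size than packing files in arrival order.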

Step 3: Taking preparation to the next level: Microsoft Learning Paths

This step is as important as studying the exam topics, because the learning paths contain modules that map directly to those topics and help you get a clearer understanding of the concepts. For the Microsoft DP-203 exam, the learning paths are:

Path 1: Azure for the Data Engineer

Discover how the world of data has changed, and how cloud technologies are opening up new possibilities for businesses. You’ll learn about the data platform technologies that are available and how data engineers use them to benefit an organization.

Path 2: Storing data in Azure

Learn the fundamentals of Azure storage management, including how to create a storage account and how to choose a model for the data you want to store in the cloud.

Path 3: Data integration at scale with Azure Data Factory or Azure Synapse Pipelines

In this learning path, you will learn how to use Azure Data Factory to build and manage data pipelines in the cloud.

Prerequisites:

Before starting, you should be able to:

- Sign in to the Azure portal.

- Describe the Azure storage options.

- Describe the Azure compute options.

Path 4: Realizing Integrated Analytical Solutions with Azure Synapse Analytics

Learn how the components of Azure Synapse Analytics can be used to build modern data warehouses and advanced analytical solutions, allowing you to run various kinds of analytics.

Path 5: Working with Data Warehouses using Azure Synapse Analytics

Explore the tools and approaches available in Azure Synapse Analytics for working with Modern Data Warehouses in a productive and safe manner.

Prerequisites:

- Before beginning this study path, you should have completed Data Fundamentals.

Path 6: Performing data engineering with Azure Synapse Apache Spark Pools

Learn how to perform data engineering with Azure Synapse Apache Spark pools, which use in-memory cluster computing to speed up big data analytic applications.

Prerequisites:

- Before beginning this study path, you should have completed Data Fundamentals.


Path 7: Working with Hybrid Transactional and Analytical Processing Solutions using Azure Synapse Analytics

Using Azure Synapse Analytics’ Azure Synapse Link functionality, perform operational analytics against Azure Cosmos DB.

Prerequisites:

- Before beginning this study path, you should have completed Data Fundamentals.

Path 8: Data engineering with Azure Databricks

Learn how to perform massive data engineering tasks in the cloud with Apache Spark and powerful clusters running on the Azure Databricks platform.

Path 9: Large-Scale Data Processing with Azure Data Lake Storage Gen2

This learning path will show you how Azure Data Lake Storage can speed up the processing of Big Data analytical solutions and how simple it is to set up. You’ll also learn how it fits into typical designs and how to upload data to the data store using various techniques. Lastly, you’ll look at the many security mechanisms that will keep your data safe.

Path 10: Implementing a Data Streaming Solution with Azure Stream Analytics

Learn how to apply the concepts of event processing and streaming data to Azure Stream Analytics. You’ll then learn how to set up a Stream Analytics job to stream data, as well as how to manage and monitor a running job.

Step 4: Getting hands-on experience using Microsoft Training

Microsoft offers instructor-led training for the Azure DP-203 exam. This course can strengthen your preparation and deepen your understanding of the concepts. The course is:

Data Engineering on Microsoft Azure

In this course, you’ll learn about data engineering and how to use Azure data platform technologies to build batch and real-time analytical solutions.

- Firstly, you will start by learning about the fundamental computation and storage technologies that are utilized to create an analytical solution.

- Secondly, you will learn how to:

  - Use a data lake to interactively explore data stored in files.

  - Load data by using the Apache Spark capabilities in Azure Synapse Analytics or Azure Databricks.

  - Ingest data by using Azure Data Factory or Azure Synapse pipelines.

- Thirdly, you will learn how to transform data by using the same tools you used to ingest it.

- Then, you will learn why security matters for protecting data both in transit and at rest.

- Lastly, you will learn to build real-time analytical solutions.

Audience Profile:

This course is intended for:

- Firstly, data engineers, data architects, and business intelligence professionals who want to learn about data engineering and designing analytical solutions utilizing Microsoft Azure data platform technologies.

- Secondly, data analysts and data scientists who work with Microsoft Azure-based analytical tools.

Step 5: Making connections using online study groups

During exam preparation, consider joining online study groups. They let you stay in touch with experts and other candidates who are already on this path. You can also use these groups to discuss exam-related questions or concerns and to share DP-203 study notes.

Step 6: Assessing using the Practice Tests

Completing DP-203 practice tests shows you your weak and strong areas. They also help you improve your time management, so you can pace yourself during the real exam. A recommended strategy is to finish studying a whole topic first and then attempt the practice questions for it. This makes your revision more effective and deepens your understanding of the material. Once you have covered every topic, work through a full set of DP-203 practice questions to confirm you are ready for the exam.

Tips for the exam:

- Understand the exam requirement and format

- Gain hands-on experience

- Utilize the exam topics for getting a better understanding

- Focus on exam structure and timing. Use practice tests.

- Stay calm and confident on exam day

Final Words

The Microsoft Certified: Azure Data Engineer Associate certification is a strong match for anyone pursuing a data engineering role, and earning it can lead to better opportunities, projects, and employers. Above, we have gone through all the major areas of the DP-203 exam, covering the exam details, topics, training options, and practice tests. So, get familiar with the exam requirements and start preparing for your role as a data engineer.