To earn the Microsoft Certified: Azure Data Scientist Associate certification, candidates need to pass Microsoft Exam DP-100: Designing and Implementing a Data Science Solution on Azure. The exam has drawn a lot of attention among IT professionals. Nowadays, every firm needs qualified employees who can operate its systems with care and manage operations efficiently while cutting down on wasted time. That need is what drives the demand for this exam.

The DP-100 exam measures the candidate’s ability to accomplish technical tasks including:

- Define and prepare the development environment

- Prepare data for modeling

- Perform feature engineering

- Develop models

Exam DP-100 Course outline

Before we begin, it is important to look closely at the course outline of Microsoft Exam DP-100: Designing and Implementing a Data Science Solution on Azure.

Design and prepare a machine learning solution (20–25%)

Design a machine learning solution

- Determine the appropriate compute specifications for a training workload (Microsoft Documentation: compute targets in Azure Machine Learning)

- Describe model deployment requirements (Microsoft Documentation: Deploy machine learning models to Azure)

- Select which development approach to use to build or train a model (Microsoft Documentation: Train models with Azure Machine Learning)

Manage an Azure Machine Learning workspace

- Create an Azure Machine Learning workspace (Microsoft Documentation: Create workspace resources you need to get started with Azure Machine Learning)

- Manage a workspace by using developer tools for workspace interaction (Microsoft Documentation: Manage Azure Machine Learning workspaces in the portal or with the Python SDK (v2))

- Set up Git integration for source control (Microsoft Documentation: Git integration for Azure Machine Learning)

- Create and manage registries

Manage data in an Azure Machine Learning workspace

- Select Azure Storage resources (Microsoft Documentation: Introduction to Azure Storage)

- Register and maintain datastores (Microsoft Documentation: Create datastores)

- Create and manage data assets (Microsoft Documentation: Create data assets)

Manage compute for experiments in Azure Machine Learning

- Create compute targets for experiments and training (Microsoft Documentation: Configure and submit training jobs)

- Select an environment for a machine learning use case (Microsoft Documentation: What are Azure Machine Learning environments?)

- Configure attached compute resources, including Azure Synapse Spark pools and serverless Spark compute (Microsoft Documentation: Apache Spark pool configurations in Azure Synapse Analytics)

- Monitor compute utilization

Explore data, and train models (35–40%)

Explore data by using data assets and data stores

- Access and wrangle data during interactive development (Microsoft Documentation: What is data wrangling?)

- Wrangle interactive data with attached Synapse Spark pools and serverless Spark compute (Microsoft Documentation: Interactive Data Wrangling with Apache Spark in Azure Machine Learning)

Create models by using the Azure Machine Learning designer

- Create a training pipeline (Microsoft Documentation: Create a build pipeline with Azure Pipelines)

- Consume data assets from the designer (Microsoft Documentation: Create data assets)

- Use custom code components in designer (Microsoft Documentation: Add code components to a custom page for your model-driven app)

- Evaluate the model, including responsible AI guidelines (Microsoft Documentation: What is Responsible AI?)

Use automated machine learning to explore optimal models

- Use automated machine learning for tabular data (Microsoft Documentation: What is automated machine learning (AutoML)?)

- Use automated machine learning for computer vision

- Use automated machine learning for natural language processing (Microsoft Documentation: Set up AutoML to train a natural language processing model)

- Select and understand training options, including preprocessing and algorithms

- Evaluate an automated machine learning run, including responsible AI guidelines (Microsoft Documentation: What is Responsible AI?)

Use notebooks for custom model training

- Develop code by using a compute instance (Microsoft Documentation: Create and manage an Azure Machine Learning compute instance)

- Track model training by using MLflow (Microsoft Documentation: Track ML experiments and models with MLflow)

- Evaluate a model (Microsoft Documentation: Evaluate Model component)

- Train a model by using Python SDKv2

- Use the terminal to configure a compute instance (Microsoft Documentation: Access a compute instance terminal in your workspace)

Tune hyperparameters with Azure Machine Learning

- Select a sampling method (Microsoft Documentation: Hyperparameter tuning a model (v2))

- Define the search space

- Define the primary metric (Microsoft Documentation: Set up AutoML training with the Azure ML Python SDK v2)

- Define early termination options (Microsoft Documentation: Hyperparameter tuning a model (v2))
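
To make the four tuning concepts above concrete, here is a minimal sketch in plain Python. It is not the Azure Machine Learning sweep API: the names `SEARCH_SPACE`, `sample_config`, `toy_train`, `median_stop`, and `run_sweep` are hypothetical, the training function is a made-up stand-in, and the stopping rule is a simplified take on a median-style policy. It shows random sampling over a search space, a primary metric to maximize, and an early termination check.

```python
import math
import random
import statistics

# Search space: each hyperparameter maps to a sampler (assumed names and ranges).
SEARCH_SPACE = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, -1),  # log-uniform
    "batch_size": lambda rng: rng.choice([16, 32, 64]),      # discrete choice
}

def sample_config(rng):
    """Random sampling: draw each hyperparameter independently."""
    return {name: draw(rng) for name, draw in SEARCH_SPACE.items()}

def toy_train(config, epochs):
    """Stand-in for real training; yields a fake accuracy after each epoch."""
    # Accuracy peaks when learning_rate is near 0.01 (purely illustrative).
    quality = 1.0 / (1.0 + abs(math.log10(config["learning_rate"]) + 2))
    for epoch in range(1, epochs + 1):
        yield quality * epoch / epochs

def median_stop(current, history_at_step):
    """Stop a trial whose metric is below the median of earlier trials at this step."""
    return len(history_at_step) >= 2 and current < statistics.median(history_at_step)

def run_sweep(n_trials=8, epochs=5, seed=0):
    rng = random.Random(seed)
    history = [[] for _ in range(epochs)]  # metrics recorded at each epoch
    results = []
    for _ in range(n_trials):
        config = sample_config(rng)
        metric, stopped = 0.0, False
        for step, metric in enumerate(toy_train(config, epochs)):
            if median_stop(metric, history[step]):
                stopped = True
                break
            history[step].append(metric)
        results.append({"config": config, "accuracy": metric, "stopped_early": stopped})
    # Primary metric: accuracy; goal: maximize.
    return max(results, key=lambda r: r["accuracy"]), results

best, all_trials = run_sweep()
print(best["config"], round(best["accuracy"], 3))
```

Swapping `sample_config` for an exhaustive loop over discrete values would turn this into a grid-style search; the rest of the loop would stay the same.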

Prepare a model for deployment (20–25%)

Run model training scripts

- Configure job run settings for a script (Microsoft Documentation: Configure and submit training jobs)

- Configure compute for a job run

- Consume data from a data asset in a job (Microsoft Documentation: Create data assets)

- Run a script as a job by using Azure Machine Learning (Microsoft Documentation: Azure Machine Learning in a day, Configure and submit training jobs)

- Use MLflow to log metrics from a job run (Microsoft Documentation: Log metrics, parameters and files with MLflow)

- Use logs to troubleshoot job run errors (Microsoft Documentation: Review logs to diagnose pipeline issues)

- Configure an environment for a job run (Microsoft Documentation: Create and target an environment)

- Define parameters for a job (Microsoft Documentation: Runtime parameters)
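
One common way to define parameters for a script-based job is to have the training script read them from the command line. The sketch below uses only Python's standard `argparse` module. The argument names `--training_data` and `--reg_rate` are hypothetical, and how a job passes values to the script depends on your own job configuration.

```python
import argparse

def parse_args(argv=None):
    """Parse the parameters a job would pass to this script."""
    parser = argparse.ArgumentParser(description="Hypothetical training script")
    parser.add_argument("--training_data", type=str, required=True,
                        help="Path to the input data (assumed name)")
    parser.add_argument("--reg_rate", type=float, default=0.01,
                        help="Regularization rate (assumed name)")
    return parser.parse_args(argv)

def main(argv=None):
    args = parse_args(argv)
    # Real training code would load args.training_data and fit a model here.
    print(f"Training on {args.training_data} with reg_rate={args.reg_rate}")
    return args

# Demo call with explicit arguments; in a real job, call main() with no
# arguments so argparse reads the values from the command line instead.
demo_args = main(["--training_data", "data/train.csv", "--reg_rate", "0.1"])
```

Keeping every tunable value behind a named argument means the same script can be reused across runs without editing the code.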

Implement training pipelines

- Create a pipeline (Microsoft Documentation: Create your first pipeline, What is Azure Pipelines?)

- Pass data between steps in a pipeline (Microsoft Documentation: How to use parameters, expressions and functions in Azure Data Factory)

- Run and schedule a pipeline (Microsoft Documentation: Configure schedules for pipelines)

- Monitor pipeline runs (Microsoft Documentation: Visually monitor Azure Data Factory)

- Create custom components (Microsoft Documentation: Create your first component)

- Use component-based pipelines (Microsoft Documentation: Create and run machine learning pipelines using components with the Azure Machine Learning CLI)

Manage models in Azure Machine Learning

- Describe MLflow model output (Microsoft Documentation: Track ML experiments and models with MLflow)

- Identify an appropriate framework to package a model (Microsoft Documentation: Model management, deployment, and monitoring with Azure Machine Learning)

- Assess a model by using responsible AI guidelines (Microsoft Documentation: What is Responsible AI?)

Deploy and retrain a model (10–15%)

Deploy a model

- Configure settings for online deployment (Microsoft Documentation: Deploy and score a machine learning model by using an online endpoint)

- Configure compute for a batch deployment (Microsoft Documentation: Use batch endpoints for batch scoring)

- Deploy a model to an online endpoint (Microsoft Documentation: Deploy and score a machine learning model by using an online endpoint)

- Deploy a model to a batch endpoint (Microsoft Documentation: Use batch endpoints for batch scoring)

- Test an online deployed service (Microsoft Documentation: Testing the Deployment)

- Invoke the batch endpoint to start a batch scoring job (Microsoft Documentation: Use batch endpoints for batch scoring)
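
Deployments that use a custom scoring script typically follow an `init()` / `run()` pattern: `init()` loads the model once, and `run()` handles each request. The following is a minimal local sketch of that pattern, not a complete deployment. The `MeanThresholdModel` class is a hypothetical stand-in for a real trained model, and the `{"data": [...]}` payload shape is an assumption.

```python
import json

model = None  # populated once by init()

class MeanThresholdModel:
    """Hypothetical stand-in for a trained model loaded from disk."""
    def predict(self, rows):
        return [1 if sum(row) / len(row) > 0.5 else 0 for row in rows]

def init():
    """Called once when the deployment starts; load the model here."""
    global model
    model = MeanThresholdModel()  # a real script would deserialize a saved model

def run(raw_data):
    """Called per request; parse the JSON payload and return predictions."""
    rows = json.loads(raw_data)["data"]
    return {"predictions": model.predict(rows)}

# Local smoke test that mimics what a deployed service would receive.
init()
response = run(json.dumps({"data": [[0.9, 0.8], [0.1, 0.2]]}))
print(response)  # {'predictions': [1, 0]}
```

Exercising `init()` and `run()` locally like this, before deploying, is a cheap way to catch payload-parsing mistakes.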

Apply machine learning operations (MLOps) practices

- Trigger an Azure Machine Learning job, including from Azure DevOps or GitHub (Microsoft Documentation: Trigger Azure Machine Learning jobs with GitHub Actions)

- Automate model retraining based on new data additions or data changes

- Define event-based retraining triggers (Microsoft Documentation: Create a trigger that runs a pipeline in response to a storage event)
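
The decision logic behind retraining on data changes can be sketched without any cloud service. The example below is an illustration only: the function names `fingerprint` and `should_retrain` are hypothetical, and in practice the trigger would usually come from a storage event or a schedule rather than a local directory scan. It hashes the contents of a data folder and flags retraining when the hash changes.

```python
import hashlib
import tempfile
from pathlib import Path

def fingerprint(data_dir):
    """Hash file names and contents so additions or edits change the digest."""
    digest = hashlib.sha256()
    for path in sorted(Path(data_dir).rglob("*")):
        if path.is_file():
            digest.update(path.relative_to(data_dir).as_posix().encode())
            digest.update(path.read_bytes())
    return digest.hexdigest()

def should_retrain(data_dir, last_fingerprint):
    """Return (decision, new_fingerprint) for the current state of the data."""
    current = fingerprint(data_dir)
    return current != last_fingerprint, current

with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "batch1.csv").write_text("x,y\n1,2\n")
    _, baseline = should_retrain(tmp, None)        # record the initial state
    unchanged, _ = should_retrain(tmp, baseline)   # nothing new yet
    (Path(tmp) / "batch2.csv").write_text("x,y\n3,4\n")  # new data arrives
    changed, _ = should_retrain(tmp, baseline)
    print(unchanged, changed)  # False True
```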

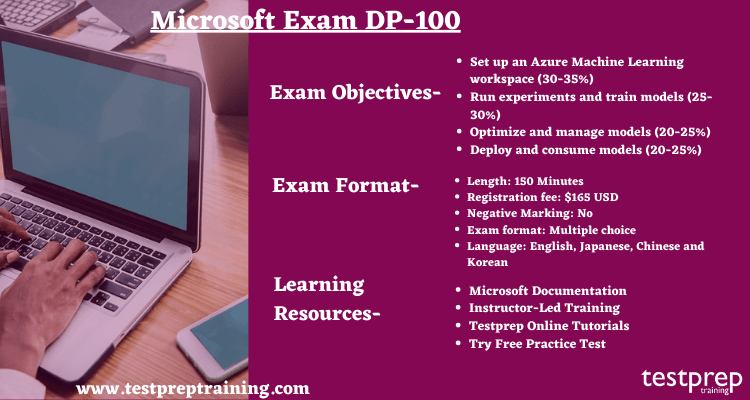

Exam Format

- The DP-100 exam contains around 40-60 questions on implementing data science solutions on Azure, presented in several different formats.

- We give a range of 40-60 because the number of questions varies from one sitting to the next, always within this bracket.

- The exam can include several types of questions, such as multiple-choice questions, some of which are based on case studies.

- The candidate may also find single-choice questions, fill-in-the-blank questions that require completing a piece of code, and questions that ask for steps to be arranged in the correct sequence.

What makes the Microsoft DP-100 Exam Difficult?

The difficulty of the Microsoft DP-100 (Designing and Implementing a Data Science Solution on Azure) exam varies depending on the individual’s prior experience and knowledge of data science, Azure, and related technologies. However, in general, it can be considered to be a challenging exam that requires a good understanding of data science concepts and the ability to apply them in real-world scenarios.

To pass the DP-100 exam, you should have a strong understanding of the following topics:

- Data Science Process: Knowledge of the data science process, including data collection, preparation, modeling, and deployment.

- Azure Data Science Services: Understanding of Azure data science services, such as Azure Machine Learning, Azure Databricks, and Azure HDInsight.

- Data Science Tools: Knowledge of data science tools, such as Python, R, and Jupyter Notebooks.

- Machine Learning: Knowledge of machine learning principles and algorithms, encompassing supervised and unsupervised learning, as well as deep learning.

- Data Engineering: Knowledge of data engineering concepts and techniques, including data ingestion, processing, and storage.

Microsoft Exam DP-100 Preparation Guide

To prepare for the DP-100 exam, you should have hands-on experience with Azure data science services and tools, as well as a strong understanding of data science concepts and practices. Microsoft provides a range of resources, including online courses, tutorials, and hands-on labs, to help individuals prepare for the exam.

Overall, the DP-100 exam is challenging, but achievable with sufficient preparation and study. By focusing on the key topics and using the available resources, individuals can develop the skills and knowledge needed to pass the exam and achieve their certification goals.

- Microsoft Learning Platform – Microsoft offers various learning paths for this exam on its official website. Candidates should visit the site, where they will find learning paths, documentation, and study guides, and where relevant content is easy to locate.

- Microsoft Documentation – Microsoft documentation is an important learning resource while preparing for exams. The candidate will find documentation on every topic relating to the particular exam.

- Instructor-Led Training – The training programs that Microsoft itself provides are available on its website. Instructor-led training is an essential resource when preparing for an exam like Microsoft DP-100.

- Testprep Online Tutorials– Exam DP-100: Designing and Implementing a Data Science Solution on Azure Online Tutorial boosts your understanding and delves deep into the exam concepts. It not only includes exam details and policies but also reinforces your preparation.

- Try Practice Test– Practice tests play a crucial role in assuring candidates about their preparation. These tests help candidates identify their weak areas, allowing them to focus on improvement. With numerous practice tests available online, candidates have the flexibility to choose the ones that suit them best. At Testprep training, we also provide practice tests that are very helpful for those in preparation.

Some basic exam tips:

Here are some tips for preparing for and taking the Microsoft DP-100 (Designing and Implementing a Data Science Solution on Azure) exam:

- Study the Exam Objectives: Get to know the goals of the exam and ensure a clear understanding of the subjects it encompasses.

- Hands-on Experience: Get hands-on experience with Azure data science services and tools, including Azure Machine Learning, Azure Databricks, and Azure HDInsight.

- Practice with Real-World Scenarios: Practice applying data science concepts to real-world scenarios to develop your problem-solving skills.

- Use Official Microsoft Resources: Use Microsoft’s official resources, including online courses, tutorials, and hands-on labs, to prepare for the exam.

- Review Past Exam Questions: Review past exam questions to get an understanding of the types of questions you can expect on the exam.

- Stay Current with Azure Updates: Stay up to date with the latest developments in Azure and data science, as the exam may include questions on new features and services.

- Practice Time Management: Make sure you are familiar with the format of the exam and practice managing your time during practice exams, as you will need to answer a large number of questions in a limited amount of time.

- Stay Focused and Relaxed: On the day of the exam, stay focused and relaxed, and take breaks as needed to clear your mind and reduce stress.

By following these tips and using the available resources, you can increase your chances of success on the DP-100 exam and achieve your certification goals.

We at Testprep Training hope that this article helped you understand how difficult this exam can be. For better preparation, the candidate should use the learning resources mentioned above and try practice tests as well. We wish you good luck with your exam!