The market for artificial intelligence and machine learning-powered solutions is expected to grow to $1.2 billion by 2023. Because this demand is not fleeting and will stay with us for a long time, it is essential to consider business needs both now and in the future. Furthermore, the GCP data engineering role has evolved and now requires a broader set of skills. All of this brings us to the topic of this article: the GCP Data Engineer Certification cheat sheet.

To help aspiring candidates keep pace with this evolving skill set, this article presents a cheat sheet along with the key exam details.

Why Take Google Cloud Certified Data Engineer Certification?

Data management, Data Analytics, Machine Learning, and Artificial Intelligence are all red-hot topics. And who does all of these better than Google?

Earning a Google Data Engineer certification is not an ordeal; in other words, with focused preparation it is well within reach. A Google certification can have a meaningful impact on your career in the IT industry, and it has a good track record of delivering value to both employees and employers. The certification offers the following benefits:

- It enhances your knowledge and understanding of the technology and the products

- It gives you an edge over other candidates

- It serves as proof of your continuous learning

- It earns you global recognition as a Google certified data engineering professional

- It improves your chances of landing better opportunities and a higher salary

Now that we have covered the benefits, let's move on to the essential details of the Google Data Engineer certification.

GCP Data Engineer: Overview

The GCP Data Engineer exam is best suited to individuals with an interest in exploring and analyzing data. Candidates for the exam typically work in roles that enable data-driven decision-making. The objective of the certification is to validate an individual's ability to collect, transform, and publish data.

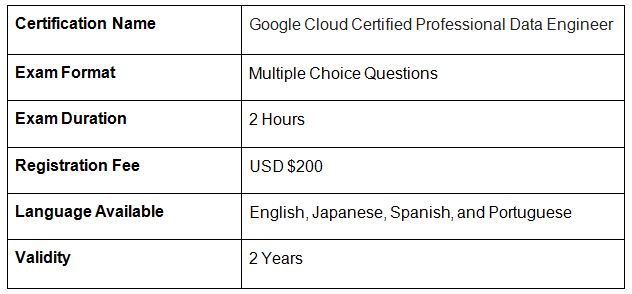

Exam Details

The GCP Data Engineer certification exam consists of multiple-choice and multiple-select questions. The exam lasts 2 hours, and candidates can choose a test center from Google's list of testing locations.

The registration fee for the exam is USD 200, plus applicable taxes. The exam is available in four languages: English, Portuguese, Japanese, and Spanish.

Prerequisites

Every aspiring Data Engineer should understand the exam's prerequisites. The most notable point is that the GCP Data Engineer exam has no formal prerequisites. However, Google recommends a certain level of experience before you attempt it.

Specifically, the recommended experience is three or more years in data-related industry roles, including at least one year of hands-on experience designing and managing solutions on GCP. In addition, candidates must be at least 18 years old to take the exam.

Course Outline

Before you begin your GCP Data Engineer journey, get to know the course outline, which lists the topics and subtopics that need special attention. Understanding the outline helps you plan your preparation and stay focused on the exam objectives. The exam covers the following domains:

Section 1: Designing data processing systems (22%)

1.1 Designing for security and compliance. Considerations include:

- Identity and Access Management (e.g., Cloud IAM and organization policies) (Google Documentation: Identity and Access Management)

- Data security (encryption and key management) (Google Documentation: Default encryption at rest)

- Privacy (e.g., personally identifiable information, and Cloud Data Loss Prevention API) (Google Documentation: Sensitive Data Protection, Cloud Data Loss Prevention)

- Regional considerations (data sovereignty) for data access and storage (Google Documentation: Implement data residency and sovereignty requirements)

- Legal and regulatory compliance
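
Cloud DLP detects and de-identifies sensitive values such as email addresses and phone numbers. The snippet below does not call the Cloud DLP API; it is a minimal, regex-based stand-in that illustrates masking PII before data reaches an analytics store. The function name `mask_pii` and both patterns are hypothetical and far less robust than DLP's built-in detectors.

```python
import re

# Hypothetical, deliberately simple patterns; managed detectors such as
# Cloud DLP infoTypes are far more robust than these regular expressions.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def mask_pii(text: str, mask_char: str = "#") -> str:
    """Replace every character of a detected email or phone number with mask_char."""

    def _mask(match: re.Match) -> str:
        return mask_char * len(match.group(0))

    text = EMAIL_RE.sub(_mask, text)
    return PHONE_RE.sub(_mask, text)


if __name__ == "__main__":
    record = "Contact jane.doe@example.com or 555-123-4567 for details."
    print(mask_pii(record))
```

Masking with same-length characters preserves field widths; DLP also offers other transformations (for example, tokenization) that this sketch does not attempt.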

1.2 Designing for reliability and fidelity. Considerations include:

- Preparing and cleaning data (e.g., Dataprep, Dataflow, and Cloud Data Fusion) (Google Documentation: Cloud Data Fusion overview)

- Monitoring and orchestration of data pipelines (Google Documentation: Orchestrating your data workloads in Google Cloud)

- Disaster recovery and fault tolerance (Google Documentation: What is a Disaster Recovery Plan?)

- Making decisions related to ACID (atomicity, consistency, isolation, and durability) compliance and availability

- Data validation
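
Data validation often means checking each record against an expected schema and routing failures aside instead of silently dropping them. The sketch below assumes a hypothetical three-field order schema and simple range rules; the names `SCHEMA`, `validate`, and `ValidationResult` are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"order_id": str, "amount": float, "quantity": int}


@dataclass
class ValidationResult:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, reason) pairs


def validate(records: list[dict[str, Any]]) -> ValidationResult:
    """Split records into valid rows and rejected rows tagged with a reason."""
    result = ValidationResult()
    for rec in records:
        missing = [name for name in SCHEMA if name not in rec]
        if missing:
            result.rejected.append((rec, f"missing fields: {missing}"))
            continue
        wrong_type = [name for name, typ in SCHEMA.items() if not isinstance(rec[name], typ)]
        if wrong_type:
            result.rejected.append((rec, f"wrong type: {wrong_type}"))
            continue
        # Hypothetical business rules: no negative amounts, at least one item.
        if rec["amount"] < 0 or rec["quantity"] < 1:
            result.rejected.append((rec, "out-of-range value"))
            continue
        result.valid.append(rec)
    return result


if __name__ == "__main__":
    rows = [
        {"order_id": "A1", "amount": 19.99, "quantity": 2},
        {"order_id": "A2", "amount": -5.0, "quantity": 1},
        {"order_id": "A3", "quantity": 1},
    ]
    res = validate(rows)
    print(len(res.valid), len(res.rejected))  # 1 2
```

Keeping rejected rows together with a reason, similar to a dead-letter queue, makes it easier to audit and replay bad data later.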

1.3 Designing for flexibility and portability. Considerations include:

- Mapping current and future business requirements to the architecture

- Designing for data and application portability (e.g., multi-cloud and data residency requirements) (Google Documentation: Implement data residency and sovereignty requirements, Multicloud database management: Architectures, use cases, and best practices)

- Data staging, cataloging, and discovery (data governance) (Google Documentation: Data Catalog overview)

1.4 Designing data migrations. Considerations include:

- Analyzing current stakeholder needs, users, processes, and technologies and creating a plan to get to the desired state

- Planning migration to Google Cloud (e.g., BigQuery Data Transfer Service, Database Migration Service, Transfer Appliance, Google Cloud networking, Datastream) (Google Documentation: Migrate to Google Cloud: Transfer your large datasets, Database Migration Service)

- Designing the migration validation strategy (Google Documentation: Migrate to Google Cloud: Best practices for validating a migration plan, About migration planning)

- Designing the project, dataset, and table architecture to ensure proper data governance (Google Documentation: Introduction to data governance in BigQuery, Create datasets)
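
One common migration validation technique is to compare row counts and an order-independent checksum computed over the source and target tables. The sketch below assumes both tables can be streamed as Python dictionaries; at scale, the same comparison would typically be pushed down into aggregate queries on each system. All names are hypothetical.

```python
import hashlib
import json
from typing import Iterable

_MOD = 1 << 256


def row_digest(row: dict) -> int:
    """Hash one row via canonical JSON (sorted keys) so column order doesn't matter."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return int.from_bytes(hashlib.sha256(canonical.encode("utf-8")).digest(), "big")


def table_fingerprint(rows: Iterable[dict]) -> tuple[int, int]:
    """Return (row_count, order-independent checksum) for a table."""
    count, total = 0, 0
    for row in rows:
        count += 1
        # Summing digests (rather than XOR-ing them) keeps duplicate rows
        # from cancelling each other out.
        total = (total + row_digest(row)) % _MOD
    return count, total


def tables_match(source: Iterable[dict], target: Iterable[dict]) -> bool:
    """True if both tables have the same row count and the same checksum."""
    return table_fingerprint(source) == table_fingerprint(target)
```

A matching fingerprint is strong evidence, not proof, that both tables hold the same rows; a mismatch tells you to drill down, for example by comparing fingerprints per partition or per key range.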

Section 2: Ingesting and processing the data (25%)

2.1 Planning the data pipelines. Considerations include:

- Defining data sources and sinks (Google Documentation: Sources and sinks)

- Defining data transformation logic (Google Documentation: Introduction to data transformation)

- Networking fundamentals

- Data encryption (Google Documentation: Data encryption options)

2.2 Building the pipelines. Considerations include:

- Data cleansing

- Identifying the services (e.g., Dataflow, Apache Beam, Dataproc, Cloud Data Fusion, BigQuery, Pub/Sub, Apache Spark, Hadoop ecosystem, and Apache Kafka) (Google Documentation: Dataflow overview, Programming model for Apache Beam)

- Transformation:

  - Batch (Google Documentation: Get started with Batch)

  - Streaming (e.g., windowing, late arriving data)

  - Language

- Ad hoc data ingestion (one-time or automated pipeline) (Google Documentation: Design Dataflow pipeline workflows)

- Data acquisition and import (Google Documentation: Exporting and Importing Entities)

- Integrating with new data sources (Google Documentation: Integrate your data sources with Data Catalog)
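
Windowing and late-arriving data are easiest to grasp with a small simulation. The sketch below is plain Python, not Apache Beam: it assigns events to fixed (tumbling) windows by event time, treats the watermark as the largest event time seen so far (a simplifying assumption; real runners such as Dataflow estimate watermarks differently), and drops events that arrive after the watermark has passed the window end plus an allowed-lateness period. Function names are hypothetical.

```python
from collections import defaultdict


def window_start(event_time: int, size: int) -> int:
    """Start of the fixed (tumbling) window that contains event_time."""
    return event_time - event_time % size


def count_per_window(event_times: list[int], size: int, allowed_lateness: int):
    """Count events per fixed window, dropping events that arrive too late.

    event_times are listed in *arrival* order. As a simplifying assumption, the
    watermark is the largest event time seen so far.
    Returns (counts keyed by window start, list of dropped event times).
    """
    counts: dict[int, int] = defaultdict(int)
    dropped: list[int] = []
    watermark = float("-inf")
    for t in event_times:
        start = window_start(t, size)
        window_end = start + size
        if watermark >= window_end + allowed_lateness:
            # Watermark has passed the window end plus allowed lateness:
            # the window is treated as closed, so the event is discarded.
            dropped.append(t)
        else:
            # On time, or late but still within the allowed lateness.
            counts[start] += 1
        watermark = max(watermark, t)
    return dict(counts), dropped


if __name__ == "__main__":
    arrivals = [5, 20, 65, 50, 130, 40, 200, 10]  # seconds, out of order
    print(count_per_window(arrivals, size=60, allowed_lateness=30))
    # ({0: 3, 60: 1, 120: 1, 180: 1}, [40, 10])
```

In this run, the event at 50 arrives after the watermark (65) has passed its window end (60) but is still counted because it is within the 30-second allowed lateness; the events at 40 and 10 arrive after the watermark reaches 130 and are dropped.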

2.3 Deploying and operationalizing the pipelines. Considerations include:

- Job automation and orchestration (e.g., Cloud Composer and Workflows) (Google Documentation: Choose Workflows or Cloud Composer for service orchestration, Cloud Composer overview)

- CI/CD (Continuous Integration and Continuous Deployment)

Section 3: Storing the data (20%)

3.1 Selecting storage systems. Considerations include:

- Analyzing data access patterns (Google Documentation: Data analytics and pipelines overview)

- Choosing managed services (e.g., Bigtable, Cloud Spanner, Cloud SQL, Cloud Storage, Firestore, Memorystore) (Google Documentation: Google Cloud database options)

- Planning for storage costs and performance (Google Documentation: Optimize cost: Storage)

- Lifecycle management of data (Google Documentation: Options for controlling data lifecycles)
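
Lifecycle management usually means age-based rules that move data to cheaper storage classes and eventually delete it. The sketch below models such a policy in plain Python rather than calling Cloud Storage. The thresholds are hypothetical; NEARLINE and COLDLINE are real Cloud Storage class names, but the `Rule` type and `applicable_rule` helper are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    min_age_days: int                    # condition: object age in days
    action: str                          # "SetStorageClass" or "Delete"
    storage_class: Optional[str] = None  # target class for SetStorageClass


# Hypothetical policy loosely modeled on Cloud Storage lifecycle rules.
POLICY = [
    Rule(30, "SetStorageClass", "NEARLINE"),
    Rule(90, "SetStorageClass", "COLDLINE"),
    Rule(365, "Delete"),
]


def applicable_rule(age_days: int, policy: list[Rule] = POLICY) -> Optional[Rule]:
    """Return the matching rule with the highest age threshold, or None.

    Simplification: Cloud Storage applies its own precedence rules
    (for example, Delete takes precedence over SetStorageClass).
    """
    matching = [rule for rule in policy if age_days >= rule.min_age_days]
    return max(matching, key=lambda rule: rule.min_age_days, default=None)


if __name__ == "__main__":
    for age in (10, 45, 120, 400):
        print(age, applicable_rule(age))
```

Choosing the thresholds is where cost planning comes in: colder classes are cheaper to store but more expensive to read, so the rules should follow the access patterns you analyzed.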

3.2 Planning for using a data warehouse. Considerations include:

- Designing the data model (Google Documentation: Data model)

- Deciding the degree of data normalization (Google Documentation: Normalization)

- Mapping business requirements

- Defining architecture to support data access patterns (Google Documentation: Data analytics design patterns)
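
Deciding the degree of normalization often comes down to trading join cost at query time against duplicated storage. BigQuery, for instance, supports nested and repeated fields, which let you store child rows inside their parent record. The sketch below shows that reshaping in plain Python with hypothetical `customers` and `orders` tables; it is not a BigQuery API call.

```python
from collections import defaultdict


def denormalize(customers: list[dict], orders: list[dict]) -> list[dict]:
    """Nest each customer's orders inside the customer record.

    Mirrors a one-to-many join collapsed into a repeated field, so reading a
    customer together with their orders needs no join at query time.
    """
    orders_by_customer: dict = defaultdict(list)
    for order in orders:
        orders_by_customer[order["customer_id"]].append(
            {"order_id": order["order_id"], "amount": order["amount"]}
        )
    return [
        # .get() avoids inserting empty lists for customers without orders.
        {**customer, "orders": orders_by_customer.get(customer["customer_id"], [])}
        for customer in customers
    ]


if __name__ == "__main__":
    customers = [{"customer_id": 1, "name": "Ana"}, {"customer_id": 2, "name": "Bo"}]
    orders = [
        {"order_id": "o1", "customer_id": 1, "amount": 10.0},
        {"order_id": "o2", "customer_id": 1, "amount": 5.0},
    ]
    for row in denormalize(customers, orders):
        print(row)
```

The normalized form is easier to update in one place; the nested form is usually cheaper to read. Which one wins depends on the business requirements and access patterns listed above.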

3.3 Using a data lake. Considerations include:

- Managing the lake (configuring data discovery, access, and cost controls) (Google Documentation: Manage a lake, Secure your lake)

- Processing data (Google Documentation: Data processing services)

- Monitoring the data lake (Google Documentation: What is a Data Lake?)

3.4 Designing for a data mesh. Considerations include:

- Building a data mesh based on requirements by using Google Cloud tools (e.g., Dataplex, Data Catalog, BigQuery, Cloud Storage) (Google Documentation: Build a data mesh, Build a modern, distributed Data Mesh with Google Cloud)

- Segmenting data for distributed team usage (Google Documentation: Network segmentation and connectivity for distributed applications in Cross-Cloud Network)

- Building a federated governance model for distributed data systems

Section 4: Preparing and using data for analysis (15%)

4.1 Preparing data for visualization. Considerations include:

- Connecting to tools

- Precalculating fields (Google Documentation: Introduction to materialized views)

- BigQuery materialized views (view logic) (Google Documentation: Create materialized views)

- Determining granularity of time data (Google Documentation: Filtering and aggregation: manipulating time series, Structure of Detailed data export)

- Troubleshooting poor performing queries (Google Documentation: Diagnose issues)

- Identity and Access Management (IAM) and Cloud Data Loss Prevention (Cloud DLP) (Google Documentation: IAM roles)
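
Determining the granularity of time data amounts to choosing the bucket size that timestamps are truncated to before aggregation, comparable to what BigQuery's `TIMESTAMP_TRUNC` does in SQL. The plain-Python sketch below uses hypothetical helper names and supports only minute, hour, and day buckets.

```python
from collections import Counter
from datetime import datetime


def truncate(ts: datetime, granularity: str) -> datetime:
    """Round a timestamp down to the start of its minute, hour, or day."""
    if granularity == "minute":
        return ts.replace(second=0, microsecond=0)
    if granularity == "hour":
        return ts.replace(minute=0, second=0, microsecond=0)
    if granularity == "day":
        return ts.replace(hour=0, minute=0, second=0, microsecond=0)
    raise ValueError(f"unsupported granularity: {granularity}")


def counts_by(timestamps: list[datetime], granularity: str) -> dict[datetime, int]:
    """Count events per time bucket, returned in chronological order."""
    buckets = Counter(truncate(ts, granularity) for ts in timestamps)
    return dict(sorted(buckets.items()))


if __name__ == "__main__":
    events = [
        datetime(2024, 5, 1, 9, 5),
        datetime(2024, 5, 1, 9, 40),
        datetime(2024, 5, 1, 10, 15),
    ]
    print(counts_by(events, "hour"))  # two buckets: 09:00 -> 2, 10:00 -> 1
    print(counts_by(events, "day"))   # one bucket: 2024-05-01 -> 3
```

Coarser buckets mean fewer rows for a dashboard to scan, which is one reason to precalculate them, for example in a materialized view.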

4.2 Sharing data. Considerations include:

- Defining rules to share data (Google Documentation: Secure data exchange with ingress and egress rules)

- Publishing datasets (Google Documentation: BigQuery public datasets)

- Publishing reports and visualizations

- Analytics Hub (Google Documentation: Introduction to Analytics Hub)

4.3 Exploring and analyzing data. Considerations include:

- Preparing data for feature engineering (training and serving machine learning models)

- Conducting data discovery (Google Documentation: Discover data)

Section 5: Maintaining and automating data workloads (18%)

5.1 Optimizing resources. Considerations include:

- Minimizing costs per required business need for data (Google Documentation: Migrate to Google Cloud: Minimize costs)

- Ensuring that enough resources are available for business-critical data processes (Google Documentation: Disaster recovery planning guide)

- Deciding between persistent or job-based data clusters (e.g., Dataproc) (Google Documentation: Dataproc overview)
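
The choice between a long-running cluster and clusters created per job (for example, Dataproc clusters that are spun up for a job and deleted afterwards) is largely arithmetic. The sketch below assumes a hypothetical flat hourly rate, identical cluster sizes, jobs that each get their own cluster, and a fixed startup overhead per job; it ignores autoscaling, idle deletion, and real pricing, so treat it as a back-of-the-envelope model.

```python
def persistent_cost(rate_per_hour: float, hours: float = 24.0) -> float:
    """A persistent cluster is billed for every hour it runs, busy or idle."""
    return rate_per_hour * hours


def job_based_cost(rate_per_hour: float, jobs: int, job_hours: float,
                   startup_hours: float) -> float:
    """A job-scoped cluster is billed only while a job (plus its startup) runs."""
    return rate_per_hour * jobs * (job_hours + startup_hours)


def cheaper_option(rate_per_hour: float, jobs: int, job_hours: float,
                   startup_hours: float, hours: float = 24.0) -> str:
    """Compare both models over the same period using the hypothetical rate."""
    persistent = persistent_cost(rate_per_hour, hours)
    ephemeral = job_based_cost(rate_per_hour, jobs, job_hours, startup_hours)
    return "job-based" if ephemeral < persistent else "persistent"


if __name__ == "__main__":
    # Hypothetical: 10 cost units per hour, 1-hour jobs, 6 minutes of startup.
    print(cheaper_option(10.0, jobs=4, job_hours=1.0, startup_hours=0.1))
    print(cheaper_option(10.0, jobs=30, job_hours=1.0, startup_hours=0.1))
```

With a handful of short jobs per day, per-job clusters avoid paying for idle time; once total busy time (including startup) approaches the full period, a persistent cluster becomes the better deal.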

5.2 Designing automation and repeatability. Considerations include:

- Creating directed acyclic graphs (DAGs) for Cloud Composer (Google Documentation: Write Airflow DAGs, Add and update DAGs)

- Scheduling jobs in a repeatable way (Google Documentation: Schedule and run a cron job)
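
In Cloud Composer, a DAG is an Airflow Python file that declares tasks and their dependencies. The snippet below does not use Airflow; it uses the standard library's `graphlib` (Python 3.9+) to show what "directed acyclic" buys you: a valid execution order, stages whose tasks can run in parallel, and an error when a cycle sneaks in. The task names and the `execution_stages` helper are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join": {"clean_orders", "extract_customers"},
    "load_bigquery": {"join"},
}


def execution_stages(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into stages; tasks in the same stage have no mutual dependencies.

    Raises graphlib.CycleError if the graph is not acyclic.
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # detects cycles up front
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


if __name__ == "__main__":
    for i, stage in enumerate(execution_stages(PIPELINE), start=1):
        print(f"stage {i}: {stage}")
    try:
        execution_stages({"a": {"b"}, "b": {"a"}})
    except CycleError as err:
        print("rejected:", err.args[0])
```

An orchestrator such as Airflow performs the same kind of dependency resolution, adding scheduling, retries, and monitoring on top.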

5.3 Organizing workloads based on business requirements. Considerations include:

- Flex, on-demand, and flat rate slot pricing (index on flexibility or fixed capacity) (Google Documentation: Introduction to workload management, Introduction to legacy reservations)

- Interactive or batch query jobs (Google Documentation: Run a query)

5.4 Monitoring and troubleshooting processes. Considerations include:

- Observability of data processes (e.g., Cloud Monitoring, Cloud Logging, BigQuery admin panel) (Google Documentation: Observability in Google Cloud, Introduction to BigQuery monitoring)

- Monitoring planned usage

- Troubleshooting error messages, billing issues, and quotas (Google Documentation: Troubleshoot quota errors, Troubleshoot quota and limit errors)

- Manage workloads, such as jobs, queries, and compute capacity (reservations) (Google Documentation: Workload management using Reservations)

5.5 Maintaining awareness of failures and mitigating impact. Considerations include:

- Designing systems for fault tolerance and managing restarts (Google Documentation: Designing resilient systems)

- Running jobs in multiple regions or zones (Google Documentation: Serve traffic from multiple regions, Regions and zones)

- Preparing for data corruption and missing data (Google Documentation: Verifying end-to-end data integrity)

- Data replication and failover (e.g., Cloud SQL, Redis clusters) (Google Documentation: High availability and replicas)
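
Designing for fault tolerance often starts with retrying transient failures without overwhelming the service that just failed. The sketch below shows exponential backoff with jitter in plain Python; the function name, defaults, and the choice of which exceptions count as transient are assumptions. Retries are only safe when the operation is idempotent, for example a load that overwrites the same partition rather than appending to it.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(), retrying transient failures with exponential backoff.

    The delay cap grows as base_delay * 2**(attempt - 1), limited to max_delay,
    and the actual wait is random between 0 and that cap ("full jitter") so
    that many restarting workers do not retry in lockstep.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
```

Injecting `sleep` as a parameter keeps the helper testable: a test can pass a function that records delays instead of actually waiting.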

Google Cloud Platform

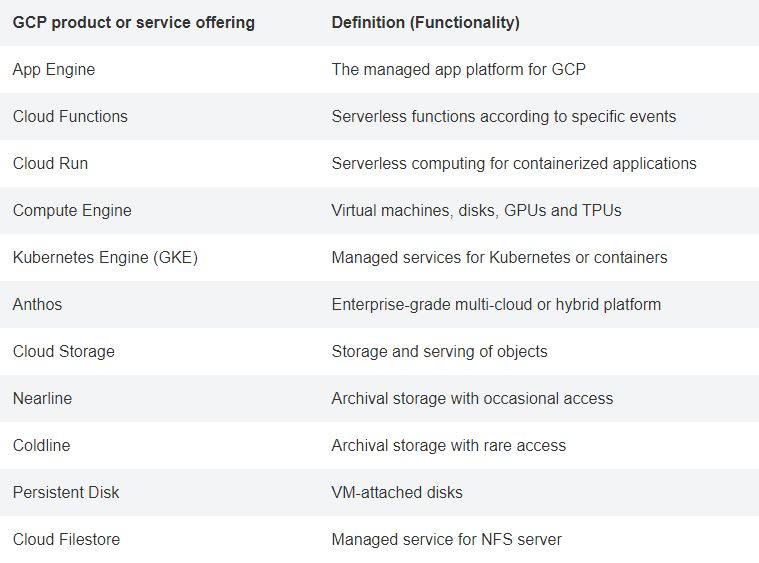

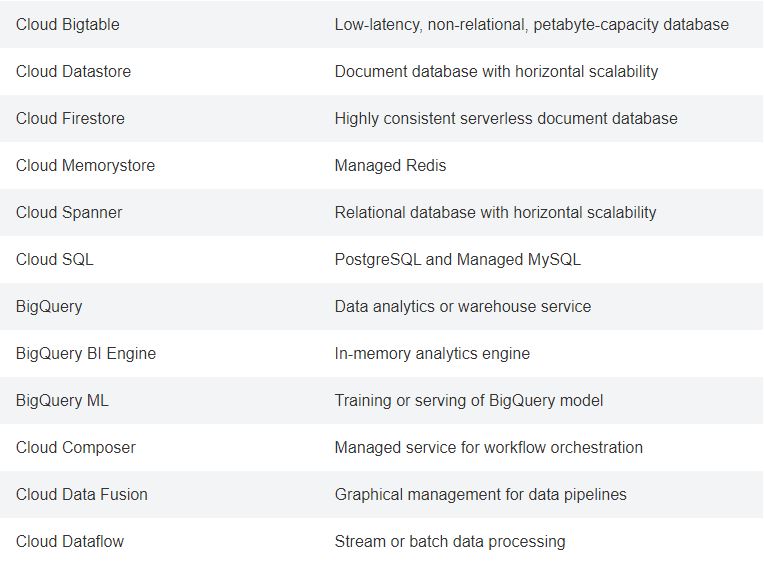

Notably, Google has become a strong player in the market by providing standard services. Google Cloud is currently ranked third or fourth among public cloud vendors, creating tough competition for Amazon, Microsoft, and IBM. The definition of Google Cloud Platform varies slightly depending on its source. However, the most general notion is that GCP is the collection of cloud computing services provided by Google. GCP's architecture is based on the infrastructure Google uses internally for end-user products such as YouTube and Google Search.

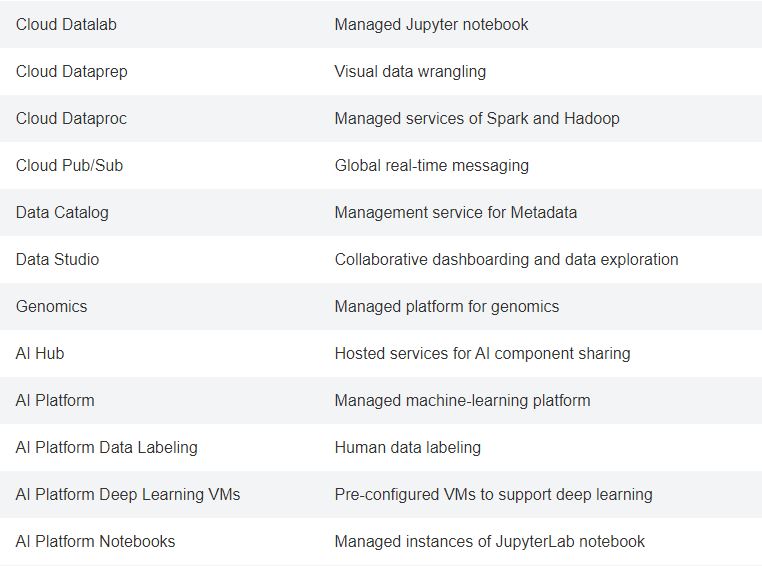

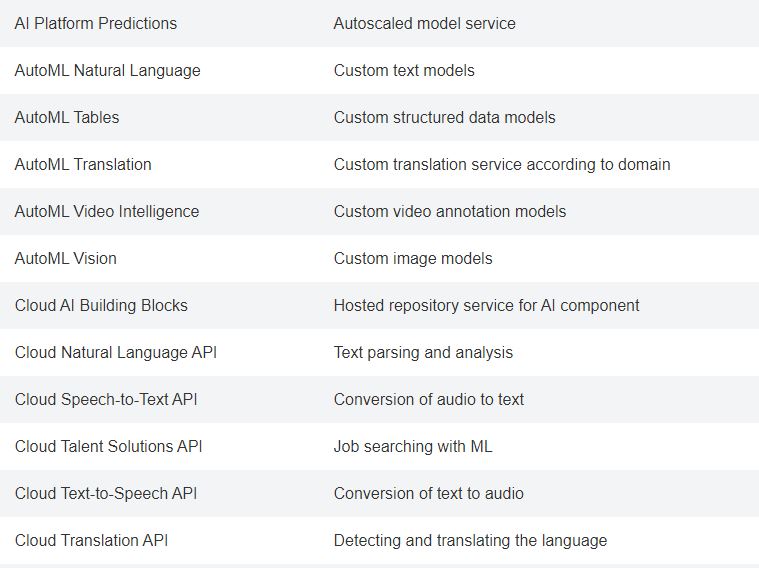

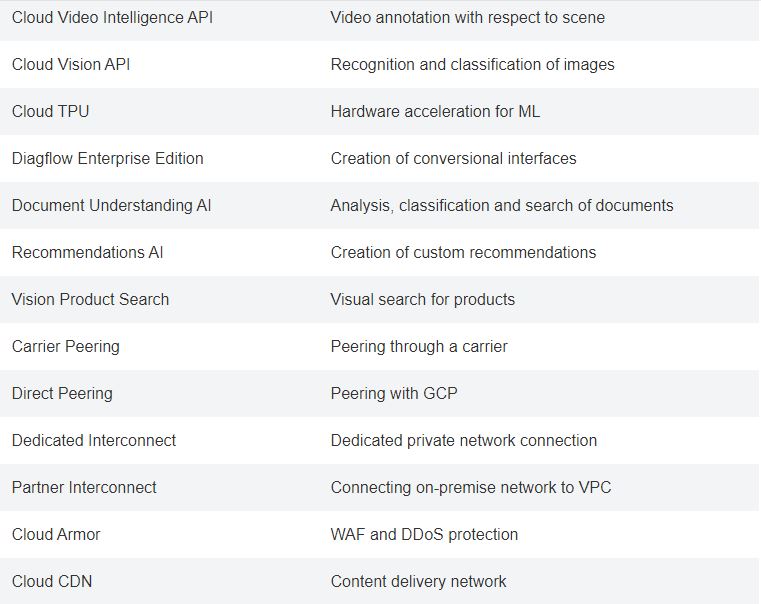

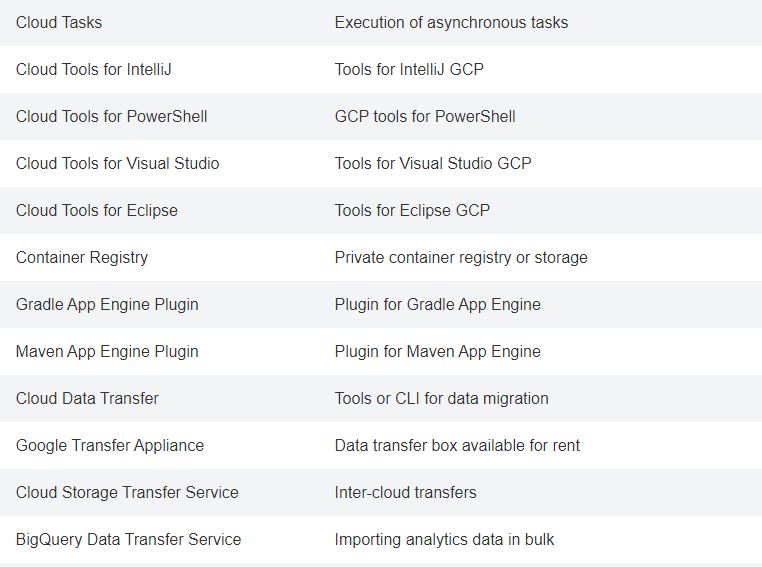

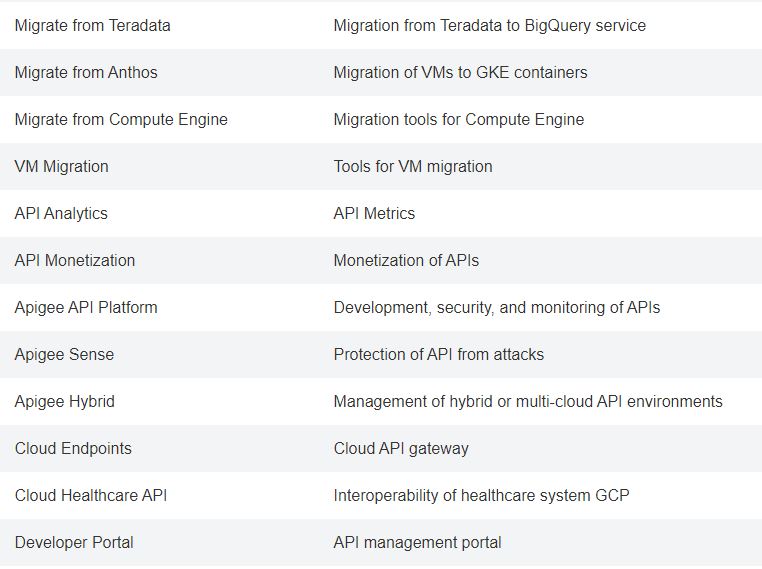

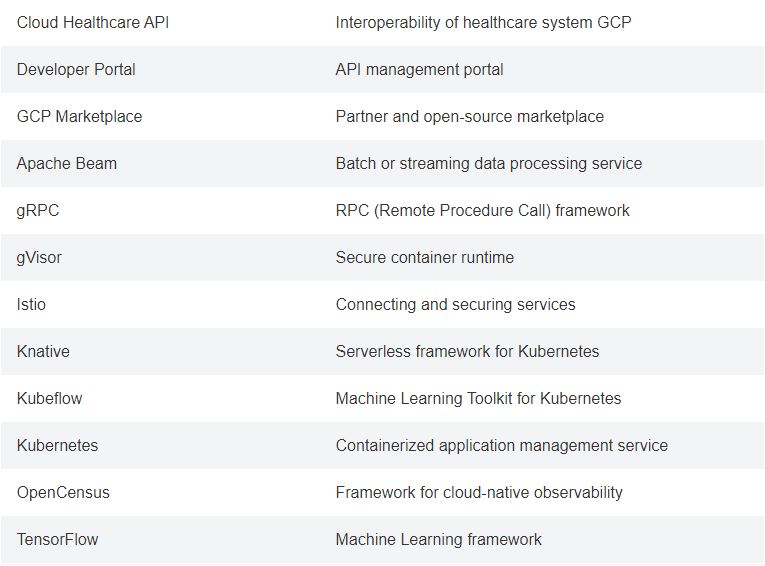

Products and Services on Google Cloud Platform

A Google Cloud Platform cheat sheet is essentially a compendium of the products and services GCP offers, and the platform's ever-expanding portfolio is one of its most prominent highlights. GCP's products and services fall into the following categories:

- Computing and hosting

- Machine learning

- Storage

- Big data

- Networking

- Databases

Summing Up

Well done on making it this far! We hope this article has been helpful and has boosted the confidence of those taking the exam soon. The certification details and the GCP cheat sheet above highlight the major areas to focus on, and the cheat sheet can help you prepare even if you lack commercial experience.

Getting a GCP Data Engineer certification can help you validate and gain recognition for your expertise on Google Cloud Platform. Are you looking to get your skills recognized? If so, check out our Google Cloud certification training courses, and top it off with hundreds of practice exam papers for the GCP Data Engineer certification.

Design and operate powerful big data and machine learning solutions using Google Cloud Platform. Prepare and become a Certified GCP Data Engineer Now!