Google Professional Machine Learning Engineer

For the Google Professional Machine Learning Engineer Exam, a skilled Machine Learning Engineer should use Google Cloud tools and known methods to create, test, put into action, and enhance ML models. They work with big and intricate datasets, writing code that can be used again. The ML Engineer thinks about responsible AI and fairness during the model-making process and works closely with others to make sure ML applications succeed in the long run.

Tasks of ML Engineer:

- The ML Engineer is really good at programming and knows their way around data platforms and tools for handling lots of data.

- They’re skilled in designing models, creating pipelines for data and ML, and understanding metrics.

- The ML Engineer knows the basics of MLOps, making apps, managing infrastructure, and handling data properly.

- They make ML easy for teams across the organization, training and improving models to create effective solutions that can scale up and perform well.

Knowledge Area:

- The test doesn’t check your coding skills directly. As long as you’re decent with Python and Cloud SQL, you should be able to understand and answer questions that include code snippets.

- A background of at least three years in the industry, with one or more years spent creating and overseeing solutions using Google Cloud.

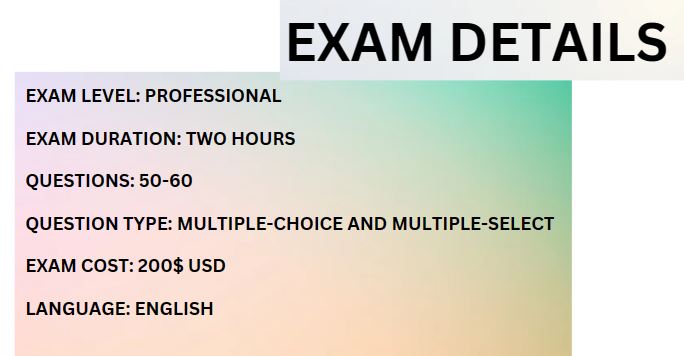

Exam Details

- The Google Professional Machine Learning Engineer exam lasts for two hours.

- To register, you’ll need to pay $200, plus any applicable taxes. The exam is offered in English language.

- As for the structure, expect 50-60 questions that include a mix of multiple-choice and multiple-select. You have the flexibility to take the exam online from anywhere or opt for an onsite proctored session at an authorized testing center.

Exam Register

Here’s how to schedule your exam:

- Go to the Google Cloud website and click “Register” for the exam you’re interested in.

- Google Cloud certifications are offered in different languages, and you can find the options on the exam page. If you’re a first-time test-taker or prefer a specific language, create a new user account in Google Cloud’s Webassessor in that language.

- During registration, decide whether you want to take the exam online or at a nearby testing facility. The Exam Delivery Method includes:

- Taking the online-proctored exam from a remote location. Check the online testing requirements beforehand.

- Taking the onsite-proctored exam at a testing center. Locate a test center near you.

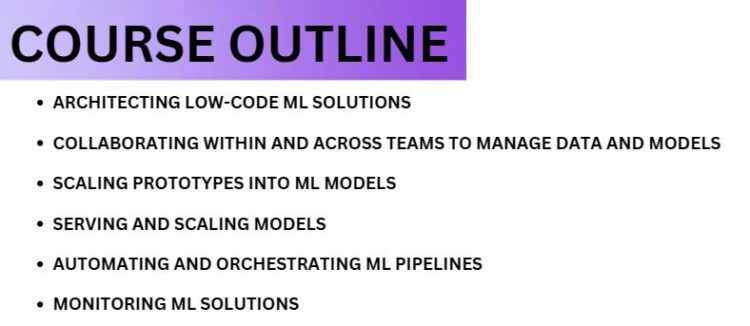

Course Outline

The guide for the exam provides a list of topics that could be included in the test. Take a look to ensure you’re familiar with the subjects. Specifically for the Professional Machine Learning Engineer Exam, here are the designated topics:

Section 1: Architecting low-code ML solutions (12%)

1.1 Developing ML models by using BigQuery ML. Considerations include:

- Building the appropriate BigQuery ML model (e.g., linear and binary classification, regression, time-series, matrix factorization, boosted trees, autoencoders) based on the business problem (Google Documentation: BigQuery ML model evaluation overview)

- Feature engineering or selection by using BigQuery ML (Google Documentation: Perform feature engineering with the TRANSFORM clause)

- Generating predictions by using BigQuery ML (Google Documentation: Use BigQuery ML to predict penguin weight)

1.2 Building AI solutions by using ML APIs. Considerations include:

- Building applications by using ML APIs (e.g., Cloud Vision API, Natural Language API, Cloud Speech API, Translation) (Google Documentation: Integrating machine learning APIs, Cloud Vision)

- Building applications by using industry-specific APIs (e.g., Document AI API, Retail API) (Google Documentation: Document AI)

1.3 Training models by using AutoML. Considerations include:

- Preparing data for AutoML (e.g., feature selection, data labeling, Tabular Workflows on AutoML) (Google Documentation: Tabular Workflow for End-to-End AutoML)

- Using available data (e.g., tabular, text, speech, images, videos) to train custom models (Google Documentation: Introduction to Vertex AI)

- Using AutoML for tabular data (Google Documentation: Create a dataset and train an AutoML classification model)

- Creating forecasting models using AutoML (Google Documentation: Forecasting with AutoML)

- Configuring and debugging trained models (Google Documentation: Monitor and debug training with an interactive shell)

Section 2: Collaborating within and across teams to manage data and models (16%)

2.1 Exploring and preprocessing organization-wide data (e.g., Cloud Storage, BigQuery, Cloud Spanner, Cloud SQL, Apache Spark, Apache Hadoop). Considerations include:

- Organizing different types of data (e.g., tabular, text, speech, images, videos) for efficient training (Google Documentation: Best practices for creating tabular training data)

- Managing datasets in Vertex AI (Google Documentation: Use managed datasets)

- Data preprocessing (e.g., Dataflow, TensorFlow Extended [TFX], BigQuery)

- Creating and consolidating features in Vertex AI Feature Store (Google Documentation: Introduction to feature management in Vertex AI)

- Privacy implications of data usage and/or collection (e.g., handling sensitive data such as personally identifiable information [PII] and protected health information [PHI]) (Google Documentation: De-identifying sensitive data)

2.2 Model prototyping using Jupyter notebooks. Considerations include:

- Choosing the appropriate Jupyter backend on Google Cloud (e.g., Vertex AI Workbench, notebooks on Dataproc) (Google Documentation: Create a Dataproc-enabled instance)

- Applying security best practices in Vertex AI Workbench (Google Documentation: Vertex AI access control with IAM)

- Using Spark kernels

- Integration with code source repositories (Google Documentation: Cloud Source Repositories)

- Developing models in Vertex AI Workbench by using common frameworks (e.g., TensorFlow, PyTorch, sklearn, Spark, JAX) (Google Documentation: Introduction to Vertex AI Workbench)

2.3 Tracking and running ML experiments. Considerations include:

- Choosing the appropriate Google Cloud environment for development and experimentation (e.g., Vertex AI Experiments, Kubeflow Pipelines, Vertex AI TensorBoard with TensorFlow and PyTorch) given the framework (Google Documentation: Introduction to Vertex AI Pipelines, Best practices for implementing machine learning on Google Cloud)

Section 3: Scaling prototypes into ML models (18%)

3.1 Building models. Considerations include:

- Choosing ML framework and model architecture (Google Documentation: Best practices for implementing machine learning on Google Cloud)

- Modeling techniques given interpretability requirements (Google Documentation: Introduction to Vertex Explainable AI)

3.2 Training models. Considerations include:

- Organizing training data (e.g., tabular, text, speech, images, videos) on Google Cloud (e.g., Cloud Storage, BigQuery)

- Ingestion of various file types (e.g., CSV, JSON, images, Hadoop, databases) into training (Google Documentation: How to ingest data into BigQuery so you can analyze it)

- Training using different SDKs (e.g., Vertex AI custom training, Kubeflow on Google Kubernetes Engine, AutoML, tabular workflows) (Google Documentation: Custom training overview)

- Using distributed training to organize reliable pipelines (Google Documentation: Distributed training)

- Hyperparameter tuning (Google Documentation: Overview of hyperparameter tuning)

- Troubleshooting ML model training failures (Google Documentation: Troubleshooting Vertex AI)

3.3 Choosing appropriate hardware for training. Considerations include:

- Evaluation of compute and accelerator options (e.g., CPU, GPU, TPU, edge devices) (Google Documentation: Introduction to Cloud TPU)

- Distributed training with TPUs and GPUs (e.g., Reduction Server on Vertex AI, Horovod) (Google Documentation: Distributed training)

Section 4: Serving and scaling models (19%)

4.1 Serving models. Considerations include:

- Batch and online inference (e.g., Vertex AI, Dataflow, BigQuery ML, Dataproc) (Google Documentation: Batch prediction components)

- Using different frameworks (e.g., PyTorch, XGBoost) to serve models (Google Documentation: Export model artifacts for prediction and explanation)

- Organizing a model registry (Google Documentation: Introduction to Vertex AI Model Registry)

- A/B testing different versions of a model

4.2 Scaling online model serving. Considerations include:

- Vertex AI Feature Store (Google Documentation: Introduction to feature management in Vertex AI)

- Vertex AI public and private endpoints (Google Documentation: Use private endpoints for online prediction)

- Choosing appropriate hardware (e.g., CPU, GPU, TPU, edge) (Google Documentation: Introduction to Cloud TPU)

- Scaling the serving backend based on the throughput (e.g., Vertex AI Prediction, containerized serving) (Google Documentation: Serving Predictions with NVIDIA Triton)

- Tuning ML models for training and serving in production (e.g., simplification techniques, optimizing the ML solution for increased performance, latency, memory, throughput) (Google Documentation: Best practices for implementing machine learning on Google Cloud)

Section 5: Automating and orchestrating ML pipelines (21%)

5.1 Developing end to end ML pipelines. Considerations include:

- Data and model validation (Google Documentation: Data validation errors)

- Ensuring consistent data pre-processing between training and serving (Google Documentation: Pre-processing for TensorFlow pipelines with tf.Transform on Google Cloud)

- Hosting third-party pipelines on Google Cloud (e.g., MLFlow) (Google Documentation: MLOps: Continuous delivery and automation pipelines in machine learning)

- Identifying components, parameters, triggers, and compute needs (e.g., Cloud Build, Cloud Run) (Google Documentation: Deploying to Cloud Run using Cloud Build)

- Orchestration framework (e.g., Kubeflow Pipelines, Vertex AI Pipelines, Cloud Composer) (Google Documentation: Introduction to Vertex AI Pipelines)

- Hybrid or multicloud strategies (Google Documentation: Build hybrid and multicloud architectures using Google Cloud)

- System design with TFX components or Kubeflow DSL (e.g., Dataflow) (Google Documentation: Architecture for MLOps using TensorFlow Extended, Vertex AI Pipelines, and Cloud Build)

5.2 Automating model retraining. Considerations include:

- Determining an appropriate retraining policy Continuous integration and continuous delivery (CI/CD) model deployment (e.g., Cloud Build, Jenkins) (Google Documentation: MLOps: Continuous delivery and automation pipelines in machine learning)

5.3 Tracking and auditing metadata. Considerations include:

- Tracking and comparing model artifacts and versions (e.g., Vertex AI Experiments, Vertex ML Metadata) (Google Documentation: Track Vertex ML Metadata, Introduction to Vertex AI Experiments)

- Hooking into model and dataset versioning (Google Documentation: Model versioning with Model Registry)

- Model and data lineage (Google Documentation: Use data lineage with Google Cloud systems)

Section 6: Monitoring ML solutions (14%)

6.1 Identifying risks to ML solutions. Considerations include:

- Building secure ML systems (e.g., protecting against unintentional exploitation of data or models, hacking)

- Aligning with Googles Responsible AI practices (e.g., biases) (Google Documentation: Responsible AI, Understand and configure Responsible AI for Imagen)

- Assessing ML solution readiness (e.g., data bias, fairness) (Google Documentation: Inclusive ML guide – AutoML)

- Model explainability on Vertex AI (e.g., Vertex AI Prediction) (Google Documentation: Introduction to Vertex Explainable AI)

6.2 Monitoring, testing, and troubleshooting ML solutions. Considerations include:

- Establishing continuous evaluation metrics (e.g., Vertex AI Model Monitoring, Explainable AI) (Google Documentation: Introduction to Vertex AI Model Monitoring, Model evaluation in Vertex AI)

- Monitoring for training-serving skew (Google Documentation: Monitor feature skew and drift)

- Monitoring for feature attribution drift (Google Documentation: Monitor feature attribution skew and drift)

- Monitoring model performance against baselines, simpler models, and across the time dimension

- Common training and serving errors

Google Professional Machine Learning Engineer Exam FAQs

Exam Policy

Google exam policies are as follows:

Certification Renewal / Recertification:

To maintain your certified status, you need to go through recertification. Unless the detailed exam descriptions state otherwise, Google Cloud certifications remain valid for two years. Recertification involves retaking the exam and achieving a passing score within a specific time frame. You can initiate the recertification process 60 days before your certification expires.

Retake Exam:

Google takes steps to ensure the security of the certified user group by enforcing exam security and testing rules. Google Cloud is dedicated to consistently following program regulations. If you don’t pass an exam, you can retake it within 14 days. Failing the second attempt imposes a 60-day wait before your next try. Failing the third time means you must wait 365 days before attempting the exam again.

Cancellation and Reschedule Policy:

If you miss your exam, there’s no refund. Canceling less than 72 hours before an onsite exam or less than 24 hours before an online exam results in forfeiting the exam fee without a refund. Rescheduling within 72 hours of an onsite exam or within 24 hours of an online exam incurs a fee. You can choose a new exam date and time by logging into your Webassessor account, selecting “Register for an Exam,” and choosing “Reschedule/Cancel” from the Scheduled/In Progress Exams option.

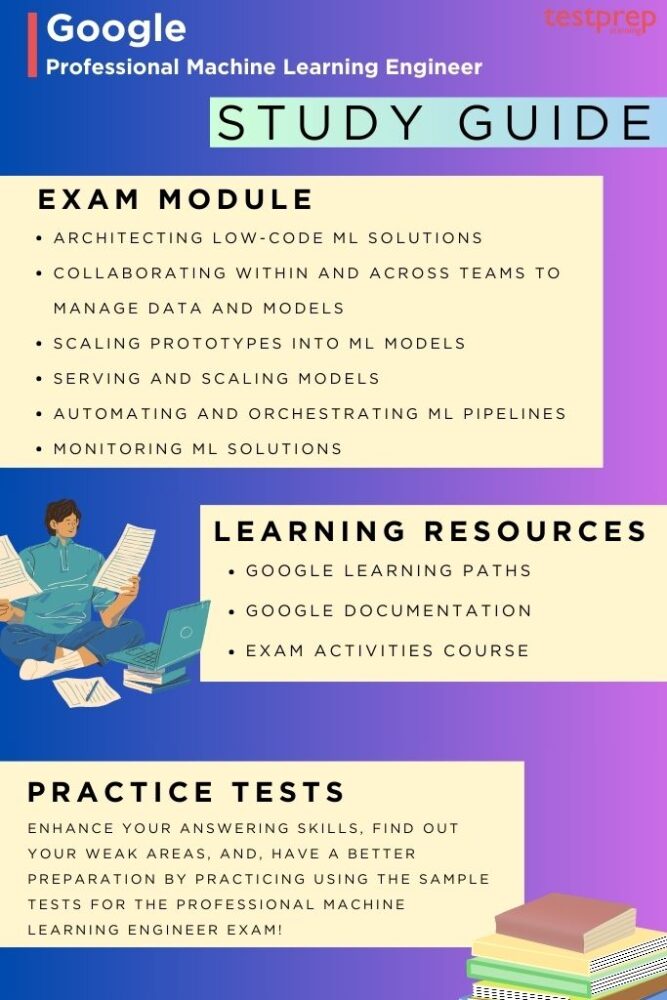

Google Professional Machine Learning Engineer Exam Study Guide

1. Get familiar with Exam Objectives

To start getting ready for the Professional Machine Learning Engineer exam, it’s important to know the exam objectives. These objectives consist of five main topics that make up significant parts of the exam. To get ready effectively, check out the exam guide to have a clearer grasp of these topics.

- Architecting low-code ML solutions

- Collaborating within and across teams to manage data and models

- Scaling prototypes into ML models

- Serving and scaling models

- Automating and orchestrating ML pipelines

- Monitoring ML solutions

2. Exploring the Machine Learning Engineer Learning Path

A Machine Learning Engineer is responsible for creating, developing, putting into action, improving, managing, and sustaining ML systems. This learning journey leads you through a handpicked set of courses, labs, and skill badges that offer practical, hands-on practice with Google Cloud technologies crucial for the ML Engineer position. After finishing the path, explore the Google Cloud Machine Learning Engineer certification to progress further in your professional adventure.

The learning path includes the following modules:

For more: https://www.cloudskillsboost.google/paths/17

– Google Cloud Hands-on Labs

In this initial practical session, you’ll enter the Google Cloud console and utilize fundamental Google Cloud elements such as Projects, Resources, IAM Users, Roles, Permissions, and APIs.

– Introduction to AI and Machine Learning on Google Cloud

This course presents the artificial intelligence (AI) and machine learning (ML) options on Google Cloud that assist in the journey from data to AI. It covers AI basics, the process of developing AI, and creating AI solutions. The course delves into the various technologies, products, and tools at your disposal for constructing an ML model, setting up an ML pipeline, and launching a generative AI project. It caters to the diverse needs of users, including data scientists, AI developers, and ML engineers.

– Launching into Machine Learning

The course begin with a talk on data—ways to enhance its quality and carry out exploratory data analysis. It covers Vertex AI AutoML, guiding you on constructing, training, and launching an ML model without needing to code. The advantages of Big Query ML are explained too. The course then delves into optimizing an ML model and the roles of generalization and sampling in evaluating the quality of custom training for ML models.

– TensorFlow on Google Cloud

The course includes creating a TensorFlow input data pipeline, constructing ML models with TensorFlow and Keras, enhancing model accuracy, scripting ML models for broader application, and crafting specialized ML models.

– Feature Engineering

This course looks into the advantages of employing Vertex AI Feature Store, methods to enhance ML model accuracy, and determining the most valuable data columns for features. It also covers lessons and hands-on exercises on feature engineering with BigQuery ML, Keras, and TensorFlow.

– Machine Learning in the Enterprise

In this course, we tackle the ML Workflow using a practical example. An ML team is presented with various business requirements and use cases. The team needs to grasp the tools needed for managing and governing data and figure out the most effective method for preprocessing the data.

– Production Machine Learning Systems

In this, we learn how to put different types of ML systems into action—static, dynamic, and continuous training, as well as static and dynamic inference, along with batch and online processing. We explore TensorFlow abstraction levels, the different choices for distributed training, and the process of creating distributed training models using custom estimators.

– Computer Vision Fundamentals with Google Cloud

This course explains various computer vision scenarios and explores diverse machine learning approaches to tackle them. The methods range from trying out pre-existing ML models and APIs to using AutoML Vision and constructing personalized image classifiers with linear models, deep neural network (DNN) models, or convolutional neural network (CNN) models.

The course guides you on enhancing a model’s accuracy through augmentation, feature extraction, and fine-tuning hyperparameters while being cautious about overfitting the data.

– Natural Language Processing on Google Cloud

In this course, you get to know the products and solutions that address NLP issues on Google Cloud. Moreover, it delves into the methods, strategies, and tools to create an NLP project with neural networks using Vertex AI and TensorFlow.

– Recommendation Systems on Google Cloud

In this, you put your understanding of classification models and embeddings into action by constructing a machine learning pipeline that operates as a recommendation engine.

Google Documentation

Google’s documentation is a thorough guide that helps users navigate the complexities of its products and services. Whether you’re a developer, a business owner, or just passionate about technology, Google’s documentation offers clear and detailed information on effectively using their technologies. The documentation spans various topics, encompassing API references, implementation guides, troubleshooting advice, and best practices. It’s user-friendly, providing step-by-step instructions and examples to ensure that users can easily understand and apply the information.

Practice Tests

Taking part in practice exams for the Google Professional Machine Learning Engineer is crucial to understanding the question format and potential topics that might come up. Beyond just getting used to the exam structure, these practice exams are vital for enhancing your preparation. They help you pinpoint your strengths and weaknesses, enabling you to concentrate on areas that require improvement. Additionally, practicing with these exams enhances your ability to respond quickly, ultimately saving valuable time in the real exam. To prepare for the test, check out online platforms to find the most useful practice exams.