Google Professional Data Engineer - Exam Update

A Professional Data Engineer ensures data becomes useful and valuable for others by collecting, transforming, and publishing it. This person assesses and chooses products and services to meet business and regulatory needs. The Professional Data Engineer is skilled in creating and overseeing reliable data processing systems, involving the design, construction, deployment, monitoring, maintenance, and security of data processing workloads.

Recommended experience:

It’s recommended to have 3+ years of industry experience, including at least 1 year of designing and managing solutions using Google Cloud.

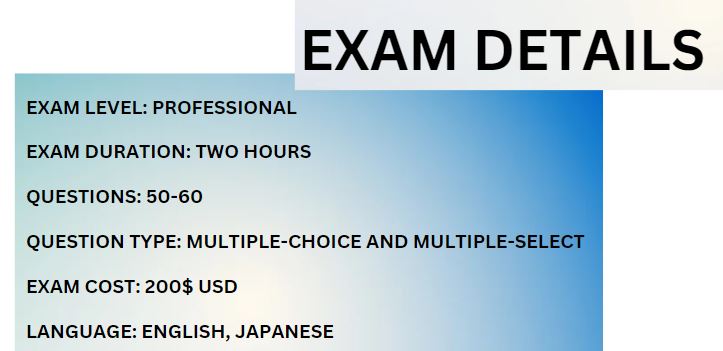

Exam Details

- The Google Professional Data Engineer exam is two hours long.

- The registration cost for the exam is $200 (plus applicable taxes).

- The exam is available in English and Japanese.

- The exam has 50-60 questions in a mix of multiple-choice and multiple-select formats.

- Take the online proctored exam from anywhere.

- Take the onsite-proctored exam at an authorized testing center.

Exam Registration

Here’s how you can schedule an exam:

- Visit the Google Cloud website and click on “Register” for the exam you want to take.

- Google Cloud certifications are available in various languages, and you can find the list on the exam page. If you’re a first-time test taker or prefer a localized language, create a new user account in Google Cloud’s Webassessor in that language.

- During registration, choose whether to take the exam online or at a nearby testing facility. The exam delivery methods are:

  - Taking the online-proctored exam from a remote location. Make sure to check the online testing requirements first.

  - Taking the onsite-proctored exam at a testing center. You can locate a test center near you.

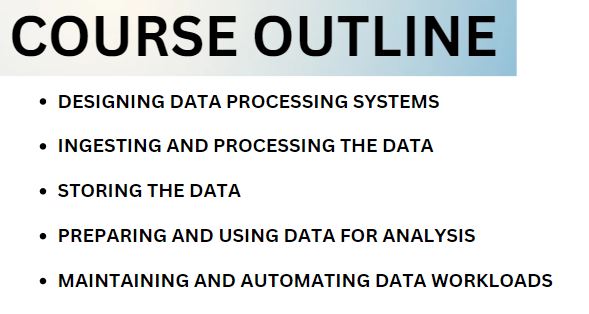

Exam Course Outline

The exam guide lists the topics that may appear on the test. Review it to confirm you are familiar with each one. The Professional Data Engineer exam covers the following sections:

Section 1: Designing data processing systems (22%)

1.1 Designing for security and compliance. Considerations include:

- Identity and Access Management (e.g., Cloud IAM and organization policies) (Google Documentation: Identity and Access Management)

- Data security (encryption and key management) (Google Documentation: Default encryption at rest)

- Privacy (e.g., personally identifiable information, and Cloud Data Loss Prevention API) (Google Documentation: Sensitive Data Protection, Cloud Data Loss Prevention)

- Regional considerations (data sovereignty) for data access and storage (Google Documentation: Implement data residency and sovereignty requirements)

- Legal and regulatory compliance

1.2 Designing for reliability and fidelity. Considerations include:

- Preparing and cleaning data (e.g., Dataprep, Dataflow, and Cloud Data Fusion) (Google Documentation: Cloud Data Fusion overview)

- Monitoring and orchestration of data pipelines (Google Documentation: Orchestrating your data workloads in Google Cloud)

- Disaster recovery and fault tolerance (Google Documentation: What is a Disaster Recovery Plan?)

- Making decisions related to ACID (atomicity, consistency, isolation, and durability) compliance and availability

- Data validation

1.3 Designing for flexibility and portability. Considerations include:

- Mapping current and future business requirements to the architecture

- Designing for data and application portability (e.g., multi-cloud and data residency requirements) (Google Documentation: Implement data residency and sovereignty requirements, Multicloud database management: Architectures, use cases, and best practices)

- Data staging, cataloging, and discovery (data governance) (Google Documentation: Data Catalog overview)

1.4 Designing data migrations. Considerations include:

- Analyzing current stakeholder needs, users, processes, and technologies and creating a plan to get to desired state

- Planning migration to Google Cloud (e.g., BigQuery Data Transfer Service, Database Migration Service, Transfer Appliance, Google Cloud networking, Datastream) (Google Documentation: Migrate to Google Cloud: Transfer your large datasets, Database Migration Service)

- Designing the migration validation strategy (Google Documentation: Migrate to Google Cloud: Best practices for validating a migration plan, About migration planning)

- Designing the project, dataset, and table architecture to ensure proper data governance (Google Documentation: Introduction to data governance in BigQuery, Create datasets)

Section 2: Ingesting and processing the data (25%)

2.1 Planning the data pipelines. Considerations include:

- Defining data sources and sinks (Google Documentation: Sources and sinks)

- Defining data transformation logic (Google Documentation: Introduction to data transformation)

- Networking fundamentals

- Data encryption (Google Documentation: Data encryption options)

2.2 Building the pipelines. Considerations include:

- Data cleansing

- Identifying the services (e.g., Dataflow, Apache Beam, Dataproc, Cloud Data Fusion, BigQuery, Pub/Sub, Apache Spark, Hadoop ecosystem, and Apache Kafka) (Google Documentation: Dataflow overview, Programming model for Apache Beam)

- Transformation:

  - Batch (Google Documentation: Get started with Batch)

  - Streaming (e.g., windowing, late arriving data)

  - Language

- Ad hoc data ingestion (one-time or automated pipeline) (Google Documentation: Design Dataflow pipeline workflows)

- Data acquisition and import (Google Documentation: Exporting and Importing Entities)

- Integrating with new data sources (Google Documentation: Integrate your data sources with Data Catalog)

2.3 Deploying and operationalizing the pipelines. Considerations include:

- Job automation and orchestration (e.g., Cloud Composer and Workflows) (Google Documentation: Choose Workflows or Cloud Composer for service orchestration, Cloud Composer overview)

- CI/CD (Continuous Integration and Continuous Deployment)

Section 3: Storing the data (20%)

3.1 Selecting storage systems. Considerations include:

- Analyzing data access patterns (Google Documentation: Data analytics and pipelines overview)

- Choosing managed services (e.g., Bigtable, Cloud Spanner, Cloud SQL, Cloud Storage, Firestore, Memorystore) (Google Documentation: Google Cloud database options)

- Planning for storage costs and performance (Google Documentation: Optimize cost: Storage)

- Lifecycle management of data (Google Documentation: Options for controlling data lifecycles)

3.2 Planning for using a data warehouse. Considerations include:

- Designing the data model (Google Documentation: Data model)

- Deciding the degree of data normalization (Google Documentation: Normalization)

- Mapping business requirements

- Defining architecture to support data access patterns (Google Documentation: Data analytics design patterns)

3.3 Using a data lake. Considerations include:

- Managing the lake (configuring data discovery, access, and cost controls) (Google Documentation: Manage a lake, Secure your lake)

- Processing data (Google Documentation: Data processing services)

- Monitoring the data lake (Google Documentation: What is a Data Lake?)

3.4 Designing for a data mesh. Considerations include:

- Building a data mesh based on requirements by using Google Cloud tools (e.g., Dataplex, Data Catalog, BigQuery, Cloud Storage) (Google Documentation: Build a data mesh, Build a modern, distributed Data Mesh with Google Cloud)

- Segmenting data for distributed team usage (Google Documentation: Network segmentation and connectivity for distributed applications in Cross-Cloud Network)

- Building a federated governance model for distributed data systems

Section 4: Preparing and using data for analysis (15%)

4.1 Preparing data for visualization. Considerations include:

- Connecting to tools

- Precalculating fields (Google Documentation: Introduction to materialized views)

- BigQuery materialized views (view logic) (Google Documentation: Create materialized views)

- Determining granularity of time data (Google Documentation: Filtering and aggregation: manipulating time series, Structure of Detailed data export)

- Troubleshooting poorly performing queries (Google Documentation: Diagnose issues)

- Identity and Access Management (IAM) and Cloud Data Loss Prevention (Cloud DLP) (Google Documentation: IAM roles)

4.2 Sharing data. Considerations include:

- Defining rules to share data (Google Documentation: Secure data exchange with ingress and egress rules)

- Publishing datasets (Google Documentation: BigQuery public datasets)

- Publishing reports and visualizations

- Analytics Hub (Google Documentation: Introduction to Analytics Hub)

4.3 Exploring and analyzing data. Considerations include:

- Preparing data for feature engineering (training and serving machine learning models)

- Conducting data discovery (Google Documentation: Discover data)

Section 5: Maintaining and automating data workloads (18%)

5.1 Optimizing resources. Considerations include:

- Minimizing costs per required business need for data (Google Documentation: Migrate to Google Cloud: Minimize costs)

- Ensuring that enough resources are available for business-critical data processes (Google Documentation: Disaster recovery planning guide)

- Deciding between persistent or job-based data clusters (e.g., Dataproc) (Google Documentation: Dataproc overview)

5.2 Designing automation and repeatability. Considerations include:

- Creating directed acyclic graphs (DAGs) for Cloud Composer (Google Documentation: Write Airflow DAGs, Add and update DAGs)

- Scheduling jobs in a repeatable way (Google Documentation: Schedule and run a cron job)

5.3 Organizing workloads based on business requirements. Considerations include:

- Flex, on-demand, and flat rate slot pricing (index on flexibility or fixed capacity) (Google Documentation: Introduction to workload management, Introduction to legacy reservations)

- Interactive or batch query jobs (Google Documentation: Run a query)

5.4 Monitoring and troubleshooting processes. Considerations include:

- Observability of data processes (e.g., Cloud Monitoring, Cloud Logging, BigQuery admin panel) (Google Documentation: Observability in Google Cloud, Introduction to BigQuery monitoring)

- Monitoring planned usage

- Troubleshooting error messages, billing issues, and quotas (Google Documentation: Troubleshoot quota errors, Troubleshoot quota and limit errors)

- Manage workloads, such as jobs, queries, and compute capacity (reservations) (Google Documentation: Workload management using Reservations)

5.5 Maintaining awareness of failures and mitigating impact. Considerations include:

- Designing system for fault tolerance and managing restarts (Google Documentation: Designing resilient systems)

- Running jobs in multiple regions or zones (Google Documentation: Serve traffic from multiple regions, Regions and zones)

- Preparing for data corruption and missing data (Google Documentation: Verifying end-to-end data integrity)

- Data replication and failover (e.g., Cloud SQL, Redis clusters) (Google Documentation: High availability and replicas)

Google Professional Data Engineer Exam FAQs

Exam Terms and Conditions

Certification Renewal / Recertification:

To keep your certification status, you have to go through recertification. Unless the detailed exam descriptions say otherwise, Google Cloud certifications are good for two years after you get them. Recertification involves retaking the exam and getting a passing score within a specific time frame. You can start the recertification process 60 days before your certification expires.

Retake Exam:

Google protects the value of its certifications by enforcing exam security and testing rules, and it applies the program regulations consistently. If you don’t pass an exam, you must wait 14 days before retaking it. If you don’t pass the second attempt, there’s a 60-day wait before your next try. Failing the third time means you need to wait 365 days before attempting the exam again.
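The waiting periods above come down to simple date arithmetic. The following is a minimal sketch, assuming each wait is a minimum counted from the date of the failed attempt; the function and constant names are hypothetical and are not part of any Google tool.

```python
from datetime import date, timedelta

# Waiting periods taken from the policy text above. Treating each one as a
# minimum wait counted from the failed attempt is an assumption of this sketch.
WAIT_DAYS_AFTER_FAILED_ATTEMPT = {1: 14, 2: 60, 3: 365}


def earliest_retake(failed_on: date, attempt_number: int) -> date:
    """Return the first date on which the next attempt could be scheduled."""
    if attempt_number not in WAIT_DAYS_AFTER_FAILED_ATTEMPT:
        raise ValueError("this sketch only covers attempts 1 to 3")
    wait = WAIT_DAYS_AFTER_FAILED_ATTEMPT[attempt_number]
    return failed_on + timedelta(days=wait)


if __name__ == "__main__":
    # A first attempt failed on 1 March 2024.
    print(earliest_retake(date(2024, 3, 1), 1))  # 2024-03-15
```

Always confirm the actual dates in your Webassessor account, since the policy can change.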

Cancellation and reschedule policy:

If you miss your exam, you won’t get your money back. If you cancel less than 72 hours before an onsite exam or less than 24 hours before an online exam, your exam fee is forfeited without a refund. Rescheduling within 72 hours of an onsite exam or within 24 hours of an online exam incurs a fee. You can set a new exam date and time by logging into your Webassessor account, choosing “Register for an Exam,” and selecting “Reschedule/Cancel” from the Scheduled/In Progress Exams option.

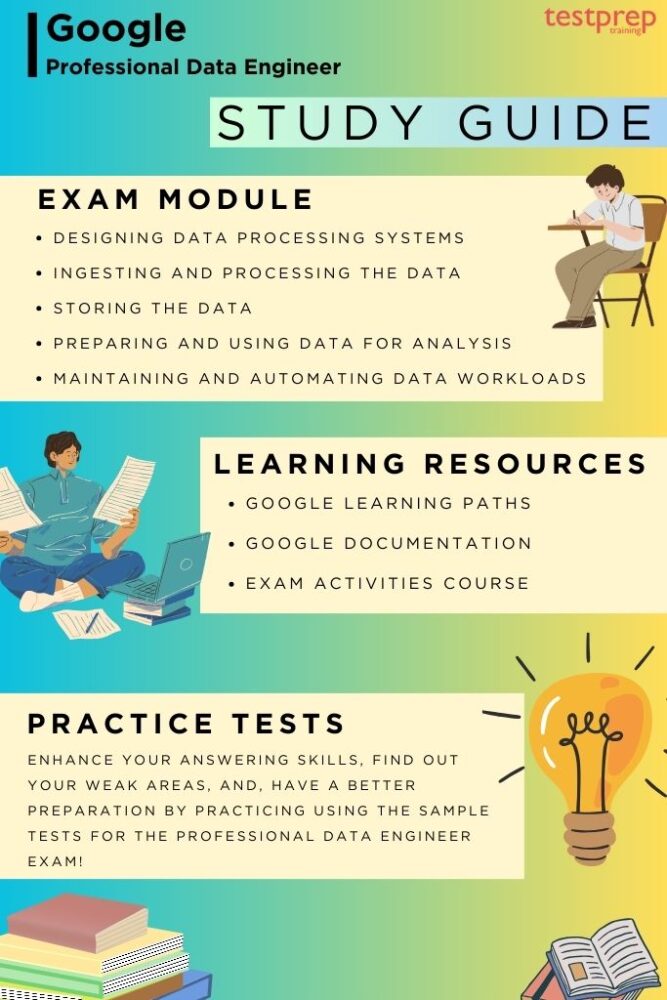

Study guide for Google Professional Data Engineer Exam

Getting Familiar with Exam Objectives

To kickstart your preparation for the Professional Data Engineer exam, it’s crucial to be familiar with the exam objectives. These objectives include five key topics that cover major sections of the exam. To prepare effectively, take a look at the exam guide to get a better understanding of the topics.

- Designing data processing systems

- Ingesting and processing the data

- Storing the data

- Preparing and using data for analysis

- Maintaining and automating data workloads
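One way to turn these objectives into a study plan is to split your available hours in proportion to the section weights given in the course outline (22%, 25%, 20%, 15%, 18%). The sketch below assumes that proportional allocation is a reasonable starting point; it is not an official recommendation, and the function name is hypothetical.

```python
# Section weights as listed in the course outline (percent of the exam).
SECTION_WEIGHTS = {
    "Designing data processing systems": 22,
    "Ingesting and processing the data": 25,
    "Storing the data": 20,
    "Preparing and using data for analysis": 15,
    "Maintaining and automating data workloads": 18,
}


def allocate_hours(total_hours: float) -> dict:
    """Split a study budget across sections in proportion to their weights."""
    total_weight = sum(SECTION_WEIGHTS.values())
    return {
        section: round(total_hours * weight / total_weight, 1)
        for section, weight in SECTION_WEIGHTS.items()
    }


if __name__ == "__main__":
    # Example: a 40-hour study budget.
    for section, hours in allocate_hours(40).items():
        print(f"{hours:5.1f} h  {section}")
```

Adjust the result toward your weaker areas; the weights describe the exam, not your starting knowledge.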

Exploring the Data Engineer Learning Path

A Data Engineer is responsible for creating systems that gather and process data for business decision-making. This learning path leads you through a carefully selected set of on-demand courses, labs, and skill badges. They offer practical, hands-on experience with Google Cloud technologies crucial for the Data Engineer role. After finishing the path, explore the Google Cloud Data Engineer certification as your next move in your professional journey.

The learning path includes the following modules (see the complete list at https://www.cloudskillsboost.google/paths/16):

– Google Cloud Hands-on Labs

In this initial hands-on lab, you’ll get into the Google Cloud console and get the hang of some fundamental Google Cloud features: Projects, Resources, IAM Users, Roles, Permissions, and APIs.

– Professional Data Engineer Journey

This course assists learners in making a study plan for the Professional Data Engineer (PDE) certification exam. They delve into the various domains included in the exam, understand the scope, and evaluate their readiness. Each learner then forms their own personalized study plan.

– Google Cloud Big Data and Machine Learning Fundamentals

In this course, you’ll get to know the Google Cloud products and services for big data and machine learning and the role they play in the data-to-AI lifecycle. The course looks into the steps involved, the challenges faced, and the advantages of constructing a big data pipeline and machine learning models using Vertex AI on Google Cloud.

– Modernizing Data Lakes and Data Warehouses with Google Cloud

Data pipelines consist of two crucial parts: data lakes and warehouses. In this course, we explore the specific use-cases for each storage type and delve into the technical details of the data lake and warehouse solutions on Google Cloud. Additionally, we discuss the role of a data engineer, the positive impacts of a well-functioning data pipeline on business operations, and why conducting data engineering in a cloud environment is essential.

– Building Batch Data Pipelines on Google Cloud

Data pipelines usually fit into one of three paradigms: Extract-Load, Extract-Load-Transform, or Extract-Transform-Load. In this course, we explain when to use each paradigm specifically for batch data. Additionally, we explore various technologies on Google Cloud for data transformation, such as BigQuery, running Spark on Dataproc, creating pipeline graphs in Cloud Data Fusion, and accomplishing serverless data processing with Dataflow. Learners will gain practical experience by actively building data pipeline components on Google Cloud through Qwiklabs.
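The difference between the three paradigms is mostly a question of where, and whether, the transformation runs. The sketch below is a toy illustration in plain Python: in-memory lists stand in for the source system and a dictionary stands in for the warehouse, and every function and table name is hypothetical. It does not use any Google Cloud API.

```python
def clean(rows: list) -> list:
    """Hypothetical transform: drop rows without an amount, upper-case currency."""
    return [
        {**row, "currency": row["currency"].upper()}
        for row in rows
        if row.get("amount") is not None
    ]


def extract_load(source: list, warehouse: dict) -> None:
    """EL: copy the source data into the target unchanged."""
    warehouse["orders"] = list(source)


def extract_load_transform(source: list, warehouse: dict, transform) -> None:
    """ELT: load the raw data first, then transform it inside the target."""
    warehouse["orders_raw"] = list(source)
    warehouse["orders"] = transform(warehouse["orders_raw"])


def extract_transform_load(source: list, warehouse: dict, transform) -> None:
    """ETL: transform in the pipeline and load only the result."""
    warehouse["orders"] = transform(source)


if __name__ == "__main__":
    source = [
        {"amount": 10, "currency": "usd"},
        {"amount": None, "currency": "eur"},
    ]
    warehouse = {}
    extract_load_transform(source, warehouse, clean)
    print(sorted(warehouse))  # ['orders', 'orders_raw']
```

Note that only ELT keeps the raw copy in the target, which is why it suits cases where the transformation can be expressed and rerun inside the warehouse itself.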

– Building Resilient Streaming Analytics Systems on Google Cloud

Streaming data processing is gaining popularity because it allows businesses to obtain real-time metrics on their operations. In this course, we explain how to construct streaming data pipelines on Google Cloud. We delve into the use of Pub/Sub to manage incoming streaming data. The course also guides you on applying aggregations and transformations to streaming data with Dataflow, and storing the processed records in BigQuery or Cloud Bigtable for analysis. Learners will have the opportunity to actively build components of streaming data pipelines on Google Cloud through hands-on experience with Qwiklabs.

– Smart Analytics, Machine Learning, and AI on Google Cloud

Integrating machine learning into data pipelines enhances a business’s ability to gain insights from its data. This course explores various methods of incorporating machine learning into data pipelines on Google Cloud, depending on the desired level of customization. For minimal customization, AutoML is covered. For more personalized machine learning capabilities, the course introduces Notebooks and BigQuery machine learning (BigQuery ML). Additionally, the course guides you on how to operationalize machine learning solutions using Vertex AI. Learners will actively build machine learning models on Google Cloud through hands-on experience with Qwiklabs.

– Serverless Data Processing with Dataflow: Foundations

This is the initial course in a series of three, focusing on Serverless Data Processing with Dataflow. In this first part, we kick off with a quick review of Apache Beam and its connection to Dataflow. We discuss the vision behind Apache Beam and the advantages of the Beam Portability framework. This framework fulfills the vision that developers can use their preferred programming language with their chosen execution backend. We then demonstrate how Dataflow allows you to separate compute and storage, leading to cost savings. Additionally, we explore how identity and access management (IAM) tools interact with your Dataflow pipelines. Finally, we delve into implementing the appropriate security model for your use case on Dataflow.

– Serverless Data Processing with Dataflow: Develop Pipelines

In the second part of the Dataflow course series, we take a closer look at developing pipelines using the Beam SDK. To begin, we refresh our understanding of Apache Beam concepts. Following that, we explore processing streaming data by delving into windows, watermarks, and triggers. The course covers various options for sources and sinks in your pipelines, using schemas to express structured data, and implementing stateful transformations through State and Timer APIs. We then go on to review best practices that enhance your pipeline’s performance. Towards the end of the course, we introduce SQL and Dataframes as tools to represent your business logic in Beam, and we also explore how to iteratively develop pipelines using Beam notebooks.
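To make windows and watermarks more concrete before taking the course, here is a minimal plain-Python sketch of fixed-window counting with late data. It is not the Beam SDK: the watermark is simplified to "the largest event time seen so far", and the window size, function names, and `allowed_lateness` parameter are assumptions chosen for illustration.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # assumed fixed window size for this sketch


def window_start(event_time: int) -> int:
    """Map an event timestamp (in seconds) to the start of its fixed window."""
    return event_time - (event_time % WINDOW_SECONDS)


def count_per_window(events, allowed_lateness: int = 0):
    """Count events per fixed window, dropping data that arrives too late.

    `events` is an iterable of non-negative event timestamps in arrival
    order. A window is considered closed once the watermark has passed its
    end plus the allowed lateness.
    """
    counts = defaultdict(int)
    dropped = []
    watermark = 0
    for event_time in events:
        window_end = window_start(event_time) + WINDOW_SECONDS
        if window_end + allowed_lateness <= watermark:
            dropped.append(event_time)  # window already closed: late data
        else:
            counts[window_start(event_time)] += 1
        watermark = max(watermark, event_time)
    return dict(counts), dropped


if __name__ == "__main__":
    arrivals = [5, 30, 65, 50, 130, 58]  # 50 and 58 arrive out of order
    print(count_per_window(arrivals))
    print(count_per_window(arrivals, allowed_lateness=30))
```

With no allowed lateness both out-of-order events are dropped; with 30 seconds of allowed lateness the event at 50 is still counted in the first window, while the one at 58 arrives after the window has closed.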

– Serverless Data Processing with Dataflow: Operations

In the final part of the Dataflow course series, we’ll introduce the components of the Dataflow operational model. We’ll explore tools and techniques for troubleshooting and improving pipeline performance. Next, we’ll go over best practices for testing, deployment, and ensuring reliability in Dataflow pipelines. The course will wrap up with a review of Templates, a feature that simplifies scaling Dataflow pipelines for organizations with many users. These lessons aim to guarantee that your data platform remains stable and resilient, even in unexpected situations.

Google Documentation

Google’s documentation serves as a comprehensive resource that guides users through the intricacies of its products and services. Whether you’re a developer, a business owner, or an enthusiast, Google’s documentation provides clear and detailed information on how to utilize their technologies effectively. The documentation covers a wide range of topics, including API references, implementation guides, troubleshooting tips, and best practices. It is designed to be user-friendly, offering step-by-step instructions and examples to ensure that users can easily grasp and implement the information provided.

Practice Tests

Taking Google Professional Data Engineer practice exams is essential for getting a feel for the question format and likely exam topics. Beyond familiarizing you with the exam structure, practice exams help you identify your strengths and weaknesses so you can focus on the areas that need improvement. They also train you to answer efficiently, which saves valuable time during the actual exam. To get ready for the test, explore online platforms to find the most effective practice exams.