Databricks Certified Associate Developer for Apache Spark 3.0

The Databricks Certified Associate Developer for Apache Spark 3.0 certification is awarded by Databricks Academy. The exam evaluates a candidate’s basic understanding of the Spark architecture and the ability to use the Spark DataFrame API to complete individual data manipulation tasks within a Spark session. These tasks include the following:

- Selecting, renaming, and manipulating columns

- Filtering, dropping, sorting, and aggregating rows

- Handling missing data

- Combining, reading, writing and partitioning DataFrames with schemas

- Working with UDFs and Spark SQL functions

In addition, the exam assesses the basics of the Spark architecture, such as execution/deployment modes, the execution hierarchy, fault tolerance, garbage collection, and broadcasting.

Exam Details

| Item | Details |
| --- | --- |
| Number of Questions | 60 |
| Exam Format | Multiple-choice questions |
| Exam Duration | 120 minutes |
| Passing Score | 70% and above (42 of the 60 questions) |
| Exam Type | Online proctored exam |
| Language | Python or Scala |
| Application Fee | 200 USD |

Prerequisites

The qualified candidate should:

- have an elementary understanding of the Spark architecture, including Adaptive Query Execution

- be able to apply the Spark DataFrame API to complete individual data manipulation tasks, including:

  - selecting, renaming, and manipulating columns

  - filtering, dropping, sorting, and aggregating rows

  - joining, reading, writing, and partitioning DataFrames

  - working with UDFs and Spark SQL functions

Schedule your Exam

- Create an account at (or log in to) https://academy.databricks.com.

- Click the Certifications tab to see all available certification exams.

- Click the Register button for the exam you would like to take.

- Follow the on-screen prompts to schedule your exam.

Reschedule/Retake your Exam

- If you need to reschedule your exam, and it’s more than 24 hours from the start time, please log in to your Webassessor account and reschedule. If you need to reschedule your exam, and it’s within 24 hours of the start time, please contact Kryterion.

- You can re-register and retake the exam as many times as you like. Each attempt costs $200.

For more information, see the Databricks Certified Associate Developer for Apache Spark 3.0 FAQs.

Learning Outcomes

- Architecture of an Apache Spark Application

- Learn to run Apache Spark on a cluster of computers

- Learn the Execution Hierarchy of Apache Spark

- Create DataFrames from files and Scala collections

- Spark DataFrame API and SQL functions

- Various techniques to select the columns of a DataFrame

- Define the schema of a DataFrame and set the data types of the columns

- Apply various methods to manipulate the columns of a DataFrame

- Filter a DataFrame based on specific rules

- Sort the rows of a DataFrame in a specific order

- Arrange the rows of a DataFrame into groups

- Handle NULL Values in a DataFrame

- Use JOIN or UNION to combine two data sets

- Save the result of complex data transformations to an external storage system

- Various deployment modes of an Apache Spark Application

- Working with UDFs and Spark SQL functions

- Use Databricks Community Edition to write Apache Spark code

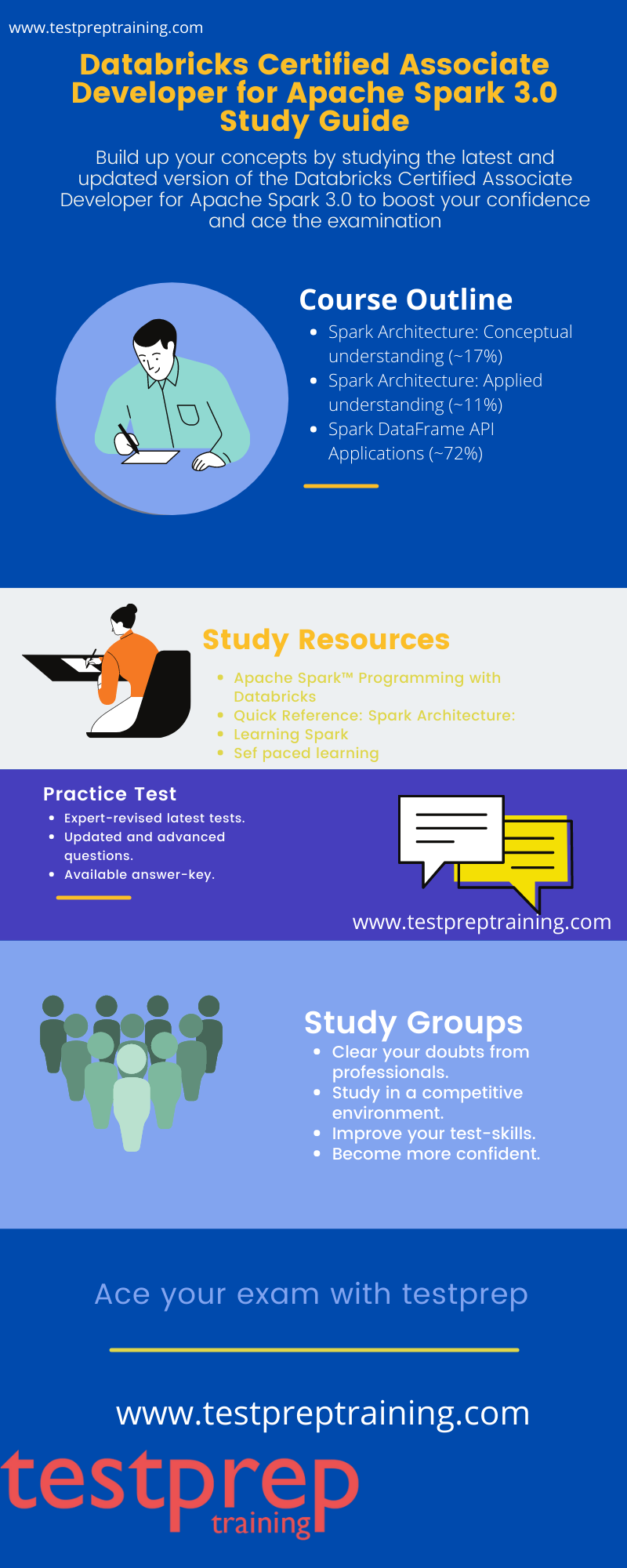

Databricks Certified Associate Developer for Apache Spark 3.0 Study Guide

Preparing for the exam takes dedication and the right information. The internet is full of study materials, handbooks, and practice papers, so rather than following the crowd, look for material that is up to date and expert-reviewed. The right materials, combined with good guidance, are the key to success. Because candidates are often unsure which resources to trust, the steps below lay out a clear path to help you pass the exam.

Getting Familiar with the Course Outline

- Spark Architecture: Conceptual understanding (~17%)

- Spark Architecture: Applied understanding (~11%)

- Spark DataFrame API Applications (~72%)
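To get a feel for what these weights mean on a 60-question exam, the arithmetic can be sketched as below. The per-topic counts are only estimates derived from the approximate percentages; the exact number of questions per topic is an assumption, not something the outline states.

```python
TOTAL_QUESTIONS = 60
PASSING_PERCENT = 70

# Approximate topic weights (in percent) from the course outline.
weights = {
    "Spark Architecture: Conceptual understanding": 17,
    "Spark Architecture: Applied understanding": 11,
    "Spark DataFrame API Applications": 72,
}

# Estimated question count per topic, rounded to the nearest whole question.
approx_questions = {
    topic: round(percent * TOTAL_QUESTIONS / 100)
    for topic, percent in weights.items()
}

# Number of correct answers needed to reach the passing score.
passing_questions = round(PASSING_PERCENT * TOTAL_QUESTIONS / 100)

for topic, count in approx_questions.items():
    print(f"{topic}: about {count} questions")
print(f"Correct answers needed to pass: {passing_questions}")
```

On these estimates, the DataFrame API section alone accounts for roughly 43 questions, slightly more than the 42 correct answers needed to pass.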

Study Resources

- Apache Spark Programming with Databricks: This course explores the fundamentals of Spark programming with Databricks, including Spark architecture, the DataFrame API, query optimization, Structured Streaming, and Delta. It is a two-day course. The course objectives are as follows:

  - Define the major components of Spark architecture and execution hierarchy

  - Explain how DataFrames are built, transformed, and evaluated in Spark

  - Apply the DataFrame API to explore, preprocess, join, and ingest data in Spark

  - Apply the Structured Streaming API to perform analytics on streaming data

  - Navigate the Spark UI and describe how the Catalyst optimizer, partitioning, and caching affect Spark’s execution performance

- Quick Reference: Spark Architecture: Apache Spark is a unified analytics engine for large-scale data processing, known for its speed, ease and breadth of use, ability to access diverse data sources, and APIs built to support a wide range of use cases. This course provides an overview of Spark’s internal architecture. The learning objectives of this course are as follows:

  - Describe basic Spark architecture and define terminology such as “driver” and “executor”.

  - Explain how parallelization allows Spark to improve the speed and scalability of an application.

  - Describe lazy evaluation and how it relates to pipelining.

  - Identify high-level events for each stage in the optimization process.

- Learning Spark: This book explains how to perform simple and complex data analytics and employ machine learning algorithms. Through step-by-step walk-throughs, code snippets, and notebooks, you’ll be able to:

  - Learn Python, SQL, Scala, or Java high-level Structured APIs

  - Understand Spark operations and SQL Engine

  - Inspect, tune, and debug Spark operations with Spark configurations and Spark UI

  - Connect to data sources: JSON, Parquet, CSV, Avro, ORC, Hive, S3, or Kafka

  - Perform analytics on batch and streaming data using Structured Streaming

  - Build reliable data pipelines with open source Delta Lake and Spark

  - Develop machine learning pipelines with MLlib and productionize models using MLflow

- Self-paced learning: The courses included in this learning bundle are listed in alphabetical order, so you can choose the course you want.

Join Online Study Groups

Joining an online study group is one of the best ways to improve your study skills. Any exam demands two things: speed and accuracy. Whether you join such a group is entirely your decision, but even top scorers recommend study groups as a way to improve both. These groups give you access to expert assistance and let you clear up doubts with peers as well as professionals. A professional’s guidance also helps you stay up to date with any changes to the exam. We therefore highly recommend that every candidate join an online study group.

Take Practice Tests

Finally, taking practice tests is the most important step in preparing for any exam. We want our users to have the best study material, so we have prepared up-to-date, expert-reviewed practice tests for the Databricks Certified Associate Developer for Apache Spark 3.0 exam. These tests help you check your preparation level, build confidence, improve your time management, and become familiar with the different types of questions commonly asked in the exam. Our practice test covers questions from basic to advanced levels in a systematic manner, and you can take it right now. Keep working and ace the exam.