Google Associate Data Practitioner

The Associate Data Practitioner secures and manages data on Google Cloud. The role calls for hands-on experience with Google Cloud’s data services for data ingestion, transformation, pipeline orchestration, analysis, visualization, and machine learning. Candidates are expected to have foundational knowledge of cloud computing models, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). The Associate Data Practitioner exam evaluates your skills in:

- Preparing and ingesting data

- Analyzing and visualizing data

- Orchestrating data workflows

- Managing data effectively

Recommended Experience:

Candidates should have at least six months of hands-on experience working with data on Google Cloud. This includes familiarity with Google Cloud’s data services, tools, and technologies, as well as practical knowledge of data-related tasks such as ingestion, processing, analysis, and visualization within the Google Cloud environment.

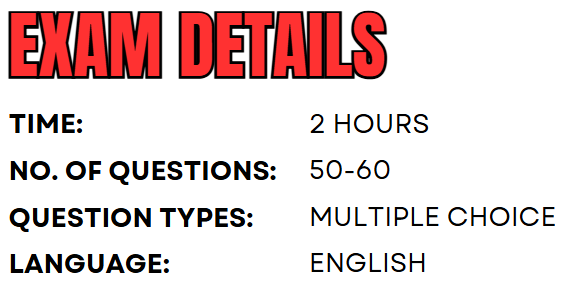

Exam Details

The Google Associate Data Practitioner exam is a 2-hour assessment, available in English, with no prerequisites. It consists of 50–60 multiple-choice and multiple-select questions.

Exam Delivery Methods:

- Online-proctored exam taken remotely.

- Onsite-proctored exam taken at a testing center; you can search for a test center near you.

Course Outline

This course outline provides a detailed framework to help you prepare effectively for the Google Associate Data Practitioner exam, covering essential topics, skills, and practical applications.

Section 1: Data Preparation and Ingestion (30%)

1.1 Prepare and process data.

Considerations include:

- Differentiate between data manipulation methodologies (e.g., ETL, ELT, ETLT)

- Choose the appropriate data transfer tool (e.g., Storage Transfer Service, Transfer Appliance)

- Assess data quality

- Conduct data cleaning (e.g., Cloud Data Fusion, BigQuery, SQL, Dataflow)
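To make the ETL/ELT distinction concrete, here is a minimal Python sketch. It uses the standard-library `sqlite3` module as a local stand-in for a warehouse such as BigQuery; the table names and sample rows are hypothetical. ETL cleans the data in application code before loading it, while ELT loads the raw rows first and cleans them with SQL inside the warehouse. (ETLT, not shown, applies a light transformation before loading and does the heavier work afterward.) The `assess_quality` helper illustrates the kind of simple checks, such as missing values and duplicates, that usually come before cleaning.

```python
import sqlite3

# Hypothetical raw export: one missing amount, stray whitespace, one exact duplicate.
RAW_ROWS = [
    ("o1", "alice", "19.99"),
    ("o2", "bob", ""),
    ("o3", "carol", " 5.00 "),
    ("o3", "carol", " 5.00 "),
]


def assess_quality(rows):
    """Count simple quality problems before deciding how to clean."""
    missing = sum(1 for _, _, amount in rows if not amount.strip())
    duplicates = len(rows) - len(set(rows))
    return {"rows": len(rows), "missing_amount": missing, "duplicates": duplicates}


def etl(rows, conn):
    """ETL: transform in application code, then load only the clean rows."""
    # float() tolerates surrounding whitespace; the set removes exact duplicates.
    clean = {(oid, cust, float(amt)) for oid, cust, amt in rows if amt.strip()}
    conn.execute("CREATE TABLE orders_etl (order_id TEXT, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", sorted(clean))


def elt(rows, conn):
    """ELT: load raw rows as-is, then transform with SQL inside the 'warehouse'."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", rows)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT DISTINCT order_id, customer, CAST(TRIM(amount) AS REAL) AS amount
        FROM orders_raw
        WHERE TRIM(amount) <> ''
        """
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(assess_quality(RAW_ROWS))
    etl(RAW_ROWS, conn)
    elt(RAW_ROWS, conn)
    print(conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall())
```

Both paths end with the same clean rows; the difference is where the transformation runs. ELT keeps the raw table available for reprocessing and pushes the work onto the warehouse's SQL engine, which is why it pairs naturally with BigQuery.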

1.2 Extract and load data into appropriate Google Cloud storage systems.

Considerations include:

- Distinguish the format of the data (e.g., CSV, JSON, Apache Parquet, Apache Avro, structured database tables)

- Choose the appropriate extraction tool (e.g., Dataflow, BigQuery Data Transfer Service, Database Migration Service, Cloud Data Fusion)

- Select the appropriate storage solution (e.g., Cloud Storage, BigQuery, Cloud SQL, Firestore, Bigtable, Spanner, AlloyDB)

- Choose the appropriate data storage location type (e.g., regional, dual-regional, multi-regional, zonal)

- Classify use cases into having structured, unstructured, or semi-structured data requirements

- Load data into Google Cloud storage systems using the appropriate tool (e.g., the gcloud and bq CLIs, Storage Transfer Service, BigQuery Data Transfer Service, client libraries)
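Distinguishing data formats can often start with the first few bytes of a file. The Python sketch below is a heuristic that assumes you pass a sample from the start of each file: Parquet files begin with the magic bytes `PAR1`, Avro object container files begin with `Obj` followed by the byte `0x01`, and JSON and CSV are recognized from their text. The `STRUCTURE` mapping reflects one common convention (schema-based and delimited formats as structured, JSON as semi-structured, everything else as unstructured); Parquet and Avro can also hold nested data, so treat it as a starting point rather than a rule.

```python
import csv
import io

PARQUET_MAGIC = b"PAR1"   # Parquet files start (and end) with "PAR1"
AVRO_MAGIC = b"Obj\x01"   # Avro object container files start with "Obj" + 0x01


def sniff_format(sample: bytes) -> str:
    """Guess a file's format from its leading bytes (heuristic, not exhaustive)."""
    if sample.startswith(PARQUET_MAGIC):
        return "parquet"
    if sample.startswith(AVRO_MAGIC):
        return "avro"
    try:
        text = sample.decode("utf-8")
    except UnicodeDecodeError:
        return "binary"
    # Covers both JSON arrays and newline-delimited JSON objects.
    if text.lstrip().startswith(("{", "[")):
        return "json"
    # Call it CSV only if there are several rows with the same number (>1) of fields.
    rows = [r for r in csv.reader(io.StringIO(text)) if r]
    if len(rows) > 1 and len(rows[0]) > 1 and len({len(r) for r in rows}) == 1:
        return "csv"
    return "text"


# One common way to bucket formats when choosing a storage system.
STRUCTURE = {
    "parquet": "structured",
    "avro": "structured",
    "csv": "structured",
    "json": "semi-structured",
    "text": "unstructured",
    "binary": "unstructured",
}


if __name__ == "__main__":
    for sample in (b"PAR1\x15\x04", b'{"id": 1}\n{"id": 2}\n', b"id,name\n1,alice\n"):
        fmt = sniff_format(sample)
        print(fmt, STRUCTURE[fmt])
```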

Section 2: Data Analysis and Presentation (27%)

2.1 Identify data trends, patterns, and insights by using BigQuery and Jupyter notebooks.

Considerations include:

- Define and execute SQL queries in BigQuery to generate reports and extract key insights

- Use Jupyter notebooks to analyze and visualize data (e.g., Colab Enterprise)

- Analyze data to answer business questions
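A reporting query in BigQuery is usually an aggregation over a fact table. The sketch below runs such a query with Python's built-in `sqlite3` as a local stand-in, so the `sales` table and its rows are hypothetical. The query groups revenue by month and region; `substr` is used because the dates are stored as text in this sketch, whereas in BigQuery's GoogleSQL you would typically apply `DATE_TRUNC(sale_date, MONTH)` to a `DATE` column.

```python
import sqlite3
from contextlib import closing

# Hypothetical sales rows: (sale_date as ISO text, region, amount).
SALES = [
    ("2024-01-05", "EMEA", 120.0),
    ("2024-01-20", "AMER", 200.0),
    ("2024-02-03", "EMEA", 80.0),
    ("2024-02-14", "EMEA", 40.0),
    ("2024-02-28", "AMER", 310.0),
]

# Order count and revenue per month and region, highest revenue first within each month.
REPORT_SQL = """
SELECT substr(sale_date, 1, 7) AS month,
       region,
       COUNT(*) AS orders,
       SUM(amount) AS revenue
FROM sales
GROUP BY month, region
ORDER BY month, revenue DESC
"""


def monthly_revenue_report(rows):
    """Load rows into an in-memory table (stand-in for BigQuery) and run the report."""
    with closing(sqlite3.connect(":memory:")) as conn:
        conn.execute("CREATE TABLE sales (sale_date TEXT, region TEXT, amount REAL)")
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
        return conn.execute(REPORT_SQL).fetchall()


if __name__ == "__main__":
    for row in monthly_revenue_report(SALES):
        print(row)
```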

2.2 Visualize data and create dashboards in Looker given business requirements.

Considerations include:

- Create, modify, and share dashboards to answer business questions

- Compare Looker and Looker Studio for different analytics use cases

- Manipulate simple LookML parameters to modify a data model

2.3 Define, train, evaluate, and use ML models.

Considerations include:

- Identify ML use cases for developing models by using BigQuery ML and AutoML

- Use pretrained Google large language models (LLMs) through a remote connection in BigQuery

- Plan a standard ML project (e.g., data collection, model training, model evaluation, prediction)

- Execute SQL to create, train, and evaluate models using BigQuery ML

- Perform inference using BigQuery ML models

- Organize models in Model Registry
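In BigQuery ML, a binary classifier is typically created with `CREATE MODEL ... OPTIONS(model_type = 'LOGISTIC_REG', ...)`, evaluated with `ML.EVALUATE`, and used for inference with `ML.PREDICT`. For a logistic regression model, `ML.EVALUATE` reports metrics that include precision, recall, accuracy, and F1 score. The Python sketch below recomputes those four metrics from labels and predicted probabilities so their meaning is concrete; it is an illustration, not BigQuery ML's implementation, and the 0.5 threshold is simply this sketch's default.

```python
from dataclasses import dataclass


@dataclass
class BinaryMetrics:
    precision: float
    recall: float
    accuracy: float
    f1_score: float


def evaluate_binary(labels, probabilities, threshold=0.5):
    """Compute threshold-based classification metrics from 0/1 labels and scores."""
    if not labels or len(labels) != len(probabilities):
        raise ValueError("labels and probabilities must be non-empty and equal length")
    tp = fp = tn = fn = 0
    for label, prob in zip(labels, probabilities):
        predicted = 1 if prob >= threshold else 0
        if predicted and label:
            tp += 1          # true positive
        elif predicted:
            fp += 1          # false positive
        elif label:
            fn += 1          # false negative
        else:
            tn += 1          # true negative
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / len(labels)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return BinaryMetrics(precision, recall, accuracy, f1)


if __name__ == "__main__":
    print(evaluate_binary([1, 0, 1, 1, 0], [0.9, 0.6, 0.4, 0.8, 0.2]))
```

Raising the threshold generally trades recall for precision, which is why a model's evaluation should be read against the business question it answers.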

Section 3: Data Pipeline Orchestration (18%)

3.1 Design and implement simple data pipelines.

Considerations include:

- Select a data transformation tool (e.g., Dataproc, Dataflow, Cloud Data Fusion, Cloud Composer, Dataform) based on business requirements

- Evaluate use cases for ELT and ETL

- Choose products required to implement basic transformation pipelines

3.2 Schedule, automate, and monitor basic data processing tasks.

Considerations include:

- Create and manage scheduled queries (e.g., BigQuery, Cloud Scheduler, Cloud Composer)

- Monitor Dataflow pipeline progress using the Dataflow job UI

- Review and analyze logs in Cloud Logging and Cloud Monitoring

- Select a data orchestration solution (e.g., Cloud Composer, scheduled queries, Dataproc Workflow Templates, Workflows) based on business requirements

- Identify use cases for event-driven data ingestion from Pub/Sub to BigQuery

- Use Eventarc triggers in event-driven pipelines (Dataform, Dataflow, Cloud Functions, Cloud Run, Cloud Composer)
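For event-driven ingestion, a common pattern is a Pub/Sub push subscription (or an Eventarc trigger) that delivers messages to a Cloud Run service or Cloud Function, which then writes each message to BigQuery. The sketch below covers only the decoding step: in the push format, the request body wraps the message in a `message` object whose `data` field is base64-encoded, alongside `attributes`, `messageId`, and `publishTime`. The output row schema and the `eventType` attribute are hypothetical. A real service would then insert the row, for example with the `google-cloud-bigquery` client's `insert_rows_json`; when no transformation is needed, a Pub/Sub BigQuery subscription can write messages to a table without any code.

```python
import base64
import json


def pubsub_push_to_row(envelope: dict) -> dict:
    """Turn a Pub/Sub push request body into a flat row for a BigQuery table.

    The output columns (message_id, publish_time, event_type, plus the payload's
    own fields) are a hypothetical schema chosen for this example.
    """
    message = envelope["message"]
    raw = message.get("data", "")
    # The message payload is base64-encoded; here it is assumed to be UTF-8 JSON.
    payload = json.loads(base64.b64decode(raw).decode("utf-8")) if raw else {}
    return {
        "message_id": message.get("messageId"),
        "publish_time": message.get("publishTime"),
        "event_type": message.get("attributes", {}).get("eventType"),
        **payload,
    }


if __name__ == "__main__":
    body = {
        "message": {
            "data": base64.b64encode(b'{"order_id": "o1", "amount": 19.99}').decode("ascii"),
            "attributes": {"eventType": "order.created"},
            "messageId": "123",
            "publishTime": "2024-01-05T10:00:00Z",
        },
        "subscription": "projects/my-project/subscriptions/orders-push",
    }
    print(pubsub_push_to_row(body))
```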

Section 4: Data Management (25%)

4.1 Configure access control and governance.

Considerations include:

- Apply the principle of least-privilege access by using Identity and Access Management (IAM)

- Differentiate between basic roles, predefined roles, and permissions for data services (e.g., BigQuery, Cloud Storage)

- Compare methods of access control for Cloud Storage (e.g., public or private access, uniform access)

- Determine when to share data using Analytics Hub
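Least-privilege reviews often start by looking for basic roles (`roles/owner`, `roles/editor`, `roles/viewer`), which grant broad access across all services in a project, in places where a predefined role such as `roles/bigquery.dataViewer` or `roles/storage.objectViewer` would be enough. The Python sketch below scans an IAM policy in the JSON shape returned by `gcloud projects get-iam-policy PROJECT_ID --format=json` (a `bindings` list of `role` and `members` entries); the sample members are hypothetical.

```python
# Basic roles apply project-wide across services, so they are rarely least privilege.
BASIC_ROLES = {"roles/owner", "roles/editor", "roles/viewer"}


def find_basic_role_bindings(policy: dict) -> list:
    """Return (role, member) pairs in an IAM policy that use basic roles."""
    findings = []
    for binding in policy.get("bindings", []):
        role = binding.get("role")
        if role in BASIC_ROLES:
            for member in binding.get("members", []):
                findings.append((role, member))
    return findings


if __name__ == "__main__":
    sample_policy = {
        "bindings": [
            {"role": "roles/editor", "members": ["user:analyst@example.com"]},
            {"role": "roles/bigquery.dataViewer", "members": ["group:analysts@example.com"]},
            {"role": "roles/storage.objectViewer", "members": ["serviceAccount:etl@example.iam.gserviceaccount.com"]},
        ]
    }
    print(find_basic_role_bindings(sample_policy))
```

Each finding is a candidate for replacement with a narrower predefined role, ideally granted to a group rather than an individual user.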

4.2 Configure lifecycle management.

Considerations include:

- Determine the appropriate Cloud Storage classes based on the frequency of data access and retention requirements

- Configure rules that delete objects after a specified period to automatically remove unnecessary data and reduce storage costs (e.g., BigQuery, Cloud Storage)

- Evaluate Google Cloud services for archiving data given business requirements
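Google's guidance ties the Nearline, Coldline, and Archive storage classes to data accessed roughly no more than once a month, once a quarter, and once a year, matching their 30-, 90-, and 365-day minimum storage durations. The Python sketch below turns that rule of thumb into a helper and builds the `rule` list used in Cloud Storage lifecycle configuration JSON (a `SetStorageClass` action and a `Delete` action, each with an `age` condition in days). The function names are hypothetical, and the exact file wrapper each CLI accepts should be checked against the Cloud Storage lifecycle documentation. For BigQuery, the equivalent of a delete rule is a default table or partition expiration.

```python
import json

# Minimum storage duration (days) for each Cloud Storage class.
MIN_STORAGE_DAYS = {"STANDARD": 0, "NEARLINE": 30, "COLDLINE": 90, "ARCHIVE": 365}


def suggest_storage_class(days_between_reads: float) -> str:
    """Pick the coldest class whose minimum duration fits the typical gap between reads."""
    for storage_class in ("ARCHIVE", "COLDLINE", "NEARLINE"):
        if days_between_reads >= MIN_STORAGE_DAYS[storage_class]:
            return storage_class
    return "STANDARD"


def lifecycle_config(transition_days: int, storage_class: str, delete_days: int) -> dict:
    """Build lifecycle rules: change storage class after N days, delete after M days."""
    if storage_class not in MIN_STORAGE_DAYS:
        raise ValueError(f"unknown storage class: {storage_class}")
    if delete_days <= transition_days:
        raise ValueError("delete_days must be later than transition_days")
    return {
        "rule": [
            {
                "action": {"type": "SetStorageClass", "storageClass": storage_class},
                "condition": {"age": transition_days},
            },
            {"action": {"type": "Delete"}, "condition": {"age": delete_days}},
        ]
    }


if __name__ == "__main__":
    print(suggest_storage_class(45))
    print(json.dumps(lifecycle_config(30, "NEARLINE", 365), indent=2))
```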

4.3 Identify high availability and disaster recovery strategies for data in Cloud Storage and Cloud SQL.

Considerations include:

- Compare backup and recovery solutions offered as Google-managed services

- Determine when to use replication

- Distinguish between primary and secondary data storage location type (e.g., regions, dual-regions, multi-regions, zones) for data redundancy

4.4 Apply security measures and ensure compliance with data privacy regulations.

Considerations include:

- Identify use cases for customer-managed encryption keys (CMEK), customer-supplied encryption keys (CSEK), and Google-managed encryption keys (GMEK)

- Understand the role of Cloud Key Management Service (Cloud KMS) to manage encryption keys

- Identify the difference between encryption in transit and encryption at rest

Google Associate Data Practitioner Exam FAQs

Exam Policies

Below are some of Google’s exam-related policies:

Recertification:

The certification is valid for three years from the date it is earned. To keep it active, you must recertify by retaking and passing the exam during the recertification period, which begins 60 days before your current certification expires.

Exam Scoring:

Google Cloud exams assess whether candidates meet the established minimum passing standard and report only a pass or fail result. Because the exams are not designed for diagnostic purposes or to rank candidates by ability, numerical scores are not provided, since they could be misinterpreted.

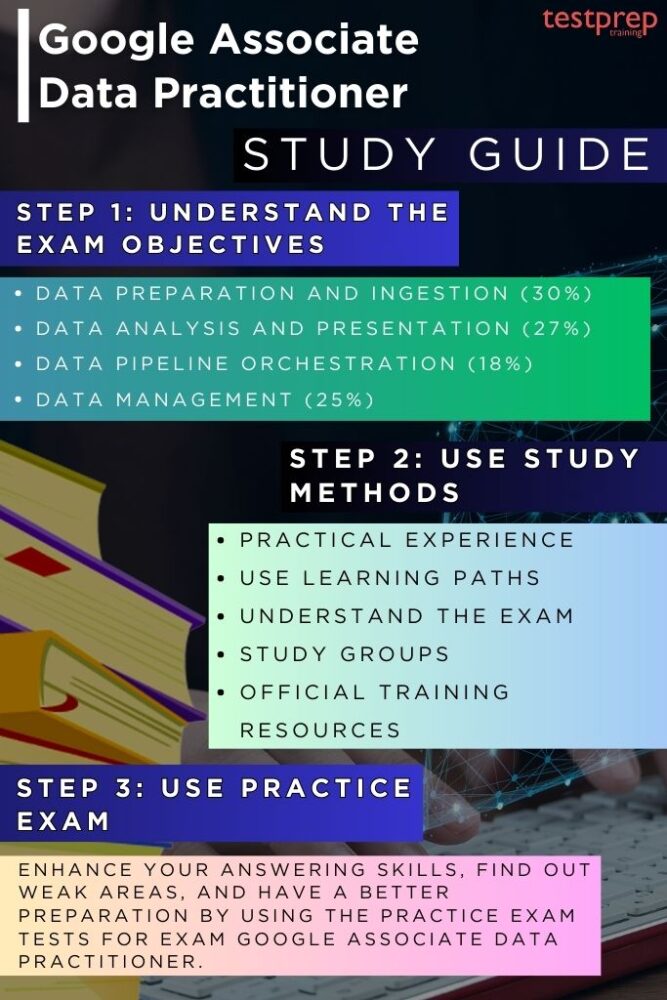

Google Associate Data Practitioner Exam Study Guide

1. Gain Practical, Real-World Experience

Before taking the Associate Data Practitioner exam, aim to gain at least six months of hands-on experience working with Google Cloud. This practical exposure familiarizes you with Google Cloud’s core services, tools, and workflows. Real-world projects build a strong understanding of data management tasks such as ingestion, processing, pipeline orchestration, analysis, and visualization within the Google Cloud environment, and help you apply theoretical knowledge to the practical scenarios presented on the exam.

2. Understand What’s on the Exam

It is crucial to familiarize yourself with the exam structure and the topics it covers. The Associate Data Practitioner exam assesses your knowledge and skills across several key areas, including data preparation and ingestion, data analysis and visualization, orchestrating data pipelines, and managing data effectively within Google Cloud. Understanding the exam’s scope will help you identify which topics require more focus based on your current expertise.

To better understand the exam content, review the official exam guide provided by Google Cloud. This guide outlines the domains covered, along with the weight of each domain, giving you insight into the areas with the most significant impact on your overall performance. Familiarizing yourself with the format and question style will help you approach the exam with confidence and clarity.
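Because the guide publishes a weight for each section, you can turn the weights into a rough question budget to plan study time. The Python sketch below assumes, for illustration only, that questions are distributed exactly in proportion to the section weights across the 50–60 question range; the actual mix on any given exam may differ.

```python
# Section weights from the exam outline, in percent of the exam.
WEIGHTS = {
    "Data Preparation and Ingestion": 30,
    "Data Analysis and Presentation": 27,
    "Data Pipeline Orchestration": 18,
    "Data Management": 25,
}


def estimated_questions(total_questions: int) -> dict:
    """Approximate questions per section, assuming a strictly proportional split."""
    return {name: total_questions * pct / 100 for name, pct in WEIGHTS.items()}


if __name__ == "__main__":
    for total in (50, 60):
        estimates = estimated_questions(total)
        print(total, {name: round(n, 1) for name, n in estimates.items()})
```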

3. Enhance Your Skills with Comprehensive Training

To effectively prepare for the Associate Data Practitioner exam, it is essential to expand and strengthen your skill set by using various training resources offered by Google Cloud. These resources are designed to provide a well-rounded learning experience, equipping you with both theoretical knowledge and practical expertise.

Explore online training courses that cover the exam domains in depth and let you learn at your own pace. If you prefer interactive learning, in-person classes let you work with experienced instructors and get your questions answered in real time. Hands-on labs offer a practical way to master Google Cloud tools and services by simulating real-world scenarios in a controlled environment. Google Cloud also provides learning paths that include courses such as:

- Introduction to Data Engineering on Google Cloud

- Derive Insights from BigQuery Data

- Prepare Data for Looker Dashboards and Reports

- Introduction to AI and Machine Learning on Google Cloud

- Baseline: Infrastructure

- Optimizing Cost with Google Cloud Storage

- Implement Cloud Security Fundamentals on Google Cloud

4. Join Study Groups for Collaborative Learning

Joining study groups can significantly enhance your preparation for the Associate Data Practitioner exam. Rather than preparing in isolation, participating in study groups allows you to tap into collective knowledge and different perspectives, often revealing insights that you might not encounter on your own. Working with others exposes you to a variety of problem-solving approaches, and discussing complex concepts can deepen your understanding of key topics like data ingestion, pipeline orchestration, and Google Cloud services.

Moreover, study groups create a sense of accountability, which is essential for maintaining a disciplined study schedule. Regularly interacting with peers not only keeps you motivated but also provides the opportunity to test your knowledge through group discussions and mock exams. If you’re stuck on a particular topic or concept, group members can offer clarifications or different explanations that might resonate better with you.

5. Take Practice Exams to Build Confidence and Identify Knowledge Gaps

Taking practice exams is an essential part of your preparation for the Associate Data Practitioner exam. Practice exams simulate the testing environment, familiarizing you with the format, question types, and time constraints you’ll face on exam day. Taking them regularly lets you track your progress, build confidence, and develop a strategy for managing your time during the real exam.

In addition to improving your test-taking skills, practice exams help identify areas where you may have gaps in your knowledge. By reviewing incorrect answers, you can pinpoint which concepts or skills require further study and focus. This targeted approach allows you to allocate your study time more efficiently, ensuring that you’re well-prepared for all sections of the exam.