AWS Certified Data Analytics Specialty (DAS-C01)

AWS Certified Data Analytics – Specialty (DAS-C01) tests a candidate’s ability to design, build, secure, and maintain analytics solutions on AWS. It is an industry-recognized credential from AWS that validates a candidate’s skills in AWS data lakes and analytics services. The exam is intended for individuals who perform data analytics roles.

The AWS Certified Data Analytics – Specialty exam validates an examinee’s ability to:

- Explain the AWS data analytics services and understand how they integrate with each other.

- Describe how AWS data analytics services fit into the data lifecycle of collection, storage, processing, and visualization.

Recommended Knowledge and Experience

Candidates for the AWS Certified Data Analytics – Specialty exam should have:

- At least five years of experience with data analytics technologies and two years of hands-on experience working with AWS.

- Familiarity and experience with AWS services for designing, building, securing, and maintaining analytics solutions.

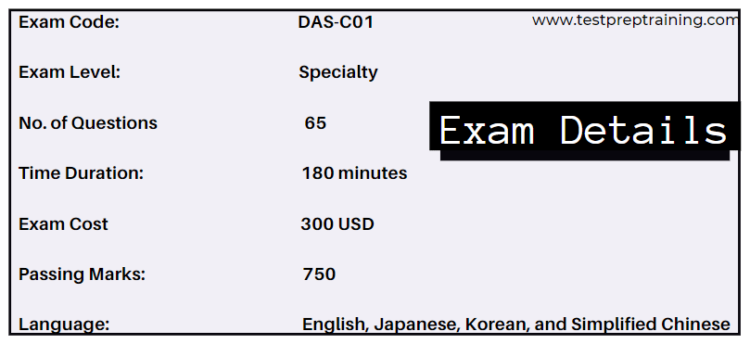

AWS Exam Details

AWS Certified Data Analytics – Specialty is a Specialty-level exam with 65 questions, each either multiple choice or multiple response. Candidates have 180 minutes to complete it. Passing requires a scaled score of at least 750 on a scale of 100 to 1000. The exam has two delivery methods: a testing center or an online proctored exam. It costs 300 USD (practice exam: 40 USD) and is available in English, Japanese, Korean, and Simplified Chinese.

Signing up for AWS Exam

AWS Certification helps individuals develop skills and knowledge by validating cloud expertise with an industry-recognized credential. Signing up for an AWS Certification account lets candidates:

- Schedule and manage exams

- View certification history

- Access digital badges

- Take sample exam tests

- View certification benefits

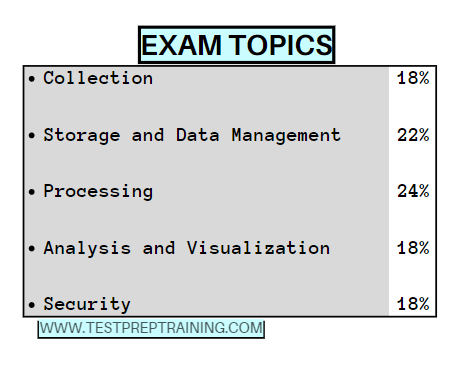

AWS Exam Course Outline

AWS provides an exam guide for the AWS Certified Data Analytics – Specialty (DAS-C01) exam that lists the exam objectives. The exam covers the following topics:

Domain 1: Collection

1.1 Determine the operational characteristics of the collection system

- Evaluate that the data loss is within tolerance limits in the event of failures (AWS Documentation: Fault tolerance, Failure Management)

- Evaluate costs associated with data acquisition, transfer, and provisioning from various sources into the collection system (e.g., networking, bandwidth, ETL/data migration costs) (AWS Documentation: Cloud Data Migration, Plan for Data Transfer, Amazon EC2 FAQs)

- Assess the failure scenarios that the collection system may undergo, and take remediation actions based on impact (AWS Documentation: Remediating Noncompliant AWS Resources, CIS AWS Foundations Benchmark controls, Failure Management)

- Determine data persistence at various points of data capture (AWS Documentation: Capture data)

- Identify the latency characteristics of the collection system (AWS Documentation: I/O characteristics and monitoring, Amazon CloudWatch concepts)

1.2 Select a collection system that handles the frequency, volume, and the source of data

- Describe and characterize the volume and flow characteristics of incoming data (streaming, transactional, batch) (AWS Documentation: Characteristics, streaming data)

- Match flow characteristics of data to potential solutions

- Assess the tradeoffs between various ingestion services taking into account scalability, cost, fault tolerance, latency, etc. (AWS Documentation: Amazon EMR FAQs, Data ingestion methods)

- Explain the throughput capability of a variety of different types of data collection and identify bottlenecks (AWS Documentation: Caching Overview, I/O characteristics and monitoring)

- Choose a collection solution that satisfies connectivity constraints of the source data system

1.3 Select a collection system that addresses the key properties of data, such as order, format, and compression

- Describe how to capture data changes at the source (AWS Documentation: Capture changes from Amazon DocumentDB, Creating tasks for ongoing replication using AWS DMS, Using change data capture)

- Discuss data structure and format, compression applied, and encryption requirements (AWS Documentation: Compression encodings, Athena compression support)

- Distinguish the impact of out-of-order delivery of data, duplicate delivery of data, and the tradeoffs between at-most-once, exactly-once, and at-least-once processing (AWS Documentation: Amazon SQS FIFO (First-In-First-Out) queues, Amazon Simple Queue Service)

- Describe how to transform and filter data during the collection process (AWS Documentation: Transform Data, Filter class)

Domain 2: Storage and Data Management

2.1 Determine the operational characteristics of the storage solution for analytics

- Determine the appropriate storage service(s) on the basis of cost vs. performance (AWS Documentation: Amazon S3 pricing, Storage Architecture Selection)

- Understand the durability, reliability, and latency characteristics of the storage solution based on requirements (AWS Documentation: Storage, Selection)

- Determine the requirements of a system for strong vs. eventual consistency of the storage system (AWS Documentation: Amazon S3 Strong Consistency, Consistency Model)

- Determine the appropriate storage solution to address data freshness requirements (AWS Documentation: Storage, Storage Architecture Selection)

2.2 Determine data access and retrieval patterns

- Determine the appropriate storage solution based on update patterns (e.g., bulk, transactional, micro batching) (AWS Documentation: select your storage solution, Performing large-scale batch operations, Batch data processing)

- Determine the appropriate storage solution based on access patterns (e.g., sequential vs. random access, continuous usage vs. ad hoc) (AWS Documentation: optimizing Amazon S3 performance, Amazon S3 FAQs)

- Determine the appropriate storage solution to address change characteristics of data (append-only changes vs. updates)

- Determine the appropriate storage solution for long-term storage vs. transient storage (AWS Documentation: Storage, Using Amazon S3 storage classes)

- Determine the appropriate storage solution for structured vs. semi-structured data (AWS Documentation: Ingesting and querying semistructured data in Amazon Redshift, Storage Best Practices for Data and Analytics Applications)

- Determine the appropriate storage solution to address query latency requirements (AWS Documentation: In-place querying, Performance Guidelines for Amazon S3, Storage Architecture Selection)

2.3 Select appropriate data layout, schema, structure, and format

- Determine appropriate mechanisms to address schema evolution requirements (AWS Documentation: Handling schema updates, Best practices for securing sensitive data in AWS data stores)

- Select the storage format for the task (AWS Documentation: Task definition parameters, Specifying task settings for AWS Database Migration Service tasks)

- Select the compression/encoding strategies for the chosen storage format (AWS Documentation: Choosing compression encodings for the CUSTOMER table, Compression encodings)

- Select the data sorting and distribution strategies and the storage layout for efficient data access (AWS Documentation: Best practices for using sort keys to organize data, Working with data distribution styles)

- Explain the cost and performance implications of different data distributions, layouts, and formats (e.g., size and number of files) (AWS Documentation: optimizing Amazon S3 performance)

- Implement data formatting and partitioning schemes for data-optimized analysis (AWS Documentation: Partitioning data in Athena, Partitions and data distribution)

2.4 Define data lifecycle based on usage patterns and business requirements

- Determine the strategy to address data lifecycle requirements (AWS Documentation: Amazon Data Lifecycle Manager)

- Apply the lifecycle and data retention policies to different storage solutions (AWS Documentation: Setting lifecycle configuration on a bucket, Managing your storage lifecycle)

2.5 Determine the appropriate system for cataloging data and managing metadata

- Evaluate mechanisms for discovery of new and updated data sources (AWS Documentation: Discovering on-premises resources using AWS discovery tools)

- Evaluate mechanisms for creating and updating data catalogs and metadata (AWS Documentation: Catalog and search, Data cataloging)

- Explain mechanisms for searching and retrieving data catalogs and metadata (AWS Documentation: Understanding tables, databases, and the Data Catalog)

- Explain mechanisms for tagging and classifying data (AWS Documentation: Data Classification, Data classification overview)

Domain 3: Processing

3.1 Determine appropriate data processing solution requirements

- Understand data preparation and usage requirements (AWS Documentation: Data Preparation, Preparing data in Amazon QuickSight)

- Understand different types of data sources and targets (AWS Documentation: Targets for data migration, Sources for data migration)

- Evaluate performance and orchestration needs (AWS Documentation: Performance Efficiency)

- Evaluate appropriate services for cost, scalability, and availability (AWS Documentation: High availability and scalability on AWS)

3.2 Design a solution for transforming and preparing data for analysis

- Apply appropriate ETL/ELT techniques for batch and real-time workloads (AWS Documentation: ETL and ELT design patterns for lake house architecture)

- Implement failover, scaling, and replication mechanisms (AWS Documentation: Disaster recovery options in the cloud, Working with read replicas)

- Implement techniques to address concurrency needs (AWS Documentation: Managing Lambda reserved concurrency, Managing Lambda provisioned concurrency)

- Implement techniques to improve cost-optimization efficiencies (AWS Documentation: Cost Optimization)

- Apply orchestration workflows (AWS Documentation: AWS Step Functions)

- Aggregate and enrich data for downstream consumption (AWS Documentation: Joining and Enriching Streaming Data on Amazon Kinesis, Designing a High-volume Streaming Data Ingestion Platform Natively on AWS)

3.3 Automate and operationalize data processing solutions

- Implement automated techniques for repeatable workflows

- Apply methods to identify and recover from processing failures (AWS Documentation: Failure Management, Recover your instance)

- Deploy logging and monitoring solutions to enable auditing and traceability (AWS Documentation: Enable Auditing and Traceability)

Domain 4: Analysis and Visualization

4.1 Determine the operational characteristics of the analysis and visualization solution

- Determine costs associated with analysis and visualization (AWS Documentation: Analyzing your costs with AWS Cost Explorer)

- Determine scalability associated with analysis (AWS Documentation: Predictive scaling for Amazon EC2 Auto Scaling)

- Determine failover recovery and fault tolerance within the RPO/RTO (AWS Documentation: Plan for Disaster Recovery (DR))

- Determine the availability characteristics of an analysis tool (AWS Documentation: Analytics)

- Evaluate dynamic, interactive, and static presentations of data (AWS Documentation: Data Visualization, Use static and dynamic device hierarchies)

- Translate performance requirements to an appropriate visualization approach (pre-compute and consume static data vs. consume dynamic data)

4.2 Select the appropriate data analysis solution for a given scenario

- Evaluate and compare analysis solutions (AWS Documentation: Evaluating a solution version with metrics)

- Select the right type of analysis based on the customer use case (streaming, interactive, collaborative, operational)

4.3 Select the appropriate data visualization solution for a given scenario

- Evaluate output capabilities for a given analysis solution (metrics, KPIs, tabular, API) (AWS Documentation: Using KPIs, Using Amazon CloudWatch metrics)

- Choose the appropriate method for data delivery (e.g., web, mobile, email, collaborative notebooks) (AWS Documentation: Amazon SageMaker Studio Notebooks architecture, Ensure efficient compute resources on Amazon SageMaker)

- Choose and define the appropriate data refresh schedule (AWS Documentation: Refreshing SPICE data, Refreshing data in Amazon QuickSight)

- Choose appropriate tools for different data freshness requirements (e.g., Amazon Elasticsearch Service vs. Amazon QuickSight vs. Amazon EMR notebooks) (AWS Documentation: Amazon EMR, Choosing the hardware for your Amazon EMR cluster, Build an automatic data profiling and reporting solution)

- Understand the capabilities of visualization tools for interactive use cases (e.g., drill down, drill through, and pivot) (AWS Documentation: Adding drill-downs to visual data in Amazon QuickSight, Using pivot tables)

- Implement the appropriate data access mechanism (e.g., in memory vs. direct access) (AWS Documentation: Security Best Practices for Amazon S3, Identity and access management in Amazon S3)

- Implement an integrated solution from multiple heterogeneous data sources (AWS Documentation: Data Sources and Ingestion)

Domain 5: Security

5.1 Select appropriate authentication and authorization mechanisms

- Implement appropriate authentication methods (e.g., federated access, SSO, IAM) (AWS Documentation: Identity and access management for IAM Identity Center)

- Implement appropriate authorization methods (e.g., policies, ACL, table/column level permissions) (AWS Documentation: Managing access permissions for AWS Glue resources, Policies and permissions in IAM)

- Implement appropriate access control mechanisms (e.g., security groups, role-based control) (AWS Documentation: Implement access control mechanisms)

5.2 Apply data protection and encryption techniques

- Determine data encryption and masking needs (AWS Documentation: Protecting Data at Rest, Protecting data using client-side encryption)

- Apply different encryption approaches (server-side encryption, client-side encryption, AWS KMS, AWS CloudHSM) (AWS Documentation: AWS Key Management Service FAQs, Protecting data using server-side encryption, Cryptography concepts)

- Implement at-rest and in-transit encryption mechanisms (AWS Documentation: Encrypting Data-at-Rest and -in-Transit)

- Implement data obfuscation and masking techniques (AWS Documentation: Data masking using AWS DMS, Create a secure data lake by masking)

- Apply basic principles of key rotation and secrets management (AWS Documentation: Rotate AWS Secrets Manager secrets)

5.3 Apply data governance and compliance controls

- Determine data governance and compliance requirements (AWS Documentation: Management and Governance)

- Understand and configure access and audit logging across data analytics services (AWS Documentation: Monitoring audit logs in Amazon OpenSearch Service)

- Implement appropriate controls to meet compliance requirements (AWS Documentation: Security and compliance)

For More: Check AWS Certified Data Analytics Specialty (DAS-C01) Exam FAQs

AWS Exam Policies

Amazon Web Services (AWS) publishes exam policies that describe the terms and procedures of its certification exams, including details about exam training and certification. Some of these policies are:

Exam Retake Policy

AWS states that candidates who do not pass the exam must wait 14 days before they are eligible to retake it. There is no limit on the number of attempts until the exam is passed, but the full registration price must be paid for every attempt.
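The retake rule is simple date arithmetic. The following is a minimal Python sketch, not an official AWS tool: the function names are hypothetical, the fee is the 300 USD registration price quoted in the exam details, and it assumes the waiting period is counted in calendar days from the date of the failed attempt.

```python
from datetime import date, timedelta

RETAKE_WAIT_DAYS = 14   # waiting period stated in the retake policy
EXAM_FEE_USD = 300      # registration price quoted in the exam details


def earliest_retake_date(failed_on: date) -> date:
    """Return the first date a retake is allowed (assumes calendar days)."""
    return failed_on + timedelta(days=RETAKE_WAIT_DAYS)


def total_fees(attempts: int) -> int:
    """The full registration price is paid for every attempt."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return attempts * EXAM_FEE_USD


print(earliest_retake_date(date(2024, 3, 1)))  # 2024-03-15
print(total_fees(3))                            # 900
```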

Exam Rescheduling

To reschedule or cancel an exam, follow these steps:

- Sign in to aws.training/Certification.

- Select the Go to your Account button.

- Select the Manage PSI or Pearson VUE Exams button.

- You will be redirected to the PSI or Pearson VUE dashboard.

- If the exam is scheduled with PSI, click View Details for the scheduled exam. If it is scheduled with Pearson VUE, select the exam in the Upcoming Appointments menu.

- Note that an exam can be rescheduled up to 24 hours before the scheduled exam time, and each exam appointment can be rescheduled only twice. To move the appointment a third time, candidates must cancel the exam and then schedule it again for a convenient date.
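The two rescheduling limits (the 24-hour cutoff and the cap of two reschedules per appointment) can be expressed as a small check. This is an illustrative Python sketch only: `can_reschedule` and its parameters are hypothetical names, and it assumes a request made exactly 24 hours ahead still qualifies.

```python
from datetime import datetime, timedelta

RESCHEDULE_CUTOFF = timedelta(hours=24)  # latest point at which a change is allowed
MAX_RESCHEDULES = 2                      # each appointment can be rescheduled twice


def can_reschedule(now: datetime, exam_time: datetime, times_rescheduled: int) -> bool:
    """Return True if the appointment may still be rescheduled."""
    within_window = exam_time - now >= RESCHEDULE_CUTOFF
    under_limit = times_rescheduled < MAX_RESCHEDULES
    return within_window and under_limit


exam = datetime(2024, 6, 10, 9, 0)
print(can_reschedule(datetime(2024, 6, 8, 9, 0), exam, 0))   # True
print(can_reschedule(datetime(2024, 6, 9, 12, 0), exam, 0))  # False: under 24 hours left
print(can_reschedule(datetime(2024, 6, 1, 9, 0), exam, 2))   # False: already moved twice
```

When the check fails because the limit is reached, the remaining option described in the policy is to cancel the appointment and book a new one.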

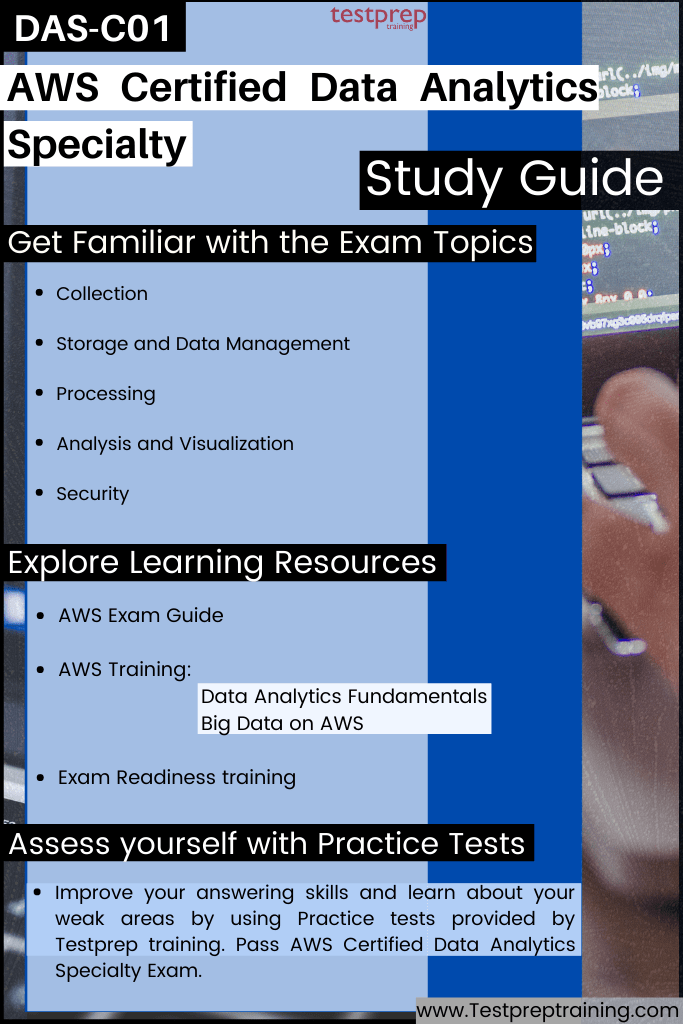

AWS Certified Data Analytics Specialty Study Guide

1. Exam Objectives

Start with the exam objectives to get a clear picture of the concepts involved. AWS provides a list of topics covering the exam domains for the AWS Certified Data Analytics Specialty exam. Going through this list will help you get familiar with the topics and sub-topics so that you can plan your preparation accordingly.

2. AWS Exam Guide

The AWS Certified Data Analytics – Specialty exam guide contains the topics and details related to the exam. It describes the intended audience and the knowledge required for the exam, so candidates can use it to check whether they have the required skills and experience.

3. AWS Training

AWS helps candidates develop technical skills by recommending courses for each exam. For the AWS Certified Data Analytics – Specialty (DAS-C01) exam, the recommended courses include:

Data Analytics Fundamentals

This course introduces data analysis solutions and data analytics processes. It covers five key factors that indicate the need for specific AWS services for collecting, processing, analyzing, and presenting data. Its objectives include:

- Identifying the characteristics of data analysis solutions and the characteristics that indicate such a solution is needed.

- Explaining types of data, including structured, semistructured, and unstructured data.

- Explaining data storage types such as data lakes, AWS Lake Formation, data warehouses, and the Amazon Simple Storage Service (Amazon S3).

- Examining the characteristics of, and differences between, batch and stream processing.

- Explaining how Amazon Kinesis is used to process streaming data.

- Examining the characteristics of different storage systems for source data, and analyzing the differences between row-based and columnar data storage methods.

Big Data on AWS

This course provides knowledge and understanding of cloud-based big data solutions such as Amazon EMR, Amazon Redshift, and the rest of the AWS big data platform. It shows how to use Amazon EMR to process data with the broad ecosystem of Hadoop tools such as Hive and Hue. The course also helps with:

- Learning and understanding the process of creating big data environments

- Working with services such as Amazon DynamoDB, Amazon Redshift, Amazon QuickSight, and Amazon Kinesis

- Applying best practices for designing big data environments for security and cost-effectiveness

4. Exam Readiness training by AWS

Exam Readiness training helps you learn how to interpret exam questions and allocate your study time. For the AWS Certified Data Analytics – Specialty exam, AWS offers the following course:

Exam Readiness: AWS Certified Data Analytics Specialty

This course helps you understand the exam’s topics and become familiar with the question format and exam approach. It covers:

- Understanding the examination process

- Getting familiar with the exam pattern and question types

- Identifying how questions relate to AWS data analytics concepts

- Explaining the concepts being tested by exam questions

- Developing a study plan for exam preparation

5. Practice Tests

Taking a practice test works as a self-assessment for identifying gaps in knowledge and skills. AWS Certified Data Analytics – Specialty practice tests also support revision and help you learn the pattern of the questions so that you are not caught off guard during the exam. Start taking AWS Certified Data Analytics – Specialty practice tests to strengthen your preparation.