Microsoft Fabric unifies data engineering, data science, and business intelligence in a single, integrated platform, and it is quickly becoming central to modern data analytics. The DP-700: Implementing Data Engineering Solutions Using Microsoft Fabric exam validates the skills needed to work with it, testing your ability to design, implement, and optimize data pipelines and analytical workloads. This guide walks through the core components of Microsoft Fabric, from the foundational OneLake to Synapse Data Engineering, Data Warehousing, Real-Time Analytics, and the integration with Power BI. Whether you’re an experienced data engineer looking to consolidate your expertise or an aspiring professional learning modern data solutions, it aims to give you the knowledge and practical insight you need to pass the DP-700 exam and build data engineering solutions in the Microsoft Fabric ecosystem.

Microsoft Fabric: Unified Data Analytics for Modern Enterprises

Microsoft Fabric is a powerful, all-in-one analytics platform designed to streamline data engineering, data science, real-time analytics, and business intelligence. By integrating multiple data tools and services into a single ecosystem, Fabric eliminates the need for disconnected systems, making data management and analysis more efficient. Organizations can leverage its capabilities to unify their data workflows, improve collaboration, and drive data-driven decision-making.

– Key Features of Microsoft Fabric

1. Unified Analytics Ecosystem

Microsoft Fabric consolidates various data disciplines into a single platform, enabling seamless integration across:

- Data Engineering: Develop, process, and optimize large-scale data pipelines.

- Data Science: Build and deploy machine learning models for predictive analytics.

- Data Warehousing: Perform scalable and high-performance data analytics.

- Real-Time Analytics: Analyze streaming data to gain instant insights.

- Business Intelligence: Utilize Power BI for interactive data visualization and reporting.

This comprehensive approach reduces the complexity of managing multiple separate solutions, enhancing efficiency and consistency across the organization.

2. OneLake: A Centralized Data Repository

At the heart of Microsoft Fabric is OneLake, a Software as a Service (SaaS) data lake that serves as a unified storage solution. It provides:

- A single location for all organizational data, ensuring consistency and accessibility.

- Simplified data storage and governance across different workloads.

- Seamless integration with Microsoft and third-party data sources.

With OneLake, teams can collaborate on data projects without the need for complex data transfers or redundant storage solutions.

3. Integrated Data Services

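Fabric includes several integrated services, called workloads, that cater to different aspects of data management and analytics. Several of them originally carried the “Synapse” prefix, which Microsoft has since dropped (for example, Synapse Real-Time Analytics is now Real-Time Intelligence), so you may see both names in study materials: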

- Data Factory – Facilitates data integration and ETL/ELT processes.

- Synapse Data Engineering – Provides Spark-based data processing and transformation.

- Synapse Data Science – Enables machine learning model creation and deployment.

- Synapse Data Warehousing – Supports high-performance data storage and analysis.

- Synapse Real-Time Analytics – Analyzes streaming data for instant insights.

- Power BI – Enhances data visualization and reporting capabilities.

By offering these services under one umbrella, Fabric ensures a seamless experience for data professionals, eliminating the need to switch between disparate platforms.

4. AI-Powered Capabilities

Microsoft Fabric integrates AI throughout the platform, most visibly through Copilot experiences in notebooks, Data Factory, Real-Time Analytics, and Power BI. These AI-powered features help:

- Automate data preparation and transformation.

- Generate insights and recommendations based on historical trends.

- Optimize machine learning workflows for improved efficiency.

This AI-driven approach simplifies complex tasks, allowing organizations to focus on extracting value from their data rather than managing infrastructure.

– Why Choose Microsoft Fabric?

Microsoft Fabric democratizes data analytics, making it accessible for businesses of all sizes. With its unified approach, organizations can:

- Reduce operational complexity by eliminating siloed systems.

- Enhance collaboration through centralized data storage and integrated tools.

- Improve security and governance with built-in compliance and access control features.

- Accelerate decision-making with real-time insights powered by AI.

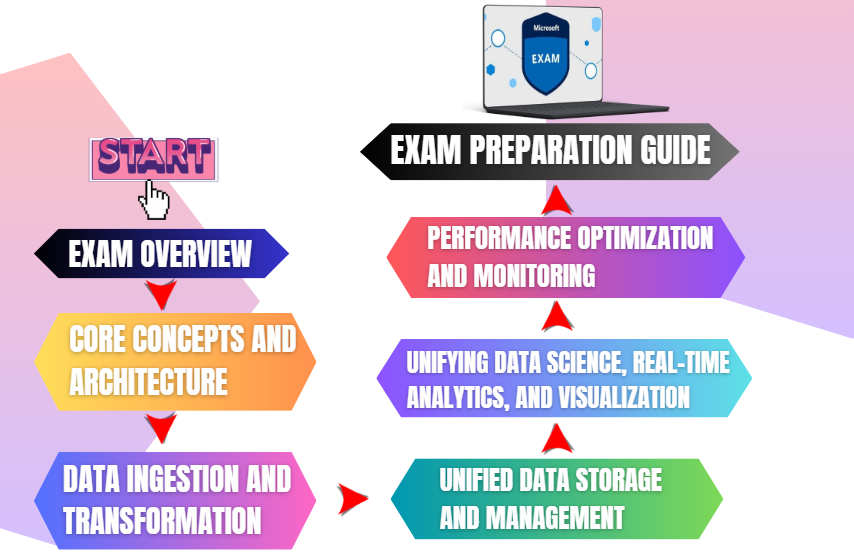

Microsoft DP-700 Exam Cheat Sheet

The DP-700: Implementing Data Engineering Solutions Using Microsoft Fabric Cheat Sheet provides a concise overview of key exam topics, including data ingestion, transformation, storage, security, and real-time analytics. It highlights essential Microsoft Fabric components like OneLake, Synapse, Data Factory, and Power BI, helping you quickly grasp critical concepts and prepare efficiently for the exam.

DP-700 Exam Overview

The DP-700 exam assesses a candidate’s ability to design, implement, and manage data engineering solutions using Microsoft Fabric. This requires a deep understanding of data loading patterns, architectural frameworks, and orchestration processes to support enterprise analytics.

Key Responsibilities

Candidates are expected to:

- Ingest and transform data to ensure efficient data processing and integration.

- Secure and manage analytics solutions by implementing governance, compliance, and access controls.

- Monitor and optimize performance to enhance system efficiency and scalability.

As a data professional, you will work closely with analytics engineers, data architects, analysts, and administrators to develop and deploy data solutions tailored to business needs. A strong command of SQL, PySpark, and Kusto Query Language (KQL) is essential for effective data manipulation and transformation.

Skills Measured

- Implement and manage an analytics solution

- Ingest and transform data

- Monitor and optimize an analytics solution

This certification validates expertise in Microsoft Fabric’s data engineering capabilities, ensuring organizations can derive actionable insights from their data with efficiency and precision.

Exam Details

The DP-700: Implementing Data Engineering Solutions Using Microsoft Fabric exam is the exam required to earn the Microsoft Certified: Fabric Data Engineer Associate certification. Candidates have 100 minutes to complete the proctored assessment, which is available in English. Like other Microsoft role-based exams, it allows access to Microsoft Learn during the exam but not to other outside resources, and any time spent looking things up counts against the clock, so you still need to know the material well. The exam may also include interactive components that require hands-on problem-solving to assess practical data engineering skills.

Microsoft Fabric: Core Concepts and Architecture

Microsoft Fabric is a comprehensive unified data analytics platform that integrates various data workloads into a single, cohesive environment. It is designed to eliminate data silos, enhance collaboration, and streamline data processing across an organization. Fabric provides an end-to-end solution for data engineering, data science, real-time analytics, data warehousing, and business intelligence, enabling seamless data management and advanced analytics.

At the heart of Microsoft Fabric is OneLake, a cloud-based Software as a Service (SaaS) data lake, which acts as the central repository for all organizational data. OneLake supports open data formats and ensures efficient data sharing, governance, and interoperability across multiple tools and platforms. This architecture allows enterprises to work with data more efficiently, securely, and at scale, without the need for complex infrastructure management.

– Key Core Concepts

1. Unified Analytics Platform

Microsoft Fabric is built to integrate diverse data workloads into a single platform, removing the need for multiple disconnected systems. It offers a holistic approach to enterprise analytics by centralizing data operations and promoting seamless collaboration across teams.

- Data Engineering: Enables large-scale data ingestion, transformation, and processing.

- Data Science: Supports machine learning model development, training, and deployment.

- Real-Time Analytics: Provides instant insights from streaming data sources.

- Data Warehousing: Delivers a high-performance, scalable analytics warehouse.

- Business Intelligence (BI): Powers data visualization and reporting using Power BI.

2. OneLake: The Centralized Data Repository

OneLake is the foundational data lake for Microsoft Fabric, providing a single, unified storage location for structured, semi-structured, and unstructured data. Unlike traditional data storage solutions, OneLake is automatically provisioned and managed by Microsoft, reducing the operational overhead of storage management.

Key Features of OneLake

- Centralized and Unified Storage: Eliminates data fragmentation by serving as a common repository for all workloads.

- Open Data Format Support: Allows seamless access to various file types, including Parquet, Delta Lake, and CSV.

- Virtualized Access with Shortcuts: Lets workloads read data in place from external sources (e.g., AWS S3, Azure Data Lake Storage Gen2) without duplicating it.

- Automated Provisioning and Management: As a SaaS offering, Microsoft handles infrastructure, scaling, and security, allowing organizations to focus solely on data analytics.

3. Workload-Specific Capabilities

Microsoft Fabric is structured around specialized workloads, each catering to a distinct aspect of data processing. These workloads ensure a modular yet integrated approach to enterprise analytics.

Fabric Workloads Overview

- Data Factory: Enables data integration through ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) pipelines, facilitating smooth data movement across systems.

- Synapse Data Engineering: Leverages Apache Spark for distributed data processing, ensuring high-speed transformation of large datasets.

- Synapse Data Science: Provides advanced tools for building and deploying machine learning (ML) models, including integration with Azure Machine Learning.

- Synapse Data Warehousing: A fully managed cloud data warehouse optimized for large-scale analytics and complex queries.

- Synapse Real-Time Analytics: Supports streaming data processing, allowing businesses to derive insights from IoT devices, logs, and real-time event streams.

- Power BI: A powerful business intelligence and visualization tool that connects directly to Fabric’s data workloads for real-time insights and reporting.

– Architectural Highlights

1. Lake-Centric Architecture

Microsoft Fabric follows a lake-centric architecture, where OneLake acts as the central hub for all data workloads. Unlike traditional architectures that require separate storage solutions for different analytics tasks, Fabric allows all workloads to operate on the same dataset in OneLake, ensuring data consistency, security, and accessibility.

Benefits of Lake-Centric Architecture

- Eliminates data duplication by providing a unified storage location.

- Enhances data consistency by allowing multiple workloads to work on the same dataset.

- Supports a multi-cloud strategy, enabling connectivity with external cloud providers.

2. Seamless Integration with Azure and Third-Party Services

One of the major advantages of Microsoft Fabric is its tight integration with other Microsoft Azure services, as well as external cloud platforms.

- Native Azure Integration: Directly connects with services like Azure Data Factory, Azure Synapse, Azure Machine Learning, and Azure Data Lake Storage.

- Multi-Cloud Connectivity: Accesses data from external cloud storage solutions like AWS S3 and Google Cloud Storage using OneLake Shortcuts.

- Eliminates Complex Data Movement: Reduces the need for data replication and ETL pipelines, enabling organizations to query data directly from multiple sources.

3. Governance and Security

Microsoft Fabric incorporates enterprise-grade security and governance features to protect sensitive data while ensuring compliance with industry regulations.

Security Features

- Centralized Security Management: Role-based access control (RBAC) ensures that only authorized users can access specific datasets.

- Data Lineage Tracking: Provides visibility into how data is processed, transformed, and consumed.

- Compliance Controls: Helps organizations meet the requirements of regulations and standards such as GDPR, HIPAA, and ISO 27001.

4. SaaS-Based Simplicity

Microsoft Fabric is a fully managed SaaS solution, meaning that Microsoft handles the underlying infrastructure, updates, and scaling. This allows organizations to:

- Reduce Operational Overhead: No need for manual infrastructure provisioning or maintenance.

- Enable Rapid Deployment: Get started with analytics and AI workloads immediately, without complex setup processes.

- Scale Through Capacities: Compute is purchased as Fabric capacities that can be resized as demand changes, while features such as bursting and smoothing absorb short spikes so performance stays consistent.

Data Ingestion and Transformation in Microsoft Fabric

Data ingestion and transformation form the backbone of modern data engineering. Microsoft Fabric, an end-to-end data analytics platform, provides a powerful suite of tools to streamline these processes. It enables businesses to efficiently collect, process, and transform vast amounts of data from multiple sources, ensuring it is structured, clean, and ready for analytics, reporting, and AI-driven insights.

Fabric integrates Data Factory for orchestrating data workflows and Synapse Data Engineering for Spark-based processing, simplifying the Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT) processes. With OneLake as a unified storage layer, Microsoft Fabric eliminates data silos, improves accessibility, and enhances performance.

This section explores the key components of Microsoft Fabric’s data ingestion and transformation capabilities, covering pipeline orchestration, real-time data ingestion, transformation techniques, and optimization strategies.

– Data Factory in Fabric: The Orchestration Engine

Data Factory in Microsoft Fabric is a fully managed data integration service that allows businesses to move and transform data across diverse environments. It provides no-code, low-code, and code-based solutions, making it accessible to both data engineers and business users.

Key Capabilities of Data Factory

1. Pipelines: Automating Data Workflows

A pipeline is a logical grouping of data movement and transformation tasks, defining the workflow to extract, transform, and load data.

- Pipelines can ingest structured, semi-structured, and unstructured data from diverse sources.

- They are primarily batch-oriented; near-real-time scenarios are typically handled with event-based triggers or with Eventstreams in Real-Time Analytics.

- Pipelines can be scheduled, event-driven, or triggered based on conditions, offering flexibility in data workflow automation.

2. Activities: Performing Data Operations

Activities within a pipeline define specific actions such as data movement, transformation, and control flow.

- Copy Activity: Moves data between sources and destinations, supporting over 90 connectors, including Azure Blob Storage, AWS S3, SQL databases, and third-party APIs.

- Dataflow Activity: Runs a Dataflow Gen2 (built on Power Query) for visual, low-code transformations such as filtering, aggregating, and pivoting data.

- Notebook Activity: Executes Spark notebooks to perform advanced transformations using PySpark, Scala, or Spark SQL.

- Control Flow Activities: Activities such as If Condition, ForEach, Until, and Switch enable conditional execution, loops, and branching for dynamic pipeline workflows.
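To make control flow concrete, here is a minimal plain-Python sketch of how activity dependencies can gate execution. It assumes a simplified model in which each activity lists its upstream activities with a condition of "Succeeded", "Failed", or "Completed"; `run_pipeline` and the activity names are hypothetical and not part of any Fabric API. Real pipelines are configured in the Fabric pipeline editor (or its JSON definition), not in Python.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical model: an activity is a callable plus a list of
# (upstream activity, condition) pairs. Conditions are "Succeeded",
# "Failed", or "Completed" (either outcome).
Activity = Tuple[Callable[[], None], List[Tuple[str, str]]]


def run_pipeline(activities: Dict[str, Activity]) -> Dict[str, str]:
    """Run activities in declaration order and return each one's status.

    Assumes every activity is declared after its upstream dependencies.
    An activity whose dependency conditions are not met is marked "Skipped".
    """
    status: Dict[str, str] = {}
    for name, (action, deps) in activities.items():
        ready = all(
            status.get(upstream) in ("Succeeded", "Failed")
            if condition == "Completed"
            else status.get(upstream) == condition
            for upstream, condition in deps
        )
        if not ready:
            status[name] = "Skipped"
            continue
        try:
            action()
            status[name] = "Succeeded"
        except Exception:
            status[name] = "Failed"
    return status


# Example: a failed transform skips publishing and runs a failure handler.
def copy_raw() -> None:
    pass  # stand-in for a Copy activity


def transform() -> None:
    raise RuntimeError("unexpected schema")  # simulate a failing notebook


def publish() -> None:
    pass


def notify_failure() -> None:
    pass


result = run_pipeline({
    "CopyRaw": (copy_raw, []),
    "Transform": (transform, [("CopyRaw", "Succeeded")]),
    "Publish": (publish, [("Transform", "Succeeded")]),
    "NotifyFailure": (notify_failure, [("Transform", "Failed")]),
})
print(result)
```

Routing a failure to a dedicated handler, as `NotifyFailure` does here, is a common way to alert on broken loads without halting independent branches.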

3. Connections: Managing Access to Data Sources

- In Azure Data Factory, linked services hold the connection details for external data stores, and datasets describe the specific data within them.

- Fabric Data Factory replaces both with connections, which store a source’s endpoint and credentials, while the table, file, or folder to read or write is configured directly in each activity.

4. Orchestration and Scheduling: Automating Data Ingestion

- Supports scheduled runs, on-demand (manual) runs, and event-based triggers, such as starting a pipeline when a file arrives in storage.

- Enables incremental loading strategies to process only new or updated records, reducing unnecessary processing.

- Supports monitoring and alerting, ensuring pipeline health and performance.
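The watermark pattern behind incremental loading can be sketched in a few lines of Python. This is a conceptual illustration, not Fabric code: it assumes each source row carries a hypothetical `modified_at` timestamp and that the watermark (the latest timestamp already loaded) is stored between runs, for example in a control table. In a Fabric pipeline the same logic is typically expressed with a Lookup activity that reads the watermark and a Copy activity whose source query filters on it.

```python
from datetime import datetime
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def incremental_load(
    source: List[Row], target: List[Row], watermark: datetime
) -> Tuple[List[Row], datetime]:
    """Append only rows modified after `watermark`, then return the new watermark.

    Assumes each row has a hypothetical `modified_at` timestamp column.
    """
    new_rows = [row for row in source if row["modified_at"] > watermark]
    target.extend(new_rows)
    # Advance the watermark to the newest change seen; if nothing changed,
    # keep the old value so the next run resumes from the same point.
    new_watermark = max((row["modified_at"] for row in new_rows), default=watermark)
    return new_rows, new_watermark


source = [
    {"id": 1, "modified_at": datetime(2025, 1, 1)},
    {"id": 2, "modified_at": datetime(2025, 1, 3)},
]
target: List[Row] = []

# First run: only id 2 is newer than the stored watermark.
loaded, watermark = incremental_load(source, target, datetime(2025, 1, 2))
print([r["id"] for r in loaded], watermark)

# A new row arrives; the second run picks up only that row.
source.append({"id": 3, "modified_at": datetime(2025, 1, 5)})
loaded, watermark = incremental_load(source, target, watermark)
print([r["id"] for r in loaded], watermark)
```

Because the filter uses a strict `>`, a row written later with exactly the same timestamp as the stored watermark would be missed; some teams compare with `>=` and deduplicate instead. The pattern also appends changed rows rather than updating them in place, which is the gap that CDC and MERGE fill.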

– Data Ingestion Techniques in Microsoft Fabric

Efficient data ingestion is key to scalable data analytics. Microsoft Fabric provides robust ingestion techniques optimized for structured, semi-structured, and unstructured data.

1. Connecting to Multiple Data Sources

Fabric offers out-of-the-box connectors for diverse sources, ensuring seamless integration.

- Cloud Storage: Azure Data Lake Storage (ADLS) Gen2, AWS S3, Google Cloud Storage

- Databases: Azure SQL Database, PostgreSQL, MySQL, Oracle, Snowflake

- Streaming Sources: Azure Event Hubs, Kafka, IoT Hub

- File Formats: JSON, Parquet, CSV, XML, Avro

2. Incremental and Real-Time Data Loading

Instead of processing entire datasets repeatedly, Microsoft Fabric supports:

- Change Data Capture (CDC): Identifies new, updated, or deleted records, ensuring efficient synchronization.

- Delta Lake-based Updates: Uses ACID-compliant transactions for scalable, incremental ingestion.

- Streaming Data Pipelines: Captures real-time data for low-latency analytics.
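Applying a batch of change records to a target table is the core of CDC-based synchronization. The sketch below models it in plain Python with a dictionary keyed by primary key; the `op`/`key`/`row` record layout is an assumption for illustration, since real CDC feeds (for example, SQL Server CDC or the Delta change data feed) use their own column names.

```python
from typing import Any, Dict, List

Table = Dict[Any, Dict[str, Any]]  # primary key -> row


def apply_changes(target: Table, changes: List[Dict[str, Any]]) -> Table:
    """Apply CDC records in commit order; the last change for a key wins.

    Each change has an "op" ("insert", "update", or "delete"), a "key", and,
    for inserts and updates, a "row" holding the new column values.
    """
    for change in changes:
        op, key = change["op"], change["key"]
        if op == "delete":
            target.pop(key, None)  # deleting an absent key is a no-op
        elif op in ("insert", "update"):
            target[key] = dict(change["row"])  # upsert: insert or overwrite
        else:
            raise ValueError(f"unknown CDC operation: {op!r}")
    return target


customers: Table = {1: {"name": "Ada"}, 2: {"name": "Bob"}}
changes = [
    {"op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"op": "delete", "key": 2},
    {"op": "insert", "key": 3, "row": {"name": "Cy"}},
]
print(apply_changes(customers, changes))
```

On a Delta Lake table, the equivalent step is usually a MERGE INTO statement with WHEN MATCHED ... THEN DELETE, WHEN MATCHED THEN UPDATE, and WHEN NOT MATCHED THEN INSERT clauses. Unlike this loop, MERGE expects at most one source row per target key, so the change batch is typically reduced to the latest change per key first.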

3. Hybrid Data Ingestion

Fabric enables hybrid data ingestion across on-premises and multi-cloud environments. On-premises sources are typically reached through the on-premises data gateway, while OneLake shortcuts let data held in other clouds be read in place rather than duplicated.

– Data Transformation with Synapse Data Engineering

Once data is ingested, Synapse Data Engineering enables powerful transformation capabilities using Apache Spark, SQL, and AI-driven techniques.

1. Spark Notebooks: Advanced Transformation Engine

Apache Spark Notebooks in Fabric provide scalable, distributed data processing for complex transformations.

- Supports PySpark, Scala, and Spark SQL for high-performance data manipulation.

- Enables schema evolution and transactional consistency with Delta Lake-based Lakehouse tables.

- Facilitates ML and AI integration using MLflow, TensorFlow, and scikit-learn.

2. Lakehouse Architecture: A Unified Data Model

Fabric embraces a Lakehouse architecture, combining the best of data lakes and data warehouses.

- Delta Lake format ensures ACID transactions, schema enforcement, and versioning.

- Unified Data Access: Fabric workloads—Data Engineering, Data Science, Business Intelligence (BI), and AI—share the same Lakehouse tables.

- Cost Optimization: Data is stored once in OneLake, avoiding unnecessary duplication.

3. Data Wrangling & Enrichment

Fabric provides intuitive data-wrangling capabilities through visual and code-based methods.

- Data Cleansing: Handles null values, duplicates, and inconsistencies.

- Schema Transformation: Enables column renaming, type conversion, and restructuring.

- Data Enrichment: Combines datasets, performs lookups, and adds derived attributes.
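The three wrangling steps above can be illustrated with a small, dependency-free Python function. The raw column names (`OrderID`, `Amt`, `Country`) and the region lookup are hypothetical; in a Fabric notebook you would express the same steps with PySpark DataFrame methods such as `dropna`, `dropDuplicates`, `withColumnRenamed`, `cast`, and `join`.

```python
from typing import Any, Dict, List, Set


def wrangle(raw: List[Dict[str, Any]], regions: Dict[str, str]) -> List[Dict[str, Any]]:
    """Cleanse, reshape, and enrich raw order records (hypothetical columns)."""
    seen: Set[int] = set()
    clean: List[Dict[str, Any]] = []
    for rec in raw:
        # Cleansing: drop rows without a key and skip duplicate order IDs.
        if rec.get("OrderID") is None:
            continue
        order_id = int(rec["OrderID"])
        if order_id in seen:
            continue
        seen.add(order_id)
        # Schema transformation: rename columns, convert types, fill nulls.
        amount = float(rec.get("Amt") or 0.0)
        country = (rec.get("Country") or "UNKNOWN").strip().upper()
        # Enrichment: look up a region and add a derived flag.
        clean.append({
            "order_id": order_id,
            "amount": amount,
            "country": country,
            "region": regions.get(country, "Other"),
            "is_large_order": amount >= 1000.0,
        })
    return clean


raw_orders = [
    {"OrderID": "1", "Amt": "1200.50", "Country": " us "},
    {"OrderID": "1", "Amt": "1200.50", "Country": "US"},  # duplicate
    {"OrderID": None, "Amt": "5", "Country": "DE"},       # missing key
    {"OrderID": "2", "Amt": None, "Country": "de"},       # null amount
]
print(wrangle(raw_orders, {"US": "Americas", "DE": "EMEA"}))
```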

4. Real-Time Transformation & Streaming Analytics

For real-time data pipelines, Fabric supports:

- Event Stream Processing: Capturing and transforming data from IoT devices, logs, and real-time feeds.

- Azure Stream Analytics Integration: Applying SQL-like transformations on live data.

- Kusto Query Language (KQL): Used for analyzing large-scale logs and telemetry data.

– Optimization Strategies for Data Ingestion & Transformation

To enhance performance, cost-efficiency, and scalability, organizations can adopt these best practices:

1. Optimizing Data Pipelines

- Use parallel execution to speed up data processing.

- Implement incremental processing to reduce redundant data movement.

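- Partition large tables on frequently filtered columns (and apply file-layout optimizations such as V-Order or Z-ordering) so queries can skip data they do not need.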
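Partitioning pays off through partition pruning: when a query filters on the partition column, the engine can skip whole partitions. The plain-Python sketch below simulates that behavior with an in-memory dictionary; the `order_date` column and helper names are hypothetical. In PySpark, the physical equivalent is writing a table with `DataFrameWriter.partitionBy("order_date")`.

```python
from collections import defaultdict
from datetime import date
from typing import Any, Dict, List, Set, Tuple

Row = Dict[str, Any]


def partition_by(rows: List[Row], column: str) -> Dict[Any, List[Row]]:
    """Group rows into partitions keyed by one column's value."""
    partitions: Dict[Any, List[Row]] = defaultdict(list)
    for row in rows:
        partitions[row[column]].append(row)
    return dict(partitions)


def scan_with_pruning(partitions: Dict[Any, List[Row]], wanted: Set[Any]) -> Tuple[List[Row], int]:
    """Read only partitions whose key passes the filter; return rows and partitions read."""
    selected = [key for key in partitions if key in wanted]
    rows = [row for key in selected for row in partitions[key]]
    return rows, len(selected)


orders = [
    {"order_date": date(2025, 1, 1), "amount": 10},
    {"order_date": date(2025, 1, 2), "amount": 20},
    {"order_date": date(2025, 1, 2), "amount": 5},
    {"order_date": date(2025, 1, 3), "amount": 7},
]
parts = partition_by(orders, "order_date")
rows, read = scan_with_pruning(parts, {date(2025, 1, 2)})
print(sum(r["amount"] for r in rows), f"read {read} of {len(parts)} partitions")
```

Partitioning on a column with very many distinct values produces many small files, so it usually works best on low-cardinality columns such as dates.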

2. Performance Tuning in Synapse Data Engineering

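- Rely on Adaptive Query Execution (AQE), which re-optimizes Spark plans at runtime using actual shuffle statistics (for example, coalescing small partitions or switching to a broadcast join).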

- Use broadcast joins for small datasets and shuffle-based joins for large datasets.

- Store data in optimized Parquet or Delta formats for faster access.
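The difference between a broadcast join and a shuffle-based join comes down to data movement. This sketch, written in plain Python rather than Spark, shows the broadcast hash join idea: the small table is turned into a hash table once and shared with every partition of the large table, so the large table does not need to be repartitioned by the join key. The table and column names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List

Row = Dict[str, Any]


def broadcast_hash_join(large_partitions: List[List[Row]], small: List[Row], key: str) -> List[Row]:
    """Inner join that copies ("broadcasts") the small table to every partition.

    Each partition of the large table is probed against the same in-memory hash
    table, so the large table never needs to be shuffled (repartitioned by key).
    """
    # Build phase: hash the small table once; this is what Spark would broadcast.
    lookup: Dict[Any, List[Row]] = defaultdict(list)
    for row in small:
        lookup[row[key]].append(row)
    # Probe phase: each partition is processed independently (in parallel on Spark executors).
    joined: List[Row] = []
    for partition in large_partitions:
        for row in partition:
            for match in lookup.get(row[key], []):
                joined.append({**row, **match})
    return joined


sales_partitions = [
    [{"store_id": 1, "amount": 10}, {"store_id": 2, "amount": 5}],
    [{"store_id": 1, "amount": 7}, {"store_id": 9, "amount": 3}],
]
stores = [{"store_id": 1, "city": "Oslo"}, {"store_id": 2, "city": "Lima"}]
print(broadcast_hash_join(sales_partitions, stores, "store_id"))
```

In PySpark, the equivalent hint is `large_df.join(broadcast(small_df), "store_id")` with `broadcast` imported from `pyspark.sql.functions`. Spark also broadcasts automatically when a table's estimated size is below `spark.sql.autoBroadcastJoinThreshold` (10 MB by default); for two large tables, a shuffle-based sort-merge join is usually the better choice because neither side fits comfortably in each executor's memory.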

3. Governance and Security

- Enforce Role-Based Access Control (RBAC) for data security.

- Apply Data Loss Prevention (DLP) policies to prevent sensitive data exposure.

- Track data lineage to ensure compliance and auditability.

Microsoft Fabric: Unified Data Storage and Management

Storing and managing data consistently across many tools and teams is a persistent challenge. Microsoft Fabric addresses it with a unified data storage and management environment that integrates data lakes, data warehouses, and governance frameworks into a single platform. This section explores the key components of Microsoft Fabric’s data storage and management capabilities: OneLake, Lakehouses, Synapse Data Warehousing, and security frameworks.

– OneLake: The Foundation of Unified Data Storage

OneLake serves as the backbone of Microsoft Fabric, offering a centralized, SaaS-based data lake that consolidates data across an organization. Unlike traditional storage systems, which often create fragmented silos, OneLake ensures seamless data accessibility across various workloads, including data engineering, analytics, and AI-driven applications.

Key Features of OneLake

1. Unified Storage Architecture

- Acts as a single, organization-wide data lake, ensuring consistent data access across all teams and departments.

- Uses a hierarchical namespace (tenant, then workspaces, then items such as lakehouses and warehouses, then folders and files), making large datasets easier to manage, structure, and navigate.

- Reduces data duplication by storing files in a common repository, enhancing storage efficiency.
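The OneLake hierarchy shows up directly in the paths that Spark and other ADLS-compatible tools use. The helper below builds an ABFS URI in the documented `abfss://<workspace>@onelake.dfs.fabric.microsoft.com/<item>.<itemtype>/...` form; the workspace and lakehouse names are hypothetical, and the function is an illustration rather than part of any SDK.

```python
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_abfss_path(workspace: str, item: str, item_type: str, *parts: str) -> str:
    """Build an ABFS URI that follows OneLake's workspace > item > folder hierarchy."""
    # Trim stray slashes so callers can pass "Files", "/Files/", etc.
    relative = "/".join(p.strip("/") for p in parts if p)
    return f"abfss://{workspace}@{ONELAKE_HOST}/{item}.{item_type}/{relative}"


# Hypothetical workspace "Sales" containing a lakehouse named "Bronze".
print(onelake_abfss_path("Sales", "Bronze", "Lakehouse", "Files", "raw/orders.csv"))
print(onelake_abfss_path("Sales", "Bronze", "Lakehouse", "Tables", "orders"))
```

A path like the first one could then be passed to a Spark reader in a Fabric notebook; lakehouses keep managed Delta tables under `Tables/` and arbitrary files under `Files/`.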

2. Support for Open Formats

- OneLake natively supports industry-standard data formats such as Delta Lake, Parquet, CSV, and JSON, ensuring broad interoperability.

- Data remains accessible to third-party tools, including Apache Spark, SQL-based engines, and AI models, without requiring format conversions.

3. Virtualized Data Access with Shortcuts

- OneLake “shortcuts” enable organizations to reference external data (e.g., Azure Data Lake Storage Gen2, Amazon S3, Google Cloud Storage, or other OneLake locations) without duplicating or physically moving it.

- This virtualized approach avoids extra storage costs and copy pipelines, letting teams query data in its original location while still using Microsoft Fabric’s analytics tools.

4. Simplified Data Management

- As a fully managed SaaS solution, OneLake eliminates the need for complex infrastructure management, reducing operational overhead.

- Automated performance optimization, indexing, and security enforcement ensure smooth and secure data operations.

– Lakehouse: The Power of a Unified Architecture

The Lakehouse architecture in Microsoft Fabric seamlessly integrates the scalability of data lakes with the structured querying capabilities of data warehouses. This hybrid approach enables organizations to store, process, and analyze structured, semi-structured, and unstructured data within a single platform.

How Microsoft Fabric Implements the Lakehouse Concept

1. Combining Data Lakes and Warehouses

- Fabric stores data in open formats within OneLake, allowing both raw and processed datasets to coexist in a structured manner.

- Supports multi-modal workloads, enabling data engineers, analysts, and data scientists to access and transform data without needing multiple storage layers.

2. Delta Lake for Transactional Consistency

- The Delta Lake format provides ACID transactions, schema enforcement, and time travel, making it possible to roll back changes and query historical versions of data.

- Schema evolution (for example, enabling Delta’s mergeSchema option when writing) lets tables adapt to new columns without manual rework.
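Time travel is easier to reason about once you see that every commit produces a new table version. The class below is a deliberately simplified, in-memory model of that idea in plain Python; `VersionedTable` is hypothetical and stores full snapshots, whereas real Delta tables log file-level changes.

```python
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


class VersionedTable:
    """Toy table that keeps every committed snapshot, loosely modeled on Delta Lake.

    Version 0 is the empty table; each overwrite commits a new immutable version.
    (Real Delta tables record add/remove file actions in _delta_log instead.)
    """

    def __init__(self) -> None:
        self._versions: List[List[Row]] = [[]]

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def overwrite(self, rows: List[Row]) -> int:
        # Copy rows so later changes to the caller's list cannot alter history.
        self._versions.append([dict(r) for r in rows])
        return self.version

    def read(self, version: Optional[int] = None) -> List[Row]:
        """Read the latest version, or time travel to an earlier one."""
        v = self.version if version is None else version
        if not 0 <= v <= self.version:
            raise ValueError(f"version {v} does not exist")
        return [dict(r) for r in self._versions[v]]

    def restore(self, version: int) -> int:
        """Roll back by committing an old snapshot as a new version."""
        return self.overwrite(self.read(version))


inventory = VersionedTable()
inventory.overwrite([{"sku": "A1", "qty": 5}])   # version 1
inventory.overwrite([{"sku": "A1", "qty": 0}])   # version 2: a bad update
print(inventory.read(1))                         # time travel to version 1
print(inventory.restore(1), inventory.read())    # version 3 matches version 1
```

On a real Delta table, the corresponding operations are reading with `spark.read.format("delta").option("versionAsOf", 1).load(path)` and running `RESTORE TABLE <table> TO VERSION AS OF 1`; like `restore` here, a Delta restore is itself recorded as a new commit.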

3. Optimized Data Organization

- Data is structured into tables and folders, allowing SQL-based querying, batch processing, and real-time streaming analytics.

- The Lakehouse model supports machine learning and AI workloads, providing seamless integration with Azure Machine Learning and Synapse Analytics.

– Synapse Data Warehousing: High-Performance Analytics

For structured data processing and analytical workloads, Microsoft Fabric includes Synapse Data Warehousing, a high-performance, cloud-native data warehouse solution designed for fast, scalable, and cost-efficient query execution.

Key Capabilities of Synapse Data Warehousing

1. Optimized for Analytical Workloads

- Designed for big data analytics, supporting large-scale OLAP (Online Analytical Processing) queries with high concurrency.

- Provides enterprise-grade performance for BI reporting, financial forecasting, and complex data modeling.

2. SQL-Based Querying with T-SQL

- Users can leverage T-SQL (Transact-SQL) to query and manipulate data, ensuring compatibility with existing SQL-based applications.

- Native support for joins, aggregations, and complex transformations enables efficient data exploration.

3. Performance Optimization and Indexing

- The warehouse engine manages data layout and statistics automatically, rather than requiring users to create and maintain indexes.

- Data is stored in a columnar Delta Parquet format (with V-Order optimization), which improves compression and reduces the amount of data scanned per query.

4. Scalability and Elasticity

- Supports on-demand scaling, allowing businesses to increase or decrease compute resources based on workload requirements.

- Cost-efficient architecture reduces processing costs for sporadic workloads, making it ideal for dynamic business needs.

– Data Security and Governance in Microsoft Fabric

Ensuring data security, compliance, and governance is critical in modern data management. Microsoft Fabric provides a comprehensive security framework, integrating access control, encryption, and compliance monitoring to protect sensitive data.

1. Centralized Security Management

- Implements role-based access control (RBAC) to ensure that only authorized users can access or modify data.

- Provides audit logging and activity tracking to monitor user interactions and prevent unauthorized data changes.

2. Data Governance with Microsoft Purview

- Microsoft Purview is integrated into Fabric, offering centralized governance, data lineage tracking, and metadata management.

- Organizations can catalog, classify, and monitor data usage, ensuring compliance with GDPR, HIPAA, and other regulatory standards.

3. Security Features for Data Protection

- Row-level and column-level security enforce granular access control, restricting data visibility based on user roles and permissions.

- Data masking and encryption ensure that sensitive information remains protected, even when shared across multiple teams.
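The combined effect of row-level security, column-level security, and masking can be shown with a small Python function that filters what one user sees. This is a conceptual model only: the region-based rule, column names, and `secure_view` helper are hypothetical. In a Fabric warehouse these controls are declared in T-SQL (security policies with predicate functions for RLS, column-level GRANT permissions, and dynamic data masking) and enforced by the engine, not by application code.

```python
from typing import Any, Dict, List, Set

Row = Dict[str, Any]


def secure_view(
    rows: List[Row],
    user_regions: Set[str],
    allowed_columns: Set[str],
    masked_columns: Set[str],
) -> List[Row]:
    """Apply row-level filtering, column-level projection, and masking for one user."""
    result: List[Row] = []
    for row in rows:
        # Row-level security: keep only rows in the user's permitted regions.
        if row["region"] not in user_regions:
            continue
        # Column-level security: project only columns the user may read.
        visible = {col: val for col, val in row.items() if col in allowed_columns}
        # Masking: reveal only the last four characters of sensitive values.
        for col in masked_columns:
            if col in visible:
                text = str(visible[col])
                visible[col] = "X" * max(len(text) - 4, 0) + text[-4:]
        result.append(visible)
    return result


customers = [
    {"customer": "Ana", "region": "EU", "card": "4111222233334444", "revenue": 120},
    {"customer": "Raj", "region": "APAC", "card": "5500000000000004", "revenue": 90},
]
# Hypothetical EU analyst: may see customer, card (masked), and revenue.
print(secure_view(customers, {"EU"}, {"customer", "card", "revenue"}, {"card"}))
```

Enforcing these rules in the engine matters because every client, including Power BI reports and ad hoc SQL queries, then receives the same filtered and masked results.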

Unifying Data Science, Real-Time Analytics, and Visualization

Microsoft Fabric provides a comprehensive, cloud-based data platform that enables seamless integration of machine learning, real-time analytics, and data visualization. By unifying these capabilities, Fabric empowers businesses to extract valuable insights from both historical and streaming data in a highly efficient manner. At its core, Microsoft Fabric brings together:

- Synapse Data Science for machine learning and AI-driven insights

- Synapse Real-Time Analytics for processing and analyzing streaming data

- Power BI for data visualization and reporting

This section discusses these key components of Microsoft Fabric, exploring how organizations can leverage their capabilities to enhance data-driven decision-making.

– Synapse Data Science: Machine Learning and AI Capabilities

Microsoft Fabric integrates seamlessly with Synapse Data Science, offering a comprehensive suite of tools for machine learning (ML) and artificial intelligence (AI) development. This enables data scientists and AI engineers to build, train, and deploy ML models within a scalable and secure environment.

Key Features of Synapse Data Science

1. Machine Learning Capabilities

- Fabric supports popular machine learning frameworks, including scikit-learn, TensorFlow, and PyTorch, allowing flexibility in model development.

- Built-in tools enable data preprocessing, feature engineering, and model evaluation, ensuring a streamlined ML workflow.

- Seamless integration with Azure Machine Learning expands the range of automated ML and deep learning capabilities available to users.

2. MLflow for Experiment Tracking

- Fabric incorporates MLflow, an open-source machine learning lifecycle management tool, to track experiments, manage models, and streamline deployment.

- Users can log parameters, metrics, and artifacts for each ML experiment, making model comparison and reproducibility effortless.

3. Notebook-Based Development

- Data scientists can use interactive notebooks (Jupyter-style) within Fabric to write, test, and execute machine learning code.

- These notebooks support Python, R, and SQL, providing a flexible environment for collaborative development and real-time experimentation.

– Synapse Real-Time Analytics: Processing Live Data Streams

With the increasing demand for real-time insights, Microsoft Fabric offers Synapse Real-Time Analytics, a high-performance solution for processing and analyzing streaming data. This component is optimized for time-series data, making it ideal for industries like finance, manufacturing, IoT, and cybersecurity.

How Synapse Real-Time Analytics Works

1. Kusto Query Language (KQL) for Fast Data Exploration

- Synapse Real-Time Analytics uses Kusto Query Language (KQL), a powerful, efficient query language designed for analyzing large-scale streaming data.

- KQL is optimized for time-series analysis, making it particularly useful for log monitoring, security analytics, and IoT data processing.
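One of KQL's most common time-series patterns is counting events per fixed time bucket with `summarize count() by bin(Timestamp, 5m)`. The Python sketch below reproduces that logic so the semantics of `bin()` are explicit: each timestamp is rounded down to the start of its bucket. The `Events` table and `Timestamp` column are hypothetical names.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Dict, Iterable

EPOCH = datetime(1970, 1, 1)


def bin_time(ts: datetime, size: timedelta) -> datetime:
    """Round a timestamp down to the start of its bucket, similar to KQL's bin()."""
    return EPOCH + ((ts - EPOCH) // size) * size


def count_by_bin(timestamps: Iterable[datetime], size: timedelta) -> Dict[datetime, int]:
    """Rough equivalent of `Events | summarize count() by bin(Timestamp, 5m)` when size is 5 minutes."""
    counts = Counter(bin_time(ts, size) for ts in timestamps)
    return dict(sorted(counts.items()))


events = [
    datetime(2025, 1, 1, 10, 1),
    datetime(2025, 1, 1, 10, 4),
    datetime(2025, 1, 1, 10, 7),
]
print(count_by_bin(events, timedelta(minutes=5)))
```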

2. Real-Time Data Ingestion and Processing

- Fabric enables real-time ingestion from various sources, including:

  - Azure Event Hubs

  - IoT devices

  - Kafka streams

  - Custom streaming applications

- This ensures that organizations can capture and process data in motion, allowing for immediate analysis and response.

3. Event Streams for Managing High-Volume Data

- Eventstreams in Microsoft Fabric capture, transform, and route live data streams to destinations such as eventhouses and lakehouses, while the Real-Time hub provides a central place to discover and manage those streams.

- Combined with Activator, event streams let businesses monitor real-time changes, detect anomalies, and trigger automated actions based on predefined conditions.
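A simple way to picture anomaly detection on a stream is a rolling z-score: compare each new reading with the mean and standard deviation of the readings just before it. The plain-Python sketch below implements that generic technique; it is not how Activator or Eventstreams work internally, and the window size, threshold, and sample temperatures are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(
    readings: Iterable[float], window: int = 5, threshold: float = 3.0
) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings far from the rolling mean of the previous `window` readings."""
    recent: Deque[float] = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            # Flag values more than `threshold` standard deviations from the mean;
            # if the window is perfectly flat, any change counts as anomalous.
            if (sigma == 0 and value != mu) or (sigma > 0 and abs(value - mu) > threshold * sigma):
                yield i, value
        recent.append(value)


temperatures = [20, 21, 20, 22, 21, 20, 35, 21]
print(list(detect_anomalies(temperatures)))
```

In an eventhouse you could run comparable analysis in KQL, for example with the built-in `series_decompose_anomalies()` function, and then use Activator to act on the flagged events.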

4. Eventhouses: High-Performance Time-Series Analytics

- Eventhouses host one or more KQL databases and provide storage and query compute optimized for time-series and event data.

- They are optimized for fast retrieval of real-time streaming data, making them ideal for use cases such as fraud detection, predictive maintenance, and stock market analysis.

5. Real-Time Data Visualization

- Fabric includes built-in visualization tools that enable users to create live dashboards, helping businesses track operational metrics, detect trends, and take immediate action.

– Power BI Integration: Advanced Data Visualization and Insights

To make data-driven decision-making more accessible and intuitive, Microsoft Fabric integrates directly with Power BI, one of the most powerful business intelligence (BI) and data visualization tools available. This integration enables organizations to transform complex datasets into interactive reports and dashboards.

Key Power BI Capabilities in Microsoft Fabric

1. Direct Lake Mode for Instant Data Access

- Direct Lake mode lets Power BI semantic models read Delta tables directly from OneLake, avoiding both the data copy that Import mode requires and the per-query round trips to the source that DirectQuery makes.

- This delivers performance close to Import mode while picking up new data in the underlying tables without a full scheduled refresh.

2. Custom Reports and Dashboards

- Power BI provides drag-and-drop reporting capabilities, allowing users to create custom reports, charts, and KPI dashboards without extensive technical expertise.

- It enables businesses to track key performance indicators (KPIs), monitor trends, and generate automated reports.

3. Advanced Data Modeling and Aggregation

- Power BI’s data modeling capabilities allow users to create relationships between different datasets, define calculated columns, and apply aggregations for deep analysis.

- DAX (Data Analysis Expressions) is used to define measures and calculated columns, while Power Query handles data shaping and transformation before the data reaches the model.
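To make relationships and aggregations concrete, here is a small Python sketch of what a model relationship does at query time. Each fact row is matched to its dimension row through a key, and a measure is summed by a dimension attribute. The table and column names are hypothetical. In Power BI, you would express this as a model relationship plus a DAX measure rather than as code.

```python
from collections import defaultdict

# Hypothetical dimension table: product key -> descriptive attributes.
dim_product = {
    1: {"name": "Laptop", "category": "Hardware"},
    2: {"name": "Mouse", "category": "Hardware"},
    3: {"name": "Antivirus", "category": "Software"},
}

# Hypothetical fact table: one row per sale, referencing the dimension by key.
fact_sales = [
    {"product_key": 1, "amount": 1200.0},
    {"product_key": 2, "amount": 25.0},
    {"product_key": 3, "amount": 60.0},
    {"product_key": 1, "amount": 1100.0},
]


def total_by_attribute(facts, dimension, fk, attribute, measure):
    """Follow the fact-to-dimension relationship and sum `measure` per `attribute`."""
    totals = defaultdict(float)
    for row in facts:
        group = dimension[row[fk]][attribute]  # the relationship lookup
        totals[group] += row[measure]          # the aggregation, like a SUM measure
    return dict(totals)


print(total_by_attribute(fact_sales, dim_product, "product_key", "category", "amount"))
# {'Hardware': 2325.0, 'Software': 60.0}
```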

4. Real-Time Dashboards

- Power BI supports real-time dashboards, displaying live data from Synapse Real-Time Analytics workloads.

- This is particularly useful for operational monitoring, such as tracking IoT sensor readings, monitoring website traffic, or analyzing financial transactions in real time.

Why Microsoft Fabric? The Competitive Advantage

Microsoft Fabric stands out as a comprehensive data platform by offering a unified approach to data storage, processing, and visualization. Unlike traditional data solutions that require multiple disjointed tools, Fabric integrates machine learning, real-time analytics, and business intelligence into a single environment, streamlining operations and reducing complexity. This makes it an ideal choice for organizations looking to maximize their data’s potential while maintaining efficiency and scalability.

- One of the key advantages of Microsoft Fabric is its centralized data ecosystem. By leveraging OneLake as the primary data storage solution, organizations can store structured, semi-structured, and unstructured data in a unified manner, eliminating data silos. This centralization enhances collaboration across teams, as different workloads—ranging from machine learning experiments to real-time analytics—can access the same data without duplication or complex data movement processes.

- Fabric also provides a strong foundation for machine learning and artificial intelligence development. With built-in support for widely used frameworks such as scikit-learn, TensorFlow, and PyTorch, data scientists and AI engineers can efficiently build, train, and deploy models. The integration of MLflow simplifies model lifecycle management, while the seamless connection with Azure Machine Learning expands capabilities for more advanced AI-driven solutions.

- Real-time data processing is another key strength of Microsoft Fabric. The inclusion of Synapse Real-Time Analytics enables businesses to capture, analyze, and react to streaming data from various sources, including IoT devices, financial transactions, and web applications. Using Kusto Query Language (KQL), users can perform high-speed queries on massive datasets, making it easier to detect trends, identify anomalies, and respond to critical events in real time.

- Data visualization and reporting capabilities are significantly enhanced through Fabric’s deep integration with Power BI. Organizations can create interactive dashboards and reports that connect directly to OneLake using Direct Lake Mode, so reports reflect current data without copying it into a separate model. Power BI’s data modeling and visualization features make it easier for business users to derive meaningful insights with less reliance on technical specialists.

- Scalability, security, and governance are critical considerations in any modern data platform, and Microsoft Fabric addresses each of them. The platform provides security features such as row-level security, column-level security, and data encryption to protect sensitive information across workloads. Additionally, Microsoft Purview integration provides centralized data governance, helping organizations meet regulatory requirements and maintain data quality.

- By offering a seamless combination of centralized storage, machine learning, real-time analytics, and business intelligence, Microsoft Fabric enables organizations to adopt a data-driven approach with greater efficiency and flexibility. Its unified platform reduces the need for fragmented data tools, streamlines operations, and enhances collaboration across departments.

Performance Optimization and Monitoring in Microsoft Fabric

Optimizing performance and maintaining robust monitoring mechanisms are critical for ensuring the efficiency, reliability, and cost-effectiveness of data engineering solutions within Microsoft Fabric. By implementing performance-tuning strategies, organizations can enhance data processing speeds, streamline query execution, and optimize resource utilization. Additionally, proactive monitoring and cost management practices help maintain operational stability while minimizing unnecessary expenditures.

– Performance Tuning

Optimizing various components within Microsoft Fabric requires targeted strategies tailored to different workloads, including Spark-based processing, data warehousing, real-time analytics, and business intelligence solutions such as Power BI.

For Spark-based data processing in Synapse Data Engineering, key optimization techniques include:

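- Efficient Data Partitioning and Bucketing – Partitioning tables on commonly filtered columns lets queries skip irrelevant files, and bucketing or repartitioning by join keys reduces shuffle operations, improving parallel processing efficiency.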

- Optimized Spark Configurations – Adjusting memory allocation, executor instances, and caching settings improves computational performance.

- Efficient Data Formats – Storing data in columnar formats such as Parquet and Delta Lake (the default table format in Fabric lakehouses) speeds up reads and writes and leads to faster query execution.

- Minimized Data Shuffling – Filtering data early and broadcasting small lookup tables in joins avoids unnecessary data movement between executors.
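You can illustrate why partitioning by a key reduces shuffling without a cluster. The Python sketch below assigns rows to a fixed number of partitions by hashing a key. Spark applies a similar idea when data is repartitioned by a column, although Spark uses its own hash function. The use of `zlib.crc32` and the partition count are assumptions made for the sketch. Because every row with a given key lands in the same partition, each partition can be aggregated on its own, and the partial results combine without a second exchange of data.

```python
import zlib
from collections import defaultdict


def hash_partition(rows: list[dict], key: str, num_partitions: int) -> dict[int, list[dict]]:
    """Assign each row to a partition by hashing `key`, so equal keys always share a partition."""
    partitions: dict[int, list[dict]] = defaultdict(list)
    for row in rows:
        # crc32 is stable across runs, unlike Python's salted hash() for strings.
        pid = zlib.crc32(str(row[key]).encode("utf-8")) % num_partitions
        partitions[pid].append(row)
    return dict(partitions)


def totals_per_partition(partitions: dict[int, list[dict]], key: str, value: str) -> dict:
    """Aggregate each partition on its own, then combine the partial results."""
    result: dict = {}
    for rows in partitions.values():
        local: dict = {}
        for row in rows:
            local[row[key]] = local.get(row[key], 0) + row[value]
        # Keys are co-located, so partial results never overlap and a plain union suffices.
        assert result.keys().isdisjoint(local)
        result.update(local)
    return result


orders = [
    {"customer": "c1", "amount": 10},
    {"customer": "c2", "amount": 5},
    {"customer": "c1", "amount": 7},
    {"customer": "c3", "amount": 2},
]
parts = hash_partition(orders, "customer", num_partitions=4)
print(totals_per_partition(parts, "customer", "amount"))
```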

In Synapse Data Warehousing, performance tuning involves:

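- Statistics and Data Types – Fabric Warehouse manages indexing and data distribution automatically, so tuning focuses on keeping statistics current and using the smallest data types that fit the data.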

- Optimized Query Execution – Writing efficient T-SQL queries reduces processing time.

- Batch Loading – Ingesting data in large batches (for example, with COPY INTO or data pipelines) instead of many single-row inserts keeps table storage efficient and reduces overhead.

OneLake performance optimization focuses on organizing data efficiently, using shortcuts to reference data in place instead of copying it, and structuring files for fast retrieval. Compacting many small files into fewer, larger Delta/Parquet files reduces the number of files each query must open, which lowers latency and improves query responsiveness.
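Delta Lake's `OPTIMIZE` command addresses the small-file problem by rewriting small files into larger ones. The Python sketch below shows only a planning step: a simple greedy heuristic that groups small files into batches close to a target size. It is not Delta Lake's actual algorithm, and the 128 MB target and file sizes are illustrative assumptions rather than Fabric defaults.

```python
def plan_compaction(file_sizes_mb: list[int], target_mb: int) -> list[list[int]]:
    """Group small files into batches whose combined size stays within target_mb."""
    batches: list[list[int]] = []
    current: list[int] = []
    current_size = 0
    for size in sorted(file_sizes_mb):
        if size >= target_mb:
            continue  # already large enough; leave the file as-is
        if current and current_size + size > target_mb:
            batches.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        batches.append(current)
    # Rewriting a single file gains nothing, so keep only multi-file batches.
    return [batch for batch in batches if len(batch) > 1]


sizes = [4, 8, 2, 130, 60, 50, 30, 16, 6]
print(plan_compaction(sizes, target_mb=128))  # [[2, 4, 6, 8, 16, 30, 50]]
```

Here seven small files (116 MB in total) would be rewritten as one file. The 130 MB file is already above the target, and the 60 MB file is left alone because it has no partner that fits.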

For real-time analytics, performance enhancements include:

- Optimized Kusto Query Language (KQL) Queries – Structuring queries for rapid execution.

- Efficient Event Stream Management – Controlling data throughput for high-performance streaming.

- Optimized Eventhouse Queries – Ensuring fast and efficient processing of time-series data.

In Power BI, performance improvements are achieved through:

- Optimized Data Models – Using a star schema and removing unused columns keeps semantic models small and visuals responsive.

- Reduced Query Complexity – Minimizing unnecessary joins and aggregations.

- Direct Lake Mode Utilization – Enabling high-speed data retrieval directly from OneLake.

– Monitoring and Logging

A structured approach to monitoring keeps workloads running smoothly and helps prevent performance degradation and unexpected failures. Microsoft Fabric provides logging and diagnostic tools, including the Monitoring hub, for tracking activity and performance across workloads.

Pipeline Monitoring enables:

- Tracking Data Factory pipeline executions to identify bottlenecks.

- Logging errors and monitoring pipeline run statuses.

- Analyzing data flow performance for optimization.
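When you review pipeline runs, a quick summary of failures and the slowest activities usually points to the bottleneck. The sketch below assumes that run records have already been exported into simple dictionaries. The field names (`activity`, `status`, `duration_s`) and the sample values are hypothetical and do not correspond to a specific Fabric API.

```python
from collections import Counter, defaultdict
from statistics import mean


def summarize_runs(runs: list[dict]) -> dict:
    """Count failures per activity and find the slowest activity by average successful duration."""
    failures = Counter(r["activity"] for r in runs if r["status"] == "Failed")
    durations: dict[str, list[float]] = defaultdict(list)
    for r in runs:
        if r["status"] == "Succeeded":
            durations[r["activity"]].append(r["duration_s"])
    averages = {activity: mean(values) for activity, values in durations.items()}
    slowest = max(averages, key=averages.get) if averages else None
    return {"failures": dict(failures), "avg_duration_s": averages, "slowest": slowest}


runs = [
    {"activity": "CopyOrders", "status": "Succeeded", "duration_s": 120},
    {"activity": "CopyOrders", "status": "Succeeded", "duration_s": 180},
    {"activity": "TransformNotebook", "status": "Succeeded", "duration_s": 420},
    {"activity": "TransformNotebook", "status": "Failed", "duration_s": 35},
    {"activity": "LoadWarehouse", "status": "Succeeded", "duration_s": 90},
]
report = summarize_runs(runs)
print(report["slowest"], report["failures"])
```

Only successful runs count toward average duration, so a run that fails fast does not make an activity look quicker than it really is.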

Notebook Execution Monitoring includes:

- Tracking Spark notebook resource utilization and execution times.

- Logging output and errors for debugging and optimization.

Azure Monitor Integration allows organizations to:

- Collect and analyze system logs and performance metrics.

- Set up automated alerts for detecting anomalies and performance degradation.

Logging and Auditing Mechanisms ensure:

- Comprehensive tracking of data access and modifications.

- Compliance with security and regulatory requirements.

Real-Time Analytics Monitoring includes:

- Monitoring event stream throughput and latency for optimized processing.

- Evaluating KQL query execution efficiency.

- Tracking Eventhouse performance to ensure smooth real-time analytics.
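You can compute throughput and latency directly from event timestamps. In this sketch, each event is a pair of hypothetical timestamps in milliseconds: when the source produced it and when it was ingested. Throughput is the number of events per second over the ingestion window, and latency is summarized with a nearest-rank 95th percentile. Both the record shape and the choice of percentile method are assumptions made for illustration.

```python
def stream_stats(events: list[tuple[int, int]]) -> tuple[float, int]:
    """Return (events per second, p95 latency in ms) for (produced_ms, ingested_ms) pairs."""
    if len(events) < 2:
        raise ValueError("need at least two events to measure throughput")
    ingested = sorted(ing for _, ing in events)
    span_s = (ingested[-1] - ingested[0]) / 1000
    throughput = len(events) / span_s if span_s > 0 else float("inf")
    latencies = sorted(ing - prod for prod, ing in events)
    # Nearest-rank 95th percentile: rank = ceil(0.95 * n), computed with integers to avoid float rounding.
    rank = (95 * len(latencies) + 99) // 100
    return throughput, latencies[rank - 1]


events = [(0, 400), (1000, 1300), (2000, 2500), (3000, 3200), (4000, 6000)]
rate, p95 = stream_stats(events)
print(f"{rate:.2f} events/s, p95 latency {p95} ms")
```

In a sample this small, a single slow event (2000 ms here) determines the p95. In practice, percentiles are tracked over much larger windows.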

– Cost Management

Cost optimization is integral to maintaining an efficient and scalable data engineering infrastructure within Microsoft Fabric. Effective strategies include:

- Identifying Unnecessary Resource Consumption – Analyzing workload patterns to eliminate wasteful compute usage.

- Utilizing Reserved Capacity – Leveraging cost-saving options for long-term workloads.

- Optimizing Data Storage and Processing – Ensuring efficient data structuring to reduce storage costs.

- Automating Compute Resource Management – Scheduling workload execution to minimize idle resource consumption.

Understanding the cost implications of different Fabric workloads enables organizations to:

- Monitor and analyze resource usage.

- Utilize cost analysis tools for budgeting and forecasting.

- Adjust resource allocations based on workload demands.

DP-700 Exam Preparation Guide

Preparing for the DP-700 exam requires a strategic approach that combines theoretical knowledge, hands-on practice, and the use of high-quality study resources. Microsoft Fabric is an evolving platform, and understanding its core functionalities is essential for success. This section provides a comprehensive roadmap to help you effectively prepare for the exam, covering study strategies, recommended learning materials, practice tests, and community resources.

Study Tips and Strategies

A well-structured study plan is key to mastering the exam objectives. The DP-700 exam evaluates your ability to implement and manage data engineering solutions within Microsoft Fabric. Consider the following strategies to enhance your preparation:

Enhanced Study Strategies for DP-700 Exam Preparation

– Understand the Exam Objectives

A strong foundation begins with a clear understanding of the DP-700 exam objectives, which outline the core skills and knowledge areas you’ll be tested on. Review the official DP-700 study guide on Microsoft Learn for the latest list of skills measured, as Microsoft periodically updates its certification content to reflect advancements in data engineering and analytics. Break down the objectives into specific topics, such as data integration, data storage, real-time analytics, and security, and identify how they align with your current skill set. The major topics are:

1. Implement and manage an analytics solution (30–35%)

Configure Microsoft Fabric workspace settings

- Configure Spark workspace settings (Microsoft Documentation: Data Engineering workspace administration settings in Microsoft Fabric)

- Configure domain workspace settings (Microsoft Documentation: Fabric domains)

- Configure OneLake workspace settings (Microsoft Documentation: Workspaces in Microsoft Fabric and Power BI, Workspace Identity Authentication for OneLake Shortcuts and Data Pipelines)

- Configure data workflow workspace settings (Microsoft Documentation: Introducing Apache Airflow job in Microsoft Fabric)

Implement lifecycle management in Fabric

- Configure version control (Microsoft Documentation: What is version control?)

- Implement database projects

- Create and configure deployment pipelines (Microsoft Documentation: Get started with deployment pipelines)

Configure security and governance

- Implement workspace-level access controls (Microsoft Documentation: Roles in workspaces in Microsoft Fabric)

- Implement item-level access controls

- Implement row-level, column-level, object-level, and file-level access controls (Microsoft Documentation: Row-level security in Fabric data warehousing, Column-level security in Fabric data warehousing)

- Implement dynamic data masking (Microsoft Documentation: Dynamic data masking in Fabric data warehousing)

- Apply sensitivity labels to items (Microsoft Documentation: Apply sensitivity labels to Fabric items)

- Endorse items (Microsoft Documentation: Endorse Fabric and Power BI items)

Orchestrate processes

- Choose between a pipeline and a notebook (Microsoft Documentation: How to use Microsoft Fabric notebooks)

- Design and implement schedules and event-based triggers (Microsoft Documentation: Create a trigger that runs a pipeline in response to a storage event)

- Implement orchestration patterns with notebooks and pipelines, including parameters and dynamic expressions (Microsoft Documentation: Use Fabric Data Factory Data Pipelines to Orchestrate Notebook-based Workflows)

2. Ingest and transform data (30–35%)

Design and implement loading patterns

- Design and implement full and incremental data loads (Microsoft Documentation: Incrementally load data from a source data store to a destination data store)

- Prepare data for loading into a dimensional model (Microsoft Documentation: Dimensional modeling in Microsoft Fabric Warehouse: Load tables)

- Design and implement a loading pattern for streaming data (Microsoft Documentation: Microsoft Fabric event streams – overview)
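A common way to implement the full and incremental loads listed above is a high-watermark pattern. The pipeline records the latest modification timestamp it has already loaded, and on each run it copies only source rows that are newer. The sketch below models the source and destination as in-memory structures. The `modified` column and the `state` dictionary are assumptions that stand in for a real source table and a control table, and rows are upserted by `id` so that changed rows replace their earlier versions.

```python
from datetime import datetime


def incremental_load(source: list[dict], destination: dict, state: dict) -> int:
    """Copy rows modified after the stored watermark into destination (upsert by id)."""
    # No watermark yet means the first run behaves as a full load.
    watermark = state.get("watermark", datetime.min)
    changed = [row for row in source if row["modified"] > watermark]
    for row in changed:
        destination[row["id"]] = dict(row)  # insert new ids, overwrite changed ones
    if changed:
        state["watermark"] = max(row["modified"] for row in changed)
    return len(changed)


source = [
    {"id": 1, "name": "Ada", "modified": datetime(2024, 1, 1)},
    {"id": 2, "name": "Lin", "modified": datetime(2024, 1, 2)},
]
destination: dict = {}
state: dict = {}
print(incremental_load(source, destination, state))  # 2: first run copies everything

source[1] = {"id": 2, "name": "Lin Z", "modified": datetime(2024, 1, 5)}
source.append({"id": 3, "name": "Sam", "modified": datetime(2024, 1, 4)})
print(incremental_load(source, destination, state))  # 2: one updated row, one new row
print(incremental_load(source, destination, state))  # 0: nothing changed since last run
```

One caveat of this design: a row whose `modified` value is earlier than the stored watermark, for example because of clock skew at the source, would be skipped. Implementations often reread a small overlap window to guard against this.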

Ingest and transform batch data

- Choose an appropriate data store (Microsoft Documentation: Microsoft Fabric decision guide: choose a data store)

- Choose between dataflows, notebooks, and T-SQL for data transformation (Microsoft Documentation: Move and transform data with dataflows and data pipelines)

- Create and manage shortcuts to data (Microsoft Documentation: Data quality for Microsoft Fabric shortcut databases)

- Implement mirroring (Microsoft Documentation: What is Mirroring in Fabric?)

- Ingest data by using pipelines (Microsoft Documentation: Ingest data into your Warehouse using data pipelines)

- Transform data by using PySpark, SQL, and KQL (Microsoft Documentation: Transform data with Apache Spark and query with SQL, Use a notebook with Apache Spark to query a KQL database)

- Denormalize data

- Group and aggregate data

- Handle duplicate, missing, and late-arriving data (Microsoft Documentation: Handle duplicate data in Azure Data Explorer)
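Duplicate and late-arriving records are often handled together. The approach is to keep only the most recent version of each key, judged by an event timestamp rather than by arrival order, so that an older record arriving late cannot overwrite newer data. Missing values can then be filled with an explicit default. The field names and the `"unknown"` default below are hypothetical.

```python
def clean_records(records: list[dict], key: str, ts: str, defaults: dict) -> list[dict]:
    """Keep the newest record per key (by event time) and fill missing fields with defaults."""
    latest: dict = {}
    for rec in records:
        current = latest.get(rec[key])
        # Compare event timestamps, not arrival order, so a late-arriving older row never wins.
        # Exact duplicates have equal timestamps and are dropped because of the strict '>'.
        if current is None or rec[ts] > current[ts]:
            latest[rec[key]] = rec
    cleaned = []
    for rec in latest.values():
        filled = dict(rec)
        for field, value in defaults.items():
            if filled.get(field) is None:
                filled[field] = value
        cleaned.append(filled)
    return cleaned


arrivals = [
    {"order_id": "A", "event_time": 10, "status": "created"},
    {"order_id": "B", "event_time": 11, "status": None},
    {"order_id": "A", "event_time": 15, "status": "shipped"},
    {"order_id": "A", "event_time": 12, "status": "paid"},  # late: older than the row above
    {"order_id": "B", "event_time": 11, "status": None},    # exact duplicate
]
for row in clean_records(arrivals, "order_id", "event_time", {"status": "unknown"}):
    print(row)
```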

Ingest and transform streaming data

- Choose an appropriate streaming engine (Microsoft Documentation: Choose a stream processing technology in Azure, Configure streaming ingestion on your Azure Data Explorer cluster)

- Process data by using eventstreams (Microsoft Documentation: Process data streams in Fabric event streams)

- Process data by using Spark structured streaming (Microsoft Documentation: Get streaming data into lakehouse with Spark structured streaming)

- Process data by using KQL (Microsoft Documentation: Query data in a KQL queryset)

- Create windowing functions (Microsoft Documentation: Introduction to Stream Analytics windowing functions)
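Windowing functions group an unbounded stream into finite time windows. The sketch below implements two common window types in plain Python. Tumbling windows have a fixed size and do not overlap. Hopping windows have a fixed size but advance by a smaller hop, so they overlap. Event times are integer seconds, and each window is labeled by its start time; both are assumptions for the illustration. Azure Stream Analytics and Spark Structured Streaming provide built-in versions of these windows.

```python
from collections import Counter


def tumbling_counts(times: list[int], size: int) -> dict[int, int]:
    """Count events per non-overlapping window [start, start + size)."""
    return dict(sorted(Counter((t // size) * size for t in times).items()))


def hopping_counts(times: list[int], size: int, hop: int) -> dict[int, int]:
    """Count events per overlapping window [start, start + size), with starts every `hop` seconds."""
    counts: Counter = Counter()
    for t in times:
        # Earliest hop-aligned start whose window still contains t (start > t - size).
        start = ((t - size) // hop + 1) * hop
        while start <= t:
            counts[start] += 1
            start += hop
    return dict(sorted(counts.items()))


times = [12, 14, 17, 23, 31]
print(tumbling_counts(times, size=10))        # {10: 3, 20: 1, 30: 1}
print(hopping_counts(times, size=10, hop=5))  # {5: 2, 10: 3, 15: 2, 20: 1, 25: 1, 30: 1}
```

Because hopping windows overlap, each event is counted in `size / hop` windows (two here). When the hop equals the size, the two functions return the same result.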

3. Monitor and optimize an analytics solution (30–35%)

Monitor Fabric items

- Monitor data ingestion (Microsoft Documentation: Demystifying Data Ingestion in Fabric)

- Monitor data transformation (Microsoft Documentation: Data Factory)

- Monitor semantic model refresh (Microsoft Documentation: Use the Semantic model refresh activity to refresh a Power BI Dataset)

- Configure alerts (Microsoft Documentation: Set alerts based on Fabric events in Real-Time hub)

Identify and resolve errors

- Identify and resolve pipeline errors (Microsoft Documentation: Errors and Conditional execution, Troubleshoot lifecycle management issues)

- Identify and resolve dataflow errors

- Identify and resolve notebook errors

- Identify and resolve eventhouse errors (Microsoft Documentation: Automating Real-Time Intelligence Eventhouse deployment using PowerShell)

- Identify and resolve eventstream errors (Microsoft Documentation: Troubleshoot Data Activator errors)

- Identify and resolve T-SQL errors

Optimize performance

- Optimize a lakehouse table (Microsoft Documentation: Delta Lake table optimization and V-Order)

- Optimize a pipeline

- Optimize a data warehouse (Microsoft Documentation: Synapse Data Warehouse in Microsoft Fabric performance guidelines)

- Optimize eventstreams and eventhouses (Microsoft Documentation: Microsoft Fabric event streams – overview, Eventhouse overview)

- Optimize Spark performance (Microsoft Documentation: What is autotune for Apache Spark configurations in Fabric?)

- Optimize query performance (Microsoft Documentation: Query insights in Fabric data warehousing, Synapse Data Warehouse in Microsoft Fabric performance guidelines)

– Hands-on Practice

Practical experience is the most effective way to understand Microsoft Fabric’s data engineering ecosystem. While theoretical knowledge is important, the exam will test your ability to apply concepts to real-world scenarios. Gain hands-on experience with Fabric services such as:

- Lakehouses – Work with structured and unstructured data, optimize storage, and implement data management strategies.

- Data Pipelines – Develop, schedule, and monitor ETL workflows using Microsoft Fabric’s Data Factory capabilities.

- Notebooks – Use PySpark, Spark SQL, and Python in Fabric notebooks to analyze and process large datasets.

- Data Warehouses – Design, optimize, and query Synapse Data Warehouses to ensure high-performance analytics.

Consider setting up end-to-end projects, such as ingesting data from multiple sources, transforming it with Spark notebooks, and analyzing the results using Power BI. This approach will strengthen your problem-solving skills and reinforce key exam concepts.

– Effective Time Management

Balancing different exam topics while managing your daily responsibilities can be challenging. Creating a structured study plan is essential for staying on track. Follow these steps to maximize efficiency:

- Break down the syllabus into smaller sections and assign study time based on topic difficulty and importance.

- Use a calendar or planner to set deadlines for completing specific modules or hands-on labs.

- Allocate more time to weaker areas while maintaining a steady review of stronger topics.

- Incorporate active learning techniques such as summarizing notes, explaining concepts to others, and applying knowledge in practice tests.

- Stick to a study routine, whether it’s daily or weekly, to ensure consistent progress without last-minute cramming.

– Conceptual Understanding Over Memorization

The DP-700 exam is not just about recalling facts—it tests your ability to analyze scenarios and make data-driven decisions. Microsoft Fabric operates as a comprehensive platform integrating various services, each with specific use cases and best practices. Instead of memorizing individual commands or configurations, focus on:

- Understanding the architecture of Fabric’s services and how they interconnect.

- Learning the reasoning behind optimizations, such as when to use Direct Lake mode vs. Import mode in Power BI.

- Exploring real-world scenarios, like handling large-scale streaming data with Event Streams and Eventhouses.

- Developing troubleshooting skills, so you can resolve errors in data pipelines and optimize slow-performing queries effectively.

– Regular Practice

Consistent and structured practice is essential for retaining knowledge and improving problem-solving skills. To ensure well-rounded preparation:

- Dedicate time each week to hands-on labs, using a Microsoft Fabric trial capacity or another workspace you have access to.

- Work on real-life projects involving data ingestion, transformation, storage, and visualization to understand practical applications.

- Take frequent quizzes and practice exams to familiarize yourself with the exam format and identify weak areas.

- Review incorrect answers carefully to understand mistakes and refine your approach.

- Engage in peer discussions or study groups to exchange insights and different perspectives on exam topics.

Microsoft Learn Resources

Microsoft provides extensive learning resources through Microsoft Learn, which serves as an essential platform for preparing for the DP-700 exam.

- Microsoft Learn Modules: Microsoft Learn offers structured learning paths that cover each exam objective in depth. These modules include interactive lessons, hands-on labs, and knowledge checks.

- Microsoft Documentation: The official Microsoft Fabric documentation is a vital resource for understanding the latest features and functionalities. As Fabric is a relatively new platform, keeping up with the latest updates and best practices through official documentation is highly recommended.

- Fabric-Specific Learning Paths: Because the exam is built entirely around Microsoft Fabric, explore the dedicated learning paths within Microsoft Learn that focus on Fabric’s data engineering, storage, and real-time analytics capabilities.

Practice Exams and Mock Tests

To assess your knowledge and identify gaps, practice exams are essential. They not only familiarize you with the exam format but also improve your confidence before the actual test.

- Official Microsoft Practice Tests: If available, take Microsoft’s official practice tests to gauge your readiness. These tests closely mimic the structure and difficulty level of the real exam.

- Third-Party Practice Exams: Many reputable online platforms offer DP-700 mock exams that simulate real-world scenarios. Ensure you choose up-to-date resources that align with the latest exam objectives.

- Result Analysis and Review: After each practice exam, analyze your performance. Identify the areas where you made mistakes, review the explanations for incorrect answers, and revisit the study materials as needed.

Community Resources and Support

Engaging with the broader Microsoft Fabric and data engineering community can provide additional insights and keep you updated on industry trends.

- Microsoft Fabric Community Forums: Participate in Microsoft Fabric forums to ask questions, share knowledge, and stay informed about platform updates. Learning from real-world experiences of other professionals can enhance your understanding.

- Online Forums and Technical Blogs: Follow technical blogs, LinkedIn posts, and discussion forums where experts share insights, best practices, and study tips for the DP-700 exam.

- Study Groups and Peer Learning: Joining a study group can be a great way to reinforce learning. Collaborating with peers allows for knowledge-sharing, discussing complex topics, and gaining different perspectives on exam concepts.

Conclusion

Mastering the DP-700 exam and the intricacies of Microsoft Fabric signifies a profound understanding of modern data engineering practices. From the foundational OneLake, streamlining data storage and access, to the dynamic capabilities of Synapse Data Engineering, Data Warehousing, Real-Time Analytics, and the seamless integration with Power BI, this platform offers a unified and powerful environment for data professionals. By grasping the core concepts, architecture, data ingestion and transformation techniques, storage management, analytical tools, performance optimizations, and monitoring strategies, you’ll be well-equipped to design and implement robust data solutions.

Embrace the hands-on experience, use the wealth of Microsoft Learn resources, and engage with the vibrant Fabric community. This journey not only prepares you for the certification but also positions you to excel in the rapidly evolving world of data analytics, empowering you to drive impactful insights and transform data into actionable intelligence. The DP-700 certification is more than just a credential; it’s a testament to your capability to navigate and leverage the future of data engineering within the Microsoft Fabric ecosystem.