As an aspiring AWS Machine Learning Specialty certified professional, you need to have a strong understanding of various AWS services, tools, and techniques related to machine learning. This cheat sheet will provide you with a quick and concise reference guide to the key concepts, terminologies, and best practices that you need to know for the exam.

This cheat sheet is divided into different sections, each covering a specific topic relevant to the AWS Machine Learning Specialty certification exam. You’ll find useful information on AWS services, such as Amazon SageMaker, Amazon Rekognition, and Amazon Comprehend, as well as on machine learning algorithms, model training, and deployment. Additionally, the cheat sheet includes tips and tricks for optimizing machine learning models, handling data processing and management, and ensuring data security and privacy.

Whether you’re just starting to learn about machine learning on AWS or you’re preparing for the certification exam, this cheat sheet is a valuable resource that will help you to solidify your understanding of the key concepts and prepare you to pass the exam with confidence. So, let’s dive in and start exploring the AWS Machine Learning Specialty Cheat Sheet!

What is AWS Machine Learning Specialty?

The AWS Machine Learning Specialty certification exam is offered by Amazon Web Services. Machine learning on AWS lets developers use algorithms to discover patterns in data, construct mathematical models based on those patterns, and then design and run predictive applications. The exam verifies a candidate’s ability to use the AWS Cloud to design, build, train, tune, deploy, and manage machine learning (ML) solutions for a variety of business challenges. Passing it demonstrates that the candidate can:

- Choose and provide valid reasons for selecting the suitable machine learning approach for a specific business issue.

- Recognize the AWS services that are suitable for implementing machine learning solutions.

- Create and execute machine learning solutions that are scalable, cost-effective, dependable, and secure.

AWS Machine Learning Specialty Glossary

- AWS Machine Learning – A web-based service that enables developers to create and deploy machine learning models on a large scale.

- Algorithm – A set of instructions that a machine learning model follows to perform a specific task, such as classification or regression.

- AutoML – Automated Machine Learning, a set of tools and techniques that enable developers to automatically build, train, and optimize machine learning models without the need for manual intervention.

- Batch inference – The process of using a trained machine learning model to make predictions on a large dataset in one go.

- Data preprocessing – The procedure of refining and converting raw data into a format suitable for utilization by a machine learning model.

- Deep learning – A form of machine learning that employs artificial neural networks to represent intricate patterns within data.

- Ensemble learning – The process of combining multiple machine learning models to improve the accuracy and robustness of predictions.

- Feature engineering – The procedure of choosing and modifying features (such as variables) within a dataset to enhance the efficiency of a machine learning model.

- Hyperparameter tuning – The process of optimizing the settings (i.e., hyperparameters) of a machine learning model to achieve the best performance on a given dataset.

- Inference – The procedure of employing a trained machine learning model to generate forecasts on fresh data.

- ML pipeline – A series of steps that are used to build, train, and deploy a machine learning model.

- Model deployment – The process of making a trained machine learning model available for use by other applications or services.

- Model training – The process of training a machine learning model on a dataset to learn the underlying patterns in the data.

- Overfitting – A situation in which a machine learning model performs well on the training data but poorly on new, unseen data.

- Reinforcement learning – A form of machine learning that includes instructing a model to make decisions by considering feedback from its surroundings.

- SageMaker – A fully-managed machine learning service provided by AWS that allows developers to build, train, and deploy machine learning models at scale.

- Supervised learning – A machine learning category that encompasses instructing a model using labeled data, which means data that has already been categorized or classified.

- Unsupervised learning – A type of machine learning that involves training a model on unlabeled data (i.e., data that has not been categorized).

Exam preparation resources for the AWS Machine Learning Specialty exam

Here are some official resources for AWS Machine Learning Specialty exam preparation:

- AWS Machine Learning Specialty Exam Guide: This guide provides an overview of the exam, its format, and what to expect. It also includes a list of recommended AWS services, whitepapers, and other resources for exam preparation. You can find the guide here: https://d1.awsstatic.com/training-and-certification/docs-ml/AWS-Certified-Machine-Learning-Specialty_Exam-Guide.pdf

- AWS Certified Machine Learning Specialty Learning Path: This learning path on the AWS website provides free training resources for the exam. It includes video courses, hands-on labs, and other resources to help you prepare for the exam. You can find the learning path here: https://aws.amazon.com/training/learning-paths/machine-learning/

- AWS Whitepapers: AWS offers a number of whitepapers on machine learning that can be useful for exam preparation. These include “Introduction to Machine Learning on AWS”, “Building Machine Learning Pipelines on AWS”, and “Amazon SageMaker Technical Whitepaper”. You can find the whitepapers here: https://aws.amazon.com/whitepapers/

- AWS Sample Exam Questions: AWS offers a set of sample exam questions to help you prepare for the exam. These questions are designed to give you an idea of the types of questions you can expect on the actual exam. You can find the sample questions here: https://d1.awsstatic.com/training-and-certification/docs-ml/AWS-Certified-Machine-Learning-Specialty_Sample-Questions.pdf

- AWS Machine Learning Specialty Exam Readiness: This course on the AWS website provides an overview of the exam and tips for exam preparation. It includes a practice exam to help you assess your readiness for the actual exam. You can find the course here: https://aws.amazon.com/training/course-descriptions/machine-learning-specialty-exam-readiness/

Cheat Sheet: AWS Machine Learning Specialty

The AWS Machine Learning Specialty Cheat Sheet is all you need to get started on your revision. It gives you a brief overview of the material you need to cover to pass the exam and earn the certification.

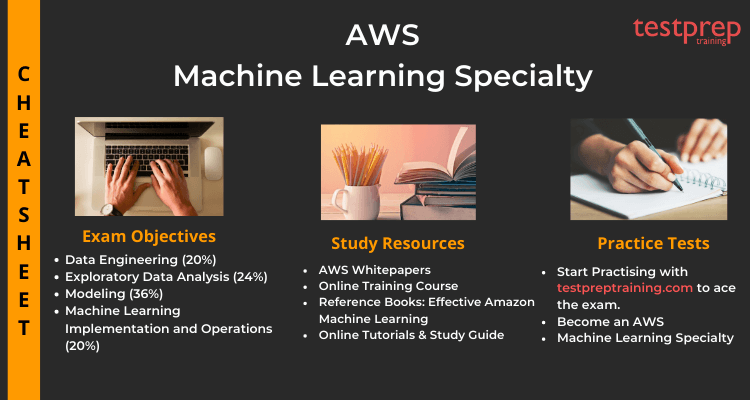

1. Familiarise with Exam Objectives

The first step is to gather the exam details and the course outline. Before you begin preparing, familiarise yourself with the exam objectives. The course outline serves as the exam’s blueprint: it lists the key topics and concepts that will be tested. Consult the Exam Guide throughout your preparation. The AWS Machine Learning Specialty exam covers the following domains:

Domain 1: Data Engineering (20%)

1.1 Create data repositories for ML.

- Identify data sources (e.g., content and location, primary sources such as user data) (AWS Documentation: Supported data sources)

- Determine storage mediums (for example, databases, Amazon S3, Amazon Elastic File System [Amazon EFS], Amazon Elastic Block Store [Amazon EBS]). (AWS Documentation: Using Amazon S3 with Amazon ML, Creating a Datasource with Amazon Redshift Data, Using Data from an Amazon RDS Database, Host instance storage volumes, Amazon Machine Learning and Amazon Elastic File System)

1.2 Identify and implement a data ingestion solution.

- Identify data job styles and job types (for example, batch load, streaming).

- Orchestrate data ingestion pipelines (batch-based ML workloads and streaming-based ML workloads).

- Amazon Kinesis (AWS Documentation: Amazon Kinesis Data Streams)

- Amazon Data Firehose

- Amazon EMR (AWS Documentation: Process Data Using Amazon EMR with Hadoop Streaming, Optimize downstream data processing)

- AWS Glue (AWS Documentation: Simplify data pipelines, AWS Glue)

- Amazon Managed Service for Apache Flink

- Schedule Job (AWS Documentation: Job scheduling, Time-based schedules for jobs and crawlers)

1.3 Identify and implement a data transformation solution.

- Transform data in transit (ETL: AWS Glue, Amazon EMR, AWS Batch) (AWS Documentation: extract, transform, and load data for analytic processing using AWS Glue)

- Handle ML-specific data by using MapReduce (for example, Apache Hadoop, Apache Spark, Apache Hive). (AWS Documentation: Large-Scale Machine Learning with Spark on Amazon EMR, Apache Hive on Amazon EMR, Apache Spark on Amazon EMR, Use Apache Spark with Amazon SageMaker, Perform interactive data engineering and data science workflows)

Domain 2: Exploratory Data Analysis (24%)

2.1 Sanitize and prepare data for modeling.

- Identify and handle missing data, corrupt data, stop words, etc. (AWS Documentation: Managing missing values in your target and related datasets, Amazon SageMaker DeepAR now supports missing values, Configuring Text Analysis Schemes)

- Formatting, normalizing, augmenting, and scaling data (AWS Documentation: Understanding the Data Format for Amazon ML, Common Data Formats for Training, Data Transformations Reference, AWS Glue DataBrew, Easily train models using datasets, Visualizing Amazon SageMaker machine learning predictions)

- Determine whether there is sufficient labeled data. (AWS Documentation: data labeling for machine learning, Amazon Mechanical Turk, Use Amazon Mechanical Turk with Amazon SageMaker)

- Identify mitigation strategies.

- Use data labelling tools (for example, Amazon Mechanical Turk).
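To make the data-preparation items above concrete, here is a minimal sketch of two common steps: filling missing values with the column mean and min-max scaling. It uses plain Python only. The function names and the sample `ages` column are illustrative assumptions, not part of any AWS API.

```python
from statistics import mean


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical column with one missing entry.
ages = [20, None, 40, 60]
filled = impute_mean(ages)
scaled = min_max_scale(filled)
print(filled)  # [20, 40, 40, 60]
print(scaled)  # [0.0, 0.5, 0.5, 1.0]
```

Mean imputation is only one option. Depending on the data, a median, a constant, or a model-based estimate may be a better choice.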

2.2 Perform feature engineering.

- Identify and extract features from data sets, including from data sources such as text, speech, image, public datasets, etc. (AWS Documentation: Feature Processing, Feature engineering, Amazon Textract, Amazon Textract features)

- Analyze/evaluate feature engineering concepts (binning, tokenization, outliers, synthetic features, one-hot encoding, reducing dimensionality of data) (AWS Documentation: Data Transformations Reference, Building a serverless tokenization solution to mask sensitive data, ML-powered anomaly detection for outliers, ONE_HOT_ENCODING, Running Principal Component Analysis, Perform a large-scale principal component analysis)
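Two of the feature engineering concepts listed above, one-hot encoding and binning, can be sketched in a few lines of plain Python. The helper names, the colour values, and the age edges below are assumptions chosen for illustration.

```python
from bisect import bisect_right


def one_hot(values):
    """Encode categorical values as 0/1 vectors, one column per category."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        rows.append(row)
    return categories, rows


def bin_value(x, edges):
    """Return the bin index of x for ascending edges.

    With edges [18, 65], values below 18 fall in bin 0,
    values from 18 up to (not including) 65 in bin 1, and the rest in bin 2.
    """
    return bisect_right(edges, x)


categories, encoded = one_hot(["red", "green", "red", "blue"])
print(categories)  # ['blue', 'green', 'red']
print(encoded)     # [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]

print([bin_value(age, [18, 65]) for age in (17, 18, 64, 65)])  # [0, 1, 1, 2]
```

Binning turns a continuous feature into an ordinal one, which can help models that struggle with non-linear relationships. One-hot encoding avoids implying an order between categories.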

2.3 Analyze and visualize data for ML.

- Create Graphs (scatter plot, time series, histogram, box plot) (AWS Documentation: Using scatter plots, Run a query that produces a time series visualization, Using histograms, Using box plots)

- Interpreting descriptive statistics (correlation, summary statistics, p value)

- Perform cluster analysis (for example, hierarchical, diagnosis, elbow plot, cluster size).
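As a small illustration of the descriptive statistics mentioned above, the sketch below computes the Pearson correlation coefficient between two features from first principles. The function name and sample data are assumptions. It does not compute a p value, which would require a statistical library or a distribution table.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    covariance = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mx) ** 2 for x in xs))
    spread_y = sqrt(sum((y - my) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# A perfectly linear increasing relationship gives a value close to +1,
# a perfectly linear decreasing one gives a value close to -1.
print(round(pearson([1, 2, 3, 4], [2, 4, 6, 8]), 6))
print(round(pearson([1, 2, 3], [3, 2, 1]), 6))
```

A coefficient near zero means there is no linear relationship. The features may still be related in a non-linear way, which is one reason to plot the data as well.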

Domain 3: Modeling (36%)

3.1 Frame business problems as ML problems.

- Determine when to use and when not to use ML (AWS Documentation: When to Use Machine Learning)

- Know the difference between supervised and unsupervised learning

- Select from among classification, regression, forecasting, clustering, recommendation, and foundation models. (AWS Documentation: K-means clustering with Amazon SageMaker, Building a customized recommender system in Amazon SageMaker)

3.2 Select the appropriate model(s) for a given ML problem.

- XGBoost, logistic regression, K-means, linear regression, decision trees, random forests, RNN, CNN, Ensemble, Transfer learning (AWS Documentation: XGBoost Algorithm, K-means clustering with Amazon SageMaker, Forecasting financial time series, Amazon Forecast can now use Convolutional Neural Networks, Detecting hidden but non-trivial problems in transfer learning models)

- Express intuition behind models

3.3 Train ML models.

- Split data between training and validation (for example, cross validation). (AWS Documentation: Train a Model, Incremental Training, Managed Spot Training, Validate a Machine Learning Model, Cross-Validation, Model support, metrics, and validation, Splitting Your Data)

- Understand optimization techniques for ML training (for example, gradient descent, loss functions, convergence).

- Choose appropriate compute resources (for example GPU or CPU, distributed or non-distributed).

- Choose appropriate compute platforms (Spark or non-Spark).

- Update and retrain models (AWS Documentation: Retraining Models on New Data, Automating model retraining and deployment)

- Batch vs. real-time/online
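The idea of splitting data between training and validation with cross validation can be sketched as follows. This is a simplified k-fold index generator written for illustration. The function name is an assumption, and it uses contiguous folds, so in practice the data would usually be shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for k contiguous folds.

    Fold sizes differ by at most one when n_samples is not divisible by k.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        stop = start + size
        validation = list(range(start, stop))
        train = list(range(0, start)) + list(range(stop, n_samples))
        yield train, validation
        start = stop


for train, validation in k_fold_indices(5, 2):
    print(train, validation)
# [3, 4] [0, 1, 2]
# [0, 1, 2] [3, 4]
```

Each sample appears in exactly one validation fold, so averaging the validation metric across folds gives a less noisy estimate than a single hold-out split.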

3.4 Perform hyperparameter optimization.

- Perform Regularization (AWS Documentation: Training Parameters)

- Dropout

- L1/L2

- Perform Cross validation (AWS Documentation: Cross-Validation)

- Model initialization

- Neural network architecture (layers/nodes), learning rate, activation functions

- Understand tree-based models (number of trees, number of levels).

- Understand linear models (learning rate).
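To show how the learning rate and L2 regularization interact in a linear model, here is a minimal sketch of batch gradient descent for one-dimensional linear regression. The function name, default values, and toy data are assumptions. The loss is mean squared error plus `l2 * w**2`.

```python
def fit_linear_gd(xs, ys, learning_rate=0.05, l2=0.0, epochs=2000):
    """Fit y = w * x + b by gradient descent on MSE + l2 * w**2."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradient of the mean squared error, plus the L2 penalty on w only.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n + 2 * l2 * w
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1.
xs = [0, 1, 2, 3]
ys = [1, 3, 5, 7]

w, b = fit_linear_gd(xs, ys)
print(round(w, 3), round(b, 3))  # approximately 2.0 and 1.0

w_reg, b_reg = fit_linear_gd(xs, ys, l2=1.0)
print(round(w_reg, 3), round(b_reg, 3))  # the penalty shrinks w towards zero
```

A learning rate that is too large makes the updates diverge, while one that is too small needs many more epochs. A larger `l2` value trades a worse fit on the training data for a smaller weight, which is the basic mechanism behind using regularization against overfitting.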

3.5 Evaluate ML models.

- Avoid overfitting and underfitting

- Detect and handle bias and variance (AWS Documentation: Underfitting vs. Overfitting, Amazon SageMaker Clarify Detects Bias and Increases the Transparency, Amazon SageMaker Clarify)

- Evaluate metrics (for example, area under curve [AUC]-receiver operating characteristics [ROC], accuracy, precision, recall, Root Mean Square Error [RMSE], F1 score).

- Interpret confusion matrix (AWS Documentation: Custom classifier metrics)

- Offline and online model evaluation (A/B testing) (AWS Documentation: Validate a Machine Learning Model, Machine Learning Lens)

- Compare models using metrics (time to train a model, quality of model, engineering costs) (AWS Documentation: Easily monitor and visualize metrics while training models, Model Quality Metrics, Monitor model quality)

- Cross validation (AWS Documentation: Cross-Validation, Model support, metrics, and validation)
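The evaluation metrics listed above follow directly from the confusion matrix. The sketch below derives accuracy, precision, recall, and F1 for a binary classifier, plus RMSE for regression. The function names and sample labels are illustrative assumptions.

```python
from math import sqrt


def binary_metrics(y_true, y_pred):
    """Compute confusion-matrix counts and derived metrics for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "tp": tp, "tn": tn, "fp": fp, "fn": fn,
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def rmse(y_true, y_pred):
    """Root mean square error for regression predictions."""
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
print(binary_metrics(y_true, y_pred))
# tp=3, tn=3, fp=1, fn=1, so accuracy, precision, recall and F1 are all 0.75
```

Precision answers "of the predicted positives, how many were right", while recall answers "of the actual positives, how many were found". Which one matters more depends on the cost of false positives versus false negatives in the business problem.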

Domain 4: Machine Learning Implementation and Operations (20%)

4.1 Build ML solutions for performance, availability, scalability, resiliency, and fault tolerance. (AWS Documentation: Review the ML Model’s Predictive Performance, Best practices, Resilience in Amazon SageMaker)

- Log and monitor AWS environments (AWS Documentation: Logging and Monitoring)

- AWS CloudTrail and Amazon CloudWatch (AWS Documentation: Logging Amazon ML API Calls with AWS CloudTrail, Log Amazon SageMaker API Calls, Monitoring Amazon ML, Monitor Amazon SageMaker)

- Build error monitoring solutions (AWS Documentation: ML Platform Monitoring)

- Deploy to multiple AWS Regions and multiple Availability Zones. (AWS Documentation: Regions and Endpoints, Best practices)

- AMI and golden image (AWS Documentation: AWS Deep Learning AMI)

- Docker containers (AWS Documentation: Why use Docker containers for machine learning development, Using Docker containers with SageMaker)

- Deploy Auto Scaling groups (AWS Documentation: Automatically Scale Amazon SageMaker Models, Configuring autoscaling inference endpoints)

- Rightsizing resources, for example:

- Instances (AWS Documentation: Ensure efficient compute resources on Amazon SageMaker)

- Provisioned IOPS (AWS Documentation: Optimizing I/O for GPU performance tuning of deep learning)

- Volumes (AWS Documentation: Customize your notebook volume size, up to 16 TB)

- Load balancing (AWS Documentation: Managing your machine learning lifecycle)

- AWS best practices (AWS Documentation: Machine learning best practices in financial services)

4.2 Recommend and implement the appropriate ML services and features for a given problem.

- ML on AWS (application services)

- Amazon Polly (AWS Documentation: Amazon Polly, Build a unique Brand Voice with Amazon Polly)

- Amazon Lex (AWS Documentation: Amazon Lex, Build more effective conversations on Amazon Lex)

- Amazon Transcribe (AWS Documentation: Amazon Transcribe, Transcribe speech to text in real time)

- Amazon Q

- Understand AWS service quotas (AWS Documentation: Amazon SageMaker endpoints and quotas, Amazon Machine Learning endpoints and quotas, System Limits)

- Determine when to build custom models and when to use Amazon SageMaker built-in algorithms.

- Understand AWS infrastructure (for example, instance types) and cost considerations.

- Using spot instances to train deep learning models using AWS Batch (AWS Documentation: Train Deep Learning Models on GPUs)

4.3 Apply basic AWS security practices to ML solutions.

- AWS Identity and Access Management (IAM) (AWS Documentation: Controlling Access to Amazon ML Resources, Identity and Access Management in AWS Deep Learning Containers)

- S3 bucket policies (AWS Documentation: Using Amazon S3 with Amazon ML, Granting Amazon ML Permissions to Read Your Data from Amazon S3)

- Security groups (AWS Documentation: Secure multi-account model deployment with Amazon SageMaker, Use an AWS Deep Learning AMI)

- VPC (AWS Documentation: Securing Amazon SageMaker Studio connectivity, Direct access to Amazon SageMaker notebooks, Building secure machine learning environments)

- Encryption and anonymization (AWS Documentation: Protect Data at Rest Using Encryption, Protecting Data in Transit with Encryption, Anonymize and manage data in your data lake)

4.4 Deploy and operationalize ML solutions.

- Exposing endpoints and interacting with them (AWS Documentation: Creating a machine learning-powered REST API, Call an Amazon SageMaker model endpoint)

- Understand ML models.

- A/B testing (AWS Documentation: A/B Testing ML models in production, Dynamic A/B testing for machine learning models)

- Retrain pipelines (AWS Documentation: Automating model retraining and deployment, Machine Learning Lens)

- Debug and troubleshoot ML models (AWS Documentation: Debug Your Machine Learning Models, Analyzing open-source ML pipeline models in real time, Troubleshoot Amazon SageMaker model deployments)

- Detect and mitigate drop in performance (AWS Documentation: Identify bottlenecks, improve resource utilization, and reduce ML training costs, Optimizing I/O for GPU performance tuning of deep learning training)

- Monitor performance of the model (AWS Documentation: Monitor models for data and model quality, bias, and explainability, Monitoring in-production ML models at large scale)
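For the item on exposing endpoints and interacting with them, the following is a hedged sketch of calling a deployed Amazon SageMaker endpoint through the `invoke_endpoint` operation of the boto3 `sagemaker-runtime` client. It assumes the model accepts CSV input and returns text. The helper names and the endpoint name `my-endpoint` are hypothetical, and the client is passed in so the helpers can be exercised without AWS credentials.

```python
def rows_to_csv(rows):
    """Serialize a list of feature rows as CSV text, one row per line."""
    return "\n".join(",".join(str(value) for value in row) for row in rows)


def predict(runtime_client, endpoint_name, rows):
    """Send rows to a SageMaker endpoint and return the raw response text.

    runtime_client is expected to behave like
    boto3.client("sagemaker-runtime").
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",  # assumption: the model accepts CSV input
        Body=rows_to_csv(rows),
    )
    # The response body is a stream; read and decode it.
    return response["Body"].read().decode("utf-8")


# Example usage (requires boto3, AWS credentials, and a deployed endpoint):
#
#   import boto3
#   client = boto3.client("sagemaker-runtime")
#   print(predict(client, "my-endpoint", [[5.1, 3.5, 1.4, 0.2]]))
```

The expected content type and the response format depend on the algorithm or container behind the endpoint, so check the model's documentation before relying on CSV.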

2. Know about the Learning Resources

Many resources are available to help you prepare for the exam, but you need to decide which ones are useful to you. The right resources let you achieve more in less time. The sections below list the resources you are likely to need to pass the exam.

– AWS Machine Learning Whitepapers

The AWS team offers several whitepapers aimed at enhancing your technical expertise. These whitepapers are developed exclusively by the AWS team, analysts, and other AWS collaborators. You may wish to focus your attention on the following whitepapers:

- Power Machine Learning at Scale

- Managing Machine Learning Projects

- Machine Learning Foundations: Evolution of ML and AI

- Augmented AI: The Power of Human and Machine

- Machine Learning Lens – AWS Well-Architected Framework

– Online Training Courses

AWS Machine Learning certification training is available in a variety of formats. You can choose the training programs that best suit you based on the curriculum and the time you have available. Both online and instructor-led classes are offered, and both provide an interactive learning environment. You can also get your questions answered and take practice tests alongside the courses on the same site. For more training options, visit the Amazon training library for machine learning.

– Recommended Progression

- Machine Learning Exam Basics

- Process Model: CRISP-DM on the AWS Stack

- The Elements of Data Science

- Storage Deep Dive Learning Path

- Machine Learning Security

- Developing Machine Learning Applications

- Types of Machine Learning Solutions

– Branching content areas

- Communicating with Chat Bots

- Speaking of: Machine Translation and NLP

- Seeing Clearly: Computer Vision Theory

– Optional training

3. Reference Books

Books remain one of the most valuable resources. For the AWS Machine Learning Specialty exam, you can consult a number of them. Choose any book that covers all areas of the curriculum and is written in a style you are comfortable with. Available titles include:

- Mastering Machine Learning on AWS: Advanced machine learning in Python using SageMaker, Apache Spark, and TensorFlow

- Effective Amazon Machine Learning

- Learning Amazon Web Services (AWS): A Hands-On Guide to the Fundamentals of AWS Cloud | First Edition | By Pearson

4. Online Tutorials and Study Guide

Online tutorials help you deepen your knowledge and better understand the exam topics. The AWS Machine Learning tutorials also cover exam details and policies, so working through them will improve your preparedness. Study guides are another valuable resource as you prepare for the AWS Machine Learning Specialty exam, and they help you stay consistent and motivated.

5. Attempt Practice Tests

Taking AWS Machine Learning practice exams is one of the most effective ways to prepare for a good score. Concepts become clearer as you practise, so work through sample papers and take as many practice tests as you can. Doing so shows you which areas still need work and which you are already prepared for. This is the most crucial part of the preparation process. Many educational websites offer sample papers and practice tests.